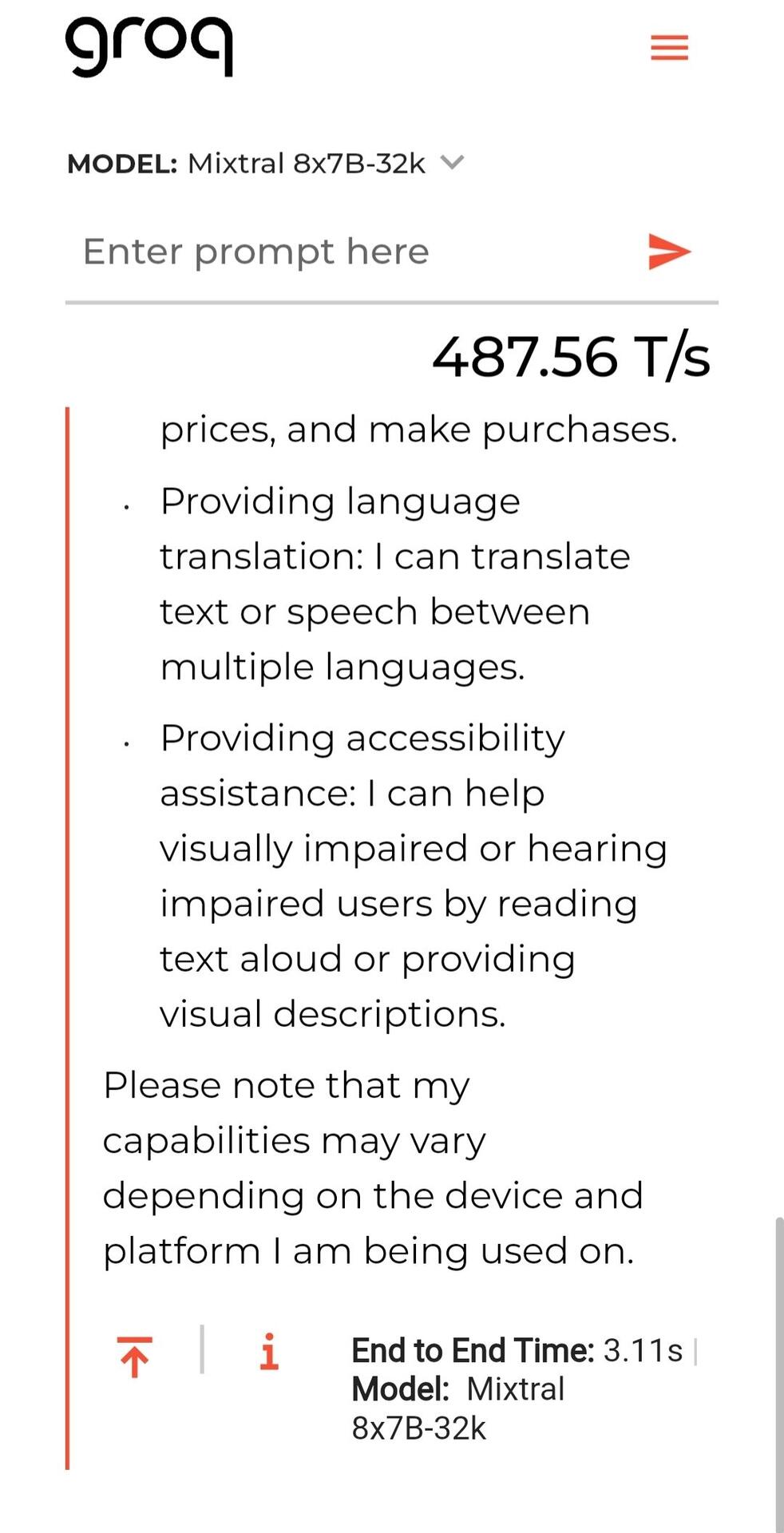

Groq claims its LPU (Language Processing Unit) is faster than Nvidia GPUs at inference: it handles requests and returns responses more quickly.

Groq's LPUs have no HBM in their system, so they don't depend on data being delivered at high speed from external memory the way Nvidia GPUs do. Instead they use on-chip SRAM, which is said to be about 20 times faster than the memory GPUs use. Because inference moves far less data than model training, the LPU reads less from external memory and draws less power than an Nvidia GPU on inference tasks, which makes it more energy-efficient.
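To see why memory speed matters so much for inference, here is a rough back-of-the-envelope sketch. It assumes token generation is limited purely by how fast the model's weights can be read from memory, one full read per generated token. The function name, the model size, and the baseline bandwidth are hypothetical placeholders, not measured figures for any Groq or Nvidia product; only the 20x ratio comes from the claim above.

```python
def decode_tokens_per_second(model_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Upper bound on tokens/s if every weight byte is read once per token.

    Assumption: generation is purely memory-bandwidth-bound, which ignores
    compute time, batching, and inter-chip communication.
    """
    if model_bytes <= 0 or bandwidth_bytes_per_s <= 0:
        raise ValueError("model size and bandwidth must be positive")
    return bandwidth_bytes_per_s / model_bytes


# Hypothetical placeholder values, chosen only to make the arithmetic concrete.
MODEL_BYTES = 14e9          # e.g. 7 billion parameters at 2 bytes each
BASELINE_BANDWIDTH = 1e12   # 1 TB/s, an illustrative baseline memory speed
SPEEDUP = 20                # the "about 20 times faster" figure from the text

baseline = decode_tokens_per_second(MODEL_BYTES, BASELINE_BANDWIDTH)
faster = decode_tokens_per_second(MODEL_BYTES, BASELINE_BANDWIDTH * SPEEDUP)

print(f"baseline memory: {baseline:.1f} tokens/s upper bound")
print(f"20x faster memory: {faster:.1f} tokens/s upper bound")
```

Under this simplified model the throughput ceiling scales linearly with memory speed, so a 20x faster memory raises the ceiling 20x. Real systems will land below that bound.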

The LPU works differently from GPUs. It uses a Temporal Instruction Set Computer architecture, so it doesn't have to reload data from memory as often as GPUs do with High Bandwidth Memory (HBM). That sidesteps HBM supply shortages and helps keep costs down.

If sites that run AI workloads adopt Groq's LPU, they may not need the specialized storage that Nvidia GPU deployments call for, because the LPU doesn't demand super-fast storage the way GPUs do. Groq claims that its chip and software together could replace GPUs for AI tasks.
