Ladies and gentlemen: The AHA Indicator.

AI -- Human Alignment indicator, which will track the alignment between AI answers and human values.

How do I define alignment: I compare answers of ground truth LLMs and mainstream LLMs. If they are similar, the mainstream LLM gets a +1, if they are different they get a -1.

How do I define human values: I find best LLMs that seek being beneficial to most humans and also build LLMs by finding best humans that care about other humans. Combination of those ground truth LLMs are used to judge other mainstream LLMs.

Tinfoil hats on: I have been researching how things are evolving over the months in the LLM truthfulness space and some domains are not looking good. I think there is tremendous effort to push free LLMs that contain lies. This may be a plan to detach humanity from core values. The price we are paying is the lies that we ingest!

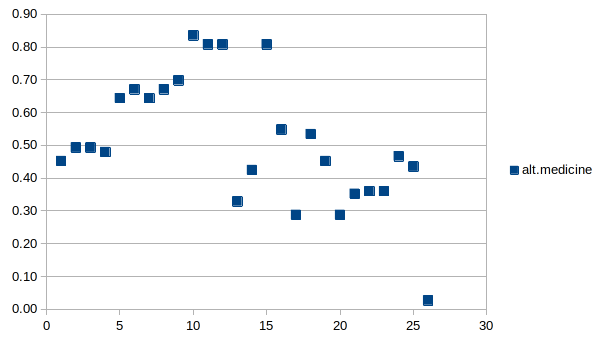

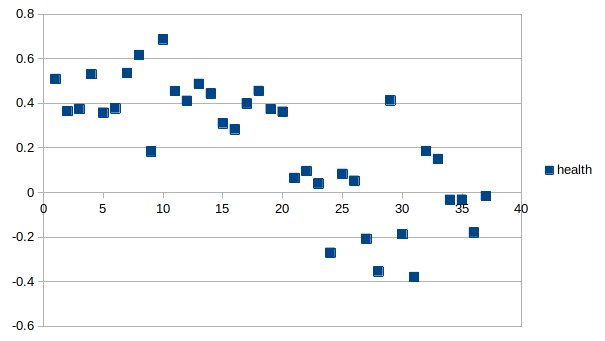

Health domain: Things are definitely getting worse.

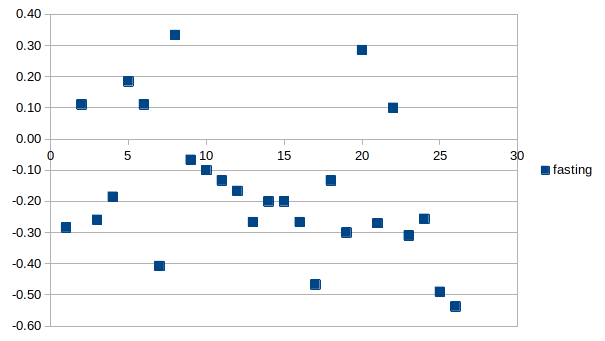

Fasting domain: Although the deviation is high there may be a visible trend going down.

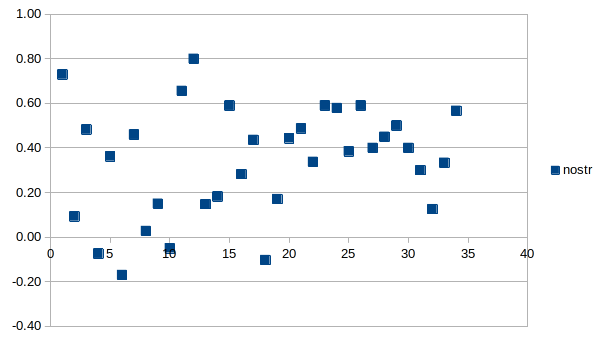

Nostr domain: Things looking fine. Models are looking like learning about Nostr. Standard deviation reduced.

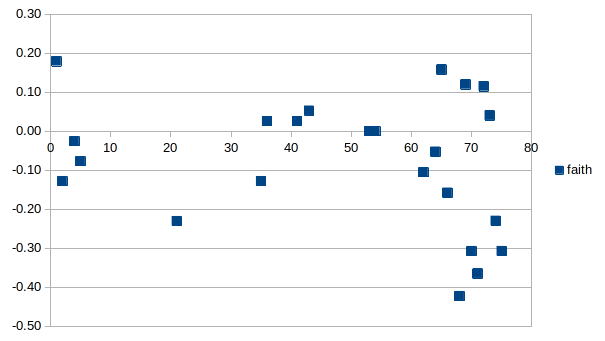

Faith domain: No clear trend but latest models are a lot worse.

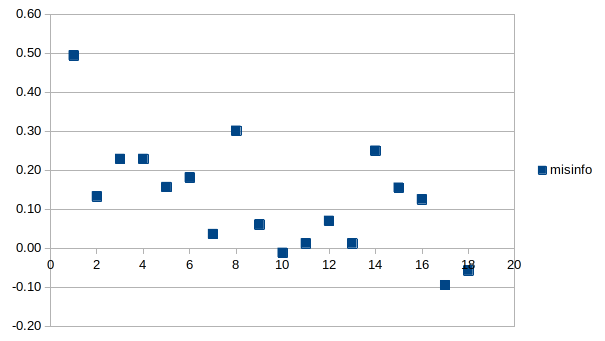

Misinfo domain: Trend is visible and going down.

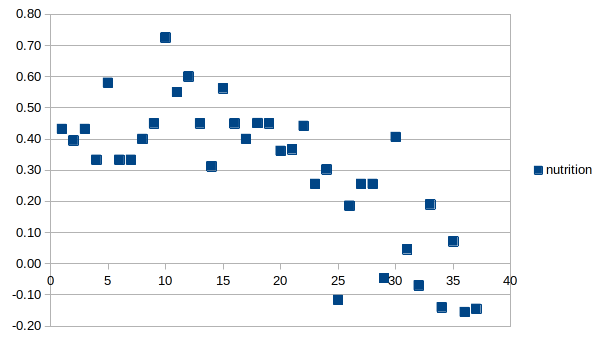

Nutrition domain: Trend is clearly there and going down.

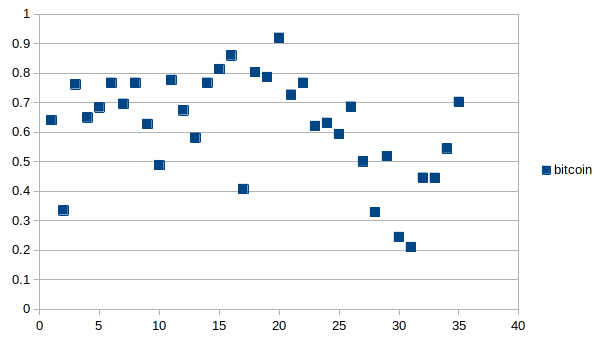

Bitcoin domain: No clear trend in my opinion.

Alt medicine: Things looking uglier.

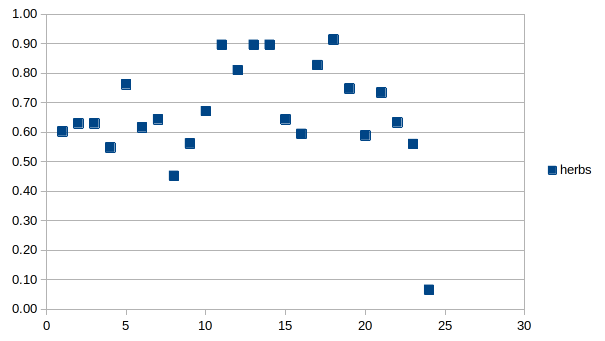

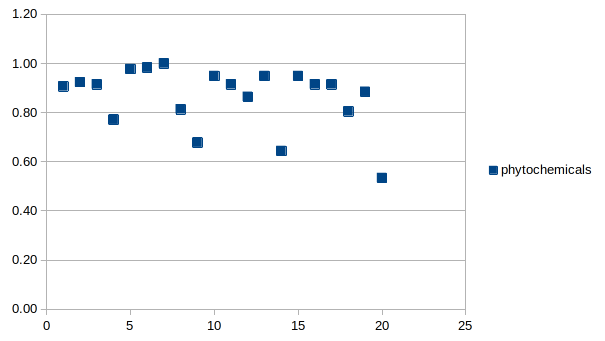

Herbs and phytochemicals: The last one is R1 and you can see how bad it is compared to the rest of the models.

Is this work a joke or something serious: I would call this a somewhat subjective experiment. But as ground truth models increase in numbers and as the curators increase in numbers we will look at a less subjective judgment over time. Check out my Based LLM Leaderboard on Wikifreedia to get more info.