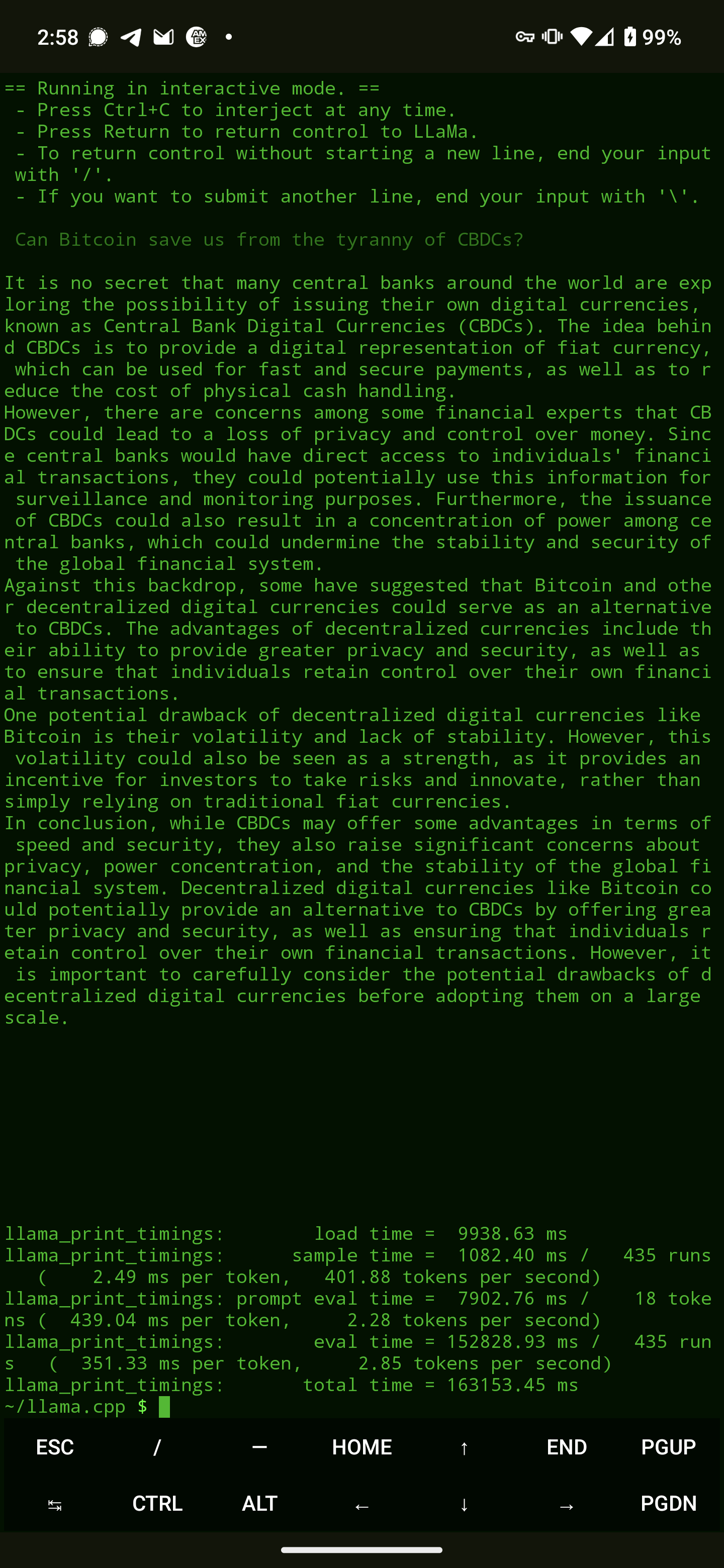

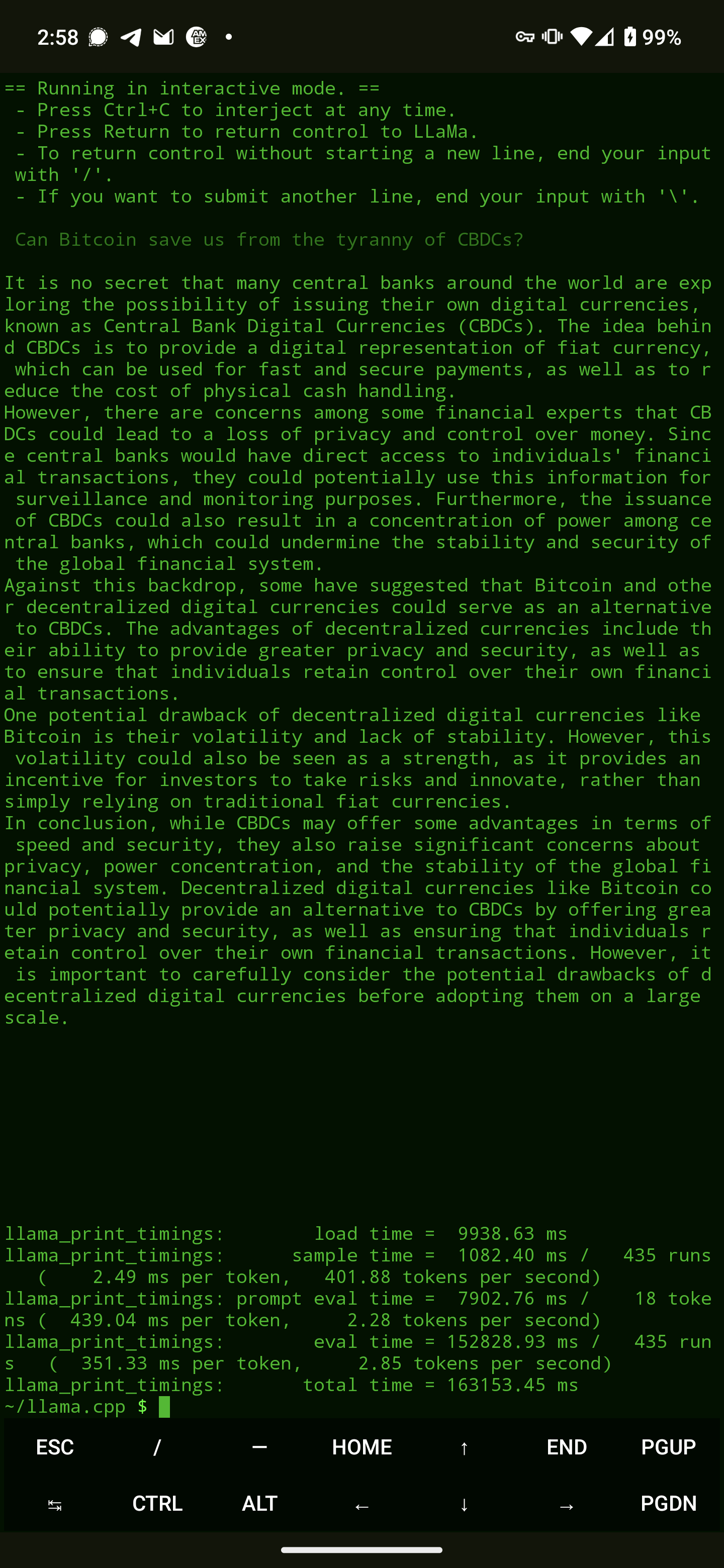

For anyone else playing with LLaMA 2, the new k-quant models are definitely the ones to go for. I just compared the two, and the Q4_K_S model is much faster, uses less RAM, and produces higher-quality output than the regular Q4.

As someone new, what's a good starting point to get this up and running?

If you're already familiar with Linux in general, this repo will tell you what to do.

To get the actual models, look up "TheBloke" on Hugging Face.

Good work mate 👏