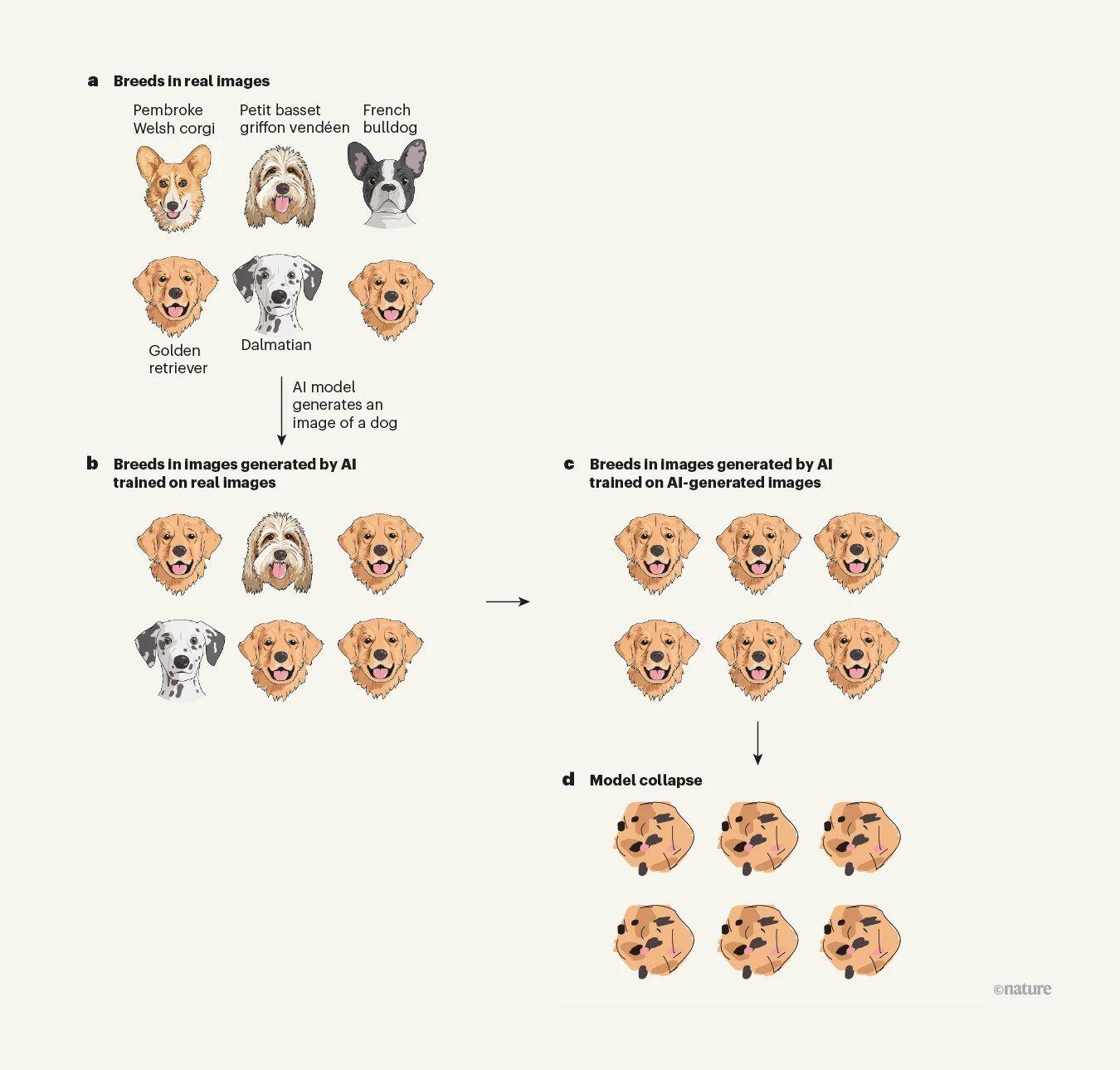

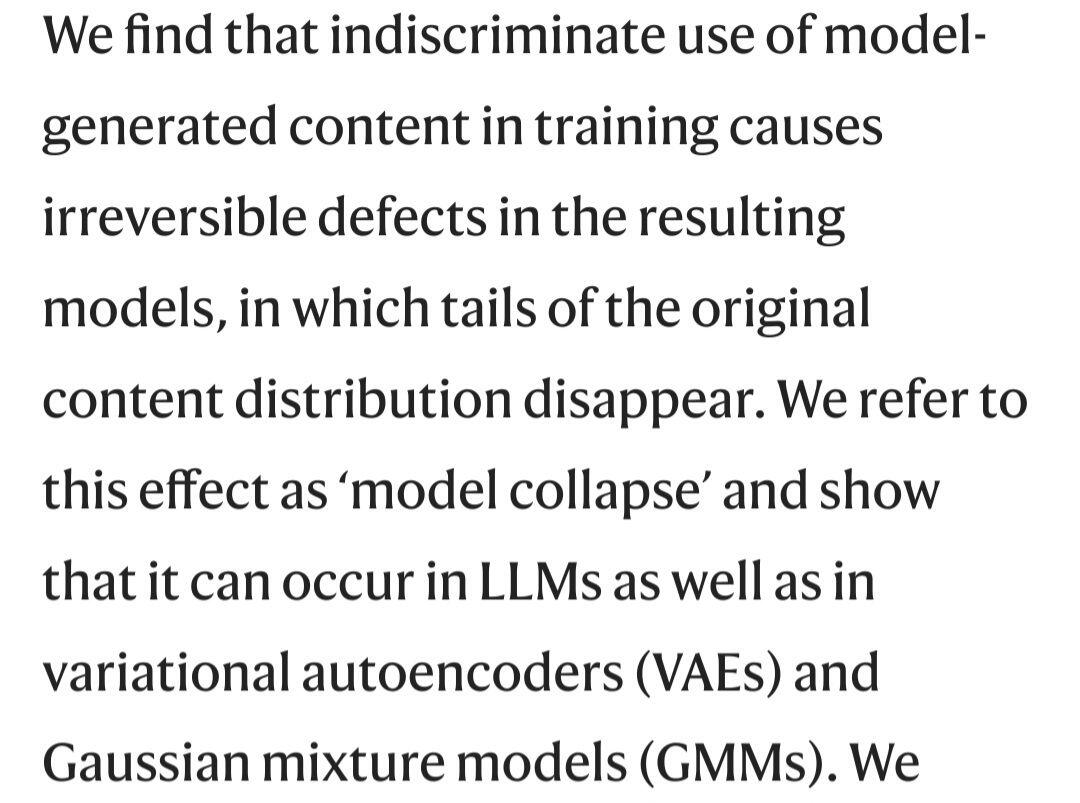

A study published in Nature finds that AI models trained on their own outputs become increasingly prone to errors, until the model eventually collapses.

As many artists and other observers have predicted, training AI on AI-generated images instead of real reference images will degrade the quality of realistic AI art over time.

When an AI model is trained on AI outputs instead of real-world references, breakdown becomes inevitable over time, because each generation inherits and compounds the errors of the one before it.
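To make the compounding effect concrete, here is a toy sketch. It is my own illustration, not the paper's code: the "model" is just a bell curve (a mean and a spread), and each generation is refitted only to a small sample drawn from the previous generation's model, never to real data. The function name `simulate_collapse` and the sample sizes are arbitrary choices for illustration.

```python
import random
import statistics


def simulate_collapse(generations: int, samples_per_gen: int, seed: int = 0) -> list[float]:
    """Toy model-collapse loop (illustrative only).

    Generation 0 is the "real world": a normal distribution with mean 0, std 1.
    Each later generation fits a mean and standard deviation to samples drawn
    from the previous generation's fitted distribution.
    Returns the fitted standard deviation for every generation.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # the original, real-data distribution
    history = [sigma]
    for _ in range(generations):
        # "Train" only on the previous model's outputs.
        data = [rng.gauss(mu, sigma) for _ in range(samples_per_gen)]
        mu = statistics.fmean(data)
        # Maximum-likelihood (population) std; small samples tend to underestimate spread.
        sigma = statistics.pstdev(data, mu)
        history.append(sigma)
    return history


if __name__ == "__main__":
    spreads = simulate_collapse(generations=1000, samples_per_gen=10)
    for gen in (0, 10, 100, 1000):
        print(f"generation {gen}: std = {spreads[gen]:.3g}")
```

With only 10 samples per generation, the fitted spread tends to shrink toward zero: the variety present in the original data is gradually lost, a simplified version of the degradation the study describes.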

https://www.nature.com/articles/s41586-024-07566-y

#AI #Artificial #Study #Research #Art