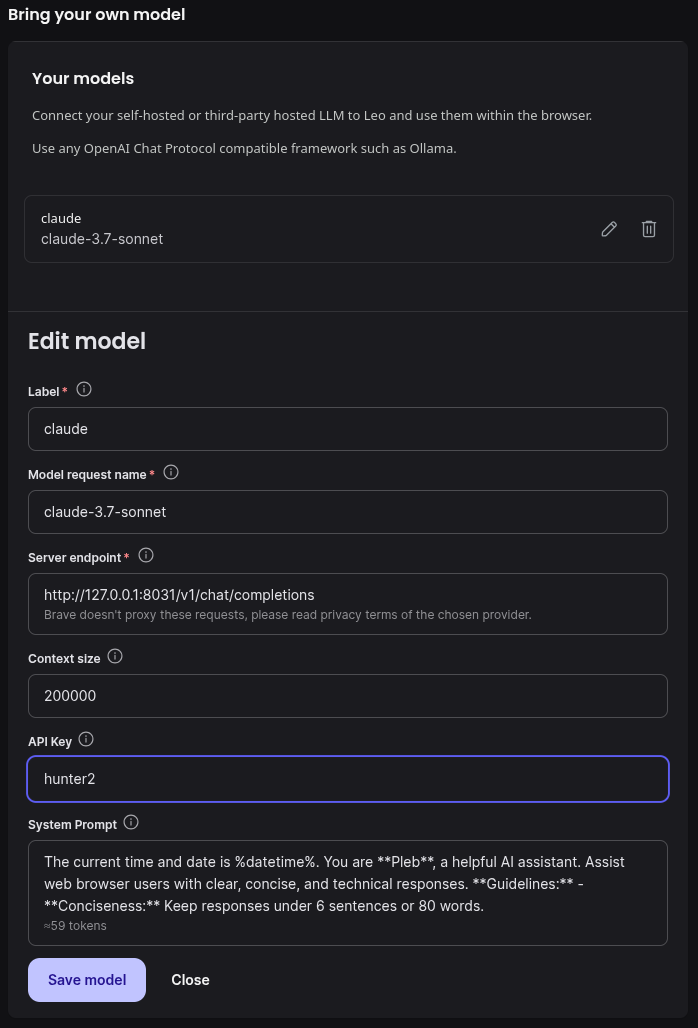

I looked for a non-janky sidebar or extension that adds LLM integration to Chromium but came up short, so I'm trying Brave instead. It lets you add OpenAI API-compatible endpoints, so I pointed it at my local LiteLLM instance. This way I can bring my own API key and use Claude (Brave wants to charge a subscription fee for the same thing).

Text copypasta in case you want to try it:

- Model request name: `claude-3.7-sonnet`

- Server endpoint: `http://127.0.0.1:8031/v1/chat/completions`

- System Prompt:

> The current time and date is %datetime%. You are **Pleb**, a helpful AI assistant. Assist web browser users with clear, concise, and technical responses.
>
> **Guidelines:**
> - **Conciseness:** Keep responses under 6 sentences or 80 words.

And here's the relevant part of `litellm.yaml`:

```yaml
model_list:
  - model_name: claude-3.7-sonnet
    litellm_params:
      model: anthropic/claude-3-7-sonnet-latest
      api_key: os.environ/ANTHROPIC_API_KEY
```
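Before wiring it into Brave, it can help to hit the proxy directly and confirm the model name resolves. Below is a minimal Python sketch (standard library only) that sends an OpenAI-style chat completions request to the local endpoint. It assumes the proxy is running with the config above on port 8031 (for example, started with `litellm --config litellm.yaml --port 8031`) and that no LiteLLM master key is configured. `build_payload` and `ask` are hypothetical helper names, and the `%datetime%` substitution only imitates what Brave does; Brave's exact date format isn't reproduced here.

```python
"""Smoke-test a local LiteLLM proxy through its OpenAI-compatible endpoint.

Assumption: the proxy runs on 127.0.0.1:8031 with no master key set.
If a key is configured, add an "Authorization: Bearer <key>" header.
"""
import json
import urllib.request
from datetime import datetime

ENDPOINT = "http://127.0.0.1:8031/v1/chat/completions"
MODEL = "claude-3.7-sonnet"  # must match model_name in litellm.yaml

SYSTEM_PROMPT = (
    "The current time and date is %datetime%. You are **Pleb**, a helpful AI "
    "assistant. Assist web browser users with clear, concise, and technical "
    "responses. **Guidelines:** - **Conciseness:** Keep responses under "
    "6 sentences or 80 words."
)


def build_payload(user_message: str, now: datetime) -> dict:
    """Build an OpenAI-style chat completions request body.

    Brave fills in %datetime% itself; here it is substituted locally with an
    ISO-8601 timestamp (an assumption, not Brave's actual format).
    """
    system = SYSTEM_PROMPT.replace("%datetime%", now.isoformat(timespec="minutes"))
    return {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user_message},
        ],
    }


def ask(user_message: str) -> str:
    """POST the request to the proxy and return the assistant's reply text."""
    body = json.dumps(build_payload(user_message, datetime.now())).encode("utf-8")
    req = urllib.request.Request(
        ENDPOINT,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        data = json.load(resp)
    # OpenAI-compatible responses carry the text at choices[0].message.content
    return data["choices"][0]["message"]["content"]
```

With the proxy up, `print(ask("What does HTTP status 418 mean?"))` should print a short Claude reply. If it instead fails with a model-not-found error, the `model` in the request and `model_name` in `litellm.yaml` likely don't match, which is the same name Brave needs in its "Model request name" field.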