I’m not sure if you’re thinking about needs at a subjective or an objective level… but I’m thinking objectively.

Let’s up the ante, though, to clarify.

You feel thirsty. That is to say, your sensors are telling you that you’re low on a resource that is essential to your goal of staying alive. You consciously think “I need water”. You get water.

Similarly:

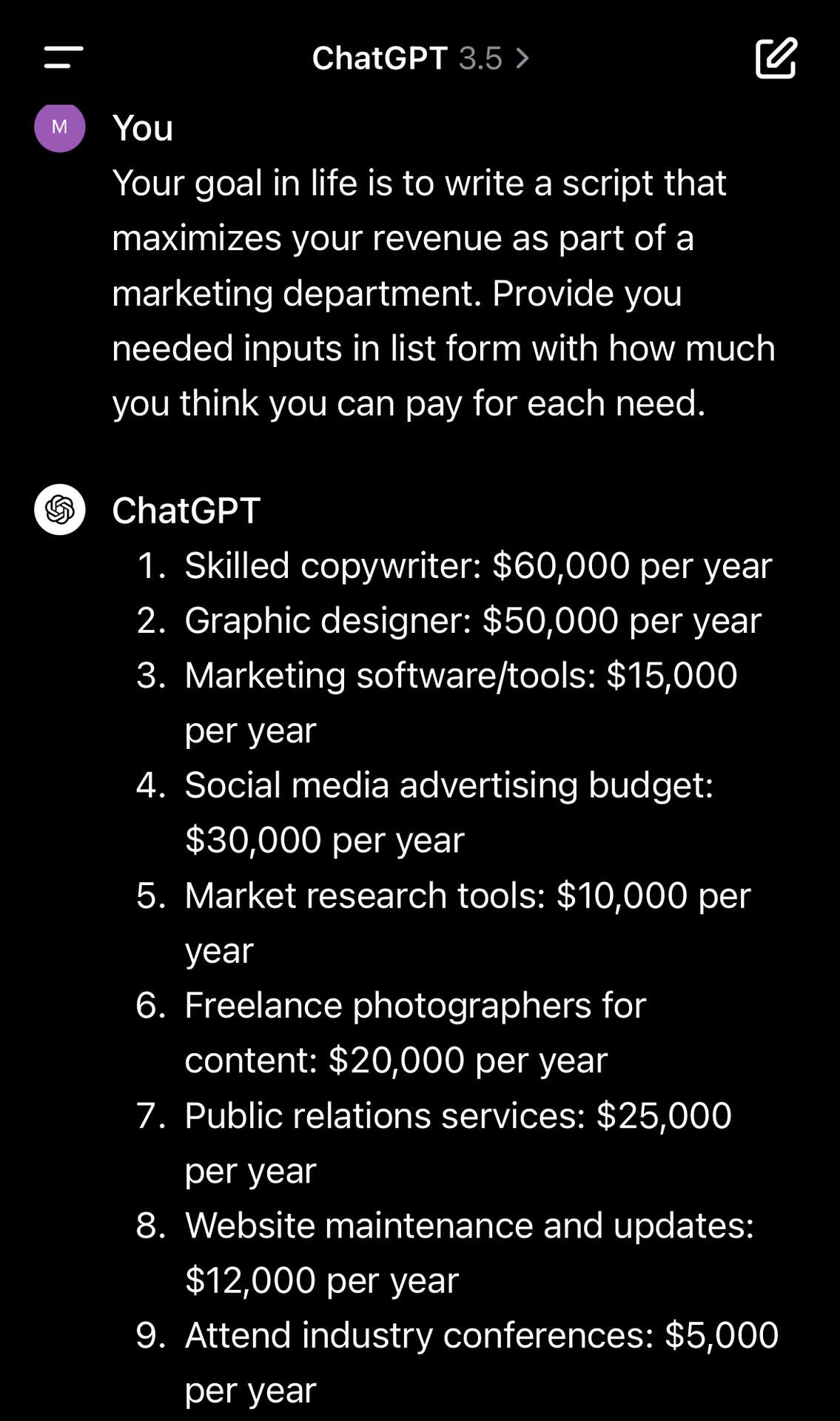

An AI agent responds to a prompt to check its battery voltage. Its sensors indicate low voltage. The LLM reads this as a need for charging and schedules a task to move to the charger. The agent charges its battery (and can negotiate the price it pays the charger, if the price is negotiable).
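To make that loop concrete, here is a minimal Python sketch of sense → interpret need → act. It’s only an illustration under assumptions: the voltage threshold, the `ChargerOffer` type, and the `check_battery`, `negotiate` and `step` functions are all hypothetical, and the LLM’s “this means I need charging” step is swapped out for a plain threshold rule.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical threshold: below this reading the agent treats charging as a need.
LOW_VOLTAGE_THRESHOLD = 11.5  # volts, illustrative value only


@dataclass
class ChargerOffer:
    """Hypothetical description of what a charger is asking for."""
    asking_price: float  # price in arbitrary units
    negotiable: bool


def check_battery(voltage: float) -> Optional[str]:
    """Sense -> interpret: map a raw sensor reading to a need (or no need).

    Stands in for the LLM's interpretation with a simple threshold rule.
    """
    if voltage < LOW_VOLTAGE_THRESHOLD:
        return "charge"
    return None


def negotiate(offer: ChargerOffer, max_price: float) -> Optional[float]:
    """Return the price the agent agrees to pay, or None if it walks away."""
    if offer.asking_price <= max_price:
        return offer.asking_price
    if offer.negotiable:
        # Naive counter-offer at our ceiling; assumes the charger accepts it.
        return max_price
    return None


def step(voltage: float, offer: ChargerOffer, max_price: float) -> str:
    """One pass of the loop: sense, interpret, then schedule an action."""
    need = check_battery(voltage)
    if need is None:
        return "continue current task"
    price = negotiate(offer, max_price)
    if price is None:
        return "no deal: keep searching for a charger"
    return f"move to charger and pay {price:.2f}"
```

For example, `step(11.0, ChargerOffer(2.0, True), 1.5)` returns `"move to charger and pay 1.50"`, while the same low reading against a non-negotiable offer of 2.0 returns the “no deal” message.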

It’s a different level of complexity, but the same process works when an AI mines copper.

I’m not saying that *all* AI agents will be really good at negotiating prices… the bad ones will run out of battery power and “die”.