Thoughts on local DeepSeek-r1:14b

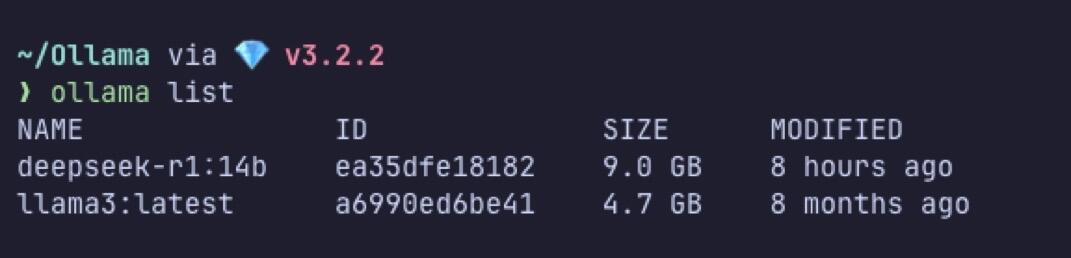

I only have these two models locally, so I can only compare DeepSeek-r1 to Llama3 (7b). I have an Obsidian AI chat plugin that uses Ollama. I use it to add context to my notes, reword certain sentences, or give suggestions on certain things.

DeepSeek does feel a bit “smarter” than Llama3. I say “feel” because I don’t really have an objective way to benchmark and measure both models.

Then again, it might not be an “apples to apples” comparison: Llama3 is 7b and DeepSeek-r1 is 14b. I am just pleasantly surprised that I could run it comfortably on a MacBook M2 / 16GB RAM.

Yes, I know that what I’m using is a distilled version of the model and not the full model. Still, I feel that it performs better than Llama3.

I love reading the

I have yet to try the online version; their server is always busy for me. 🤷♂️

#ai #deepseek #ollama  nostr:note1ak4au7zd8d4h44gvvz9pwhq7fn4glk6kx0s6xtjqp7aq0q0mf8ssxugztr