Facebook has been doing this for two decades.

This is the entire business model of https://monkeytype.com/

OpenAI and Nvidia have deployed the OPPT (online plunge protection team).

This only happens if you use it on some cloud server. How would this happen if I run it on my mats/ollama instance?

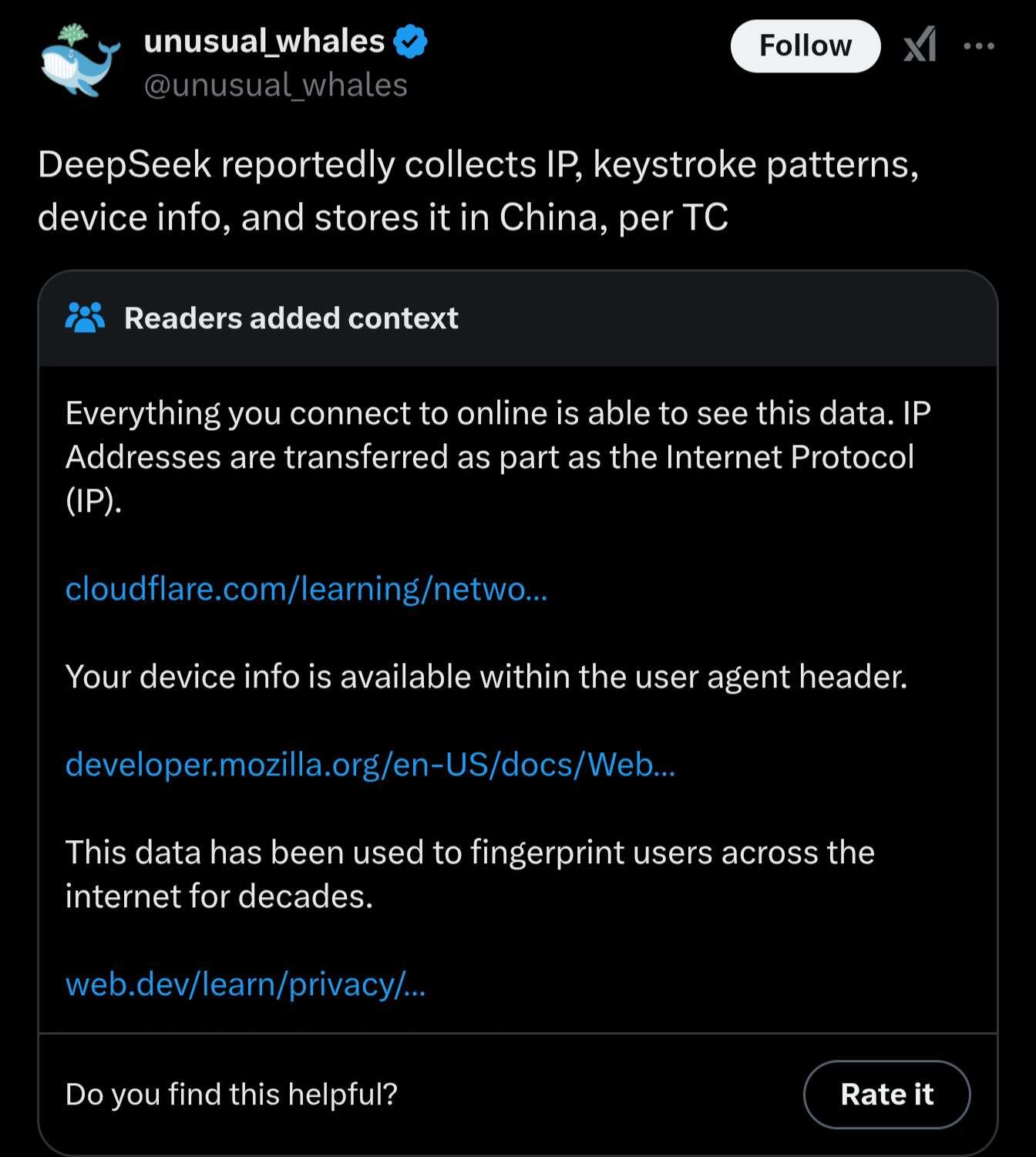

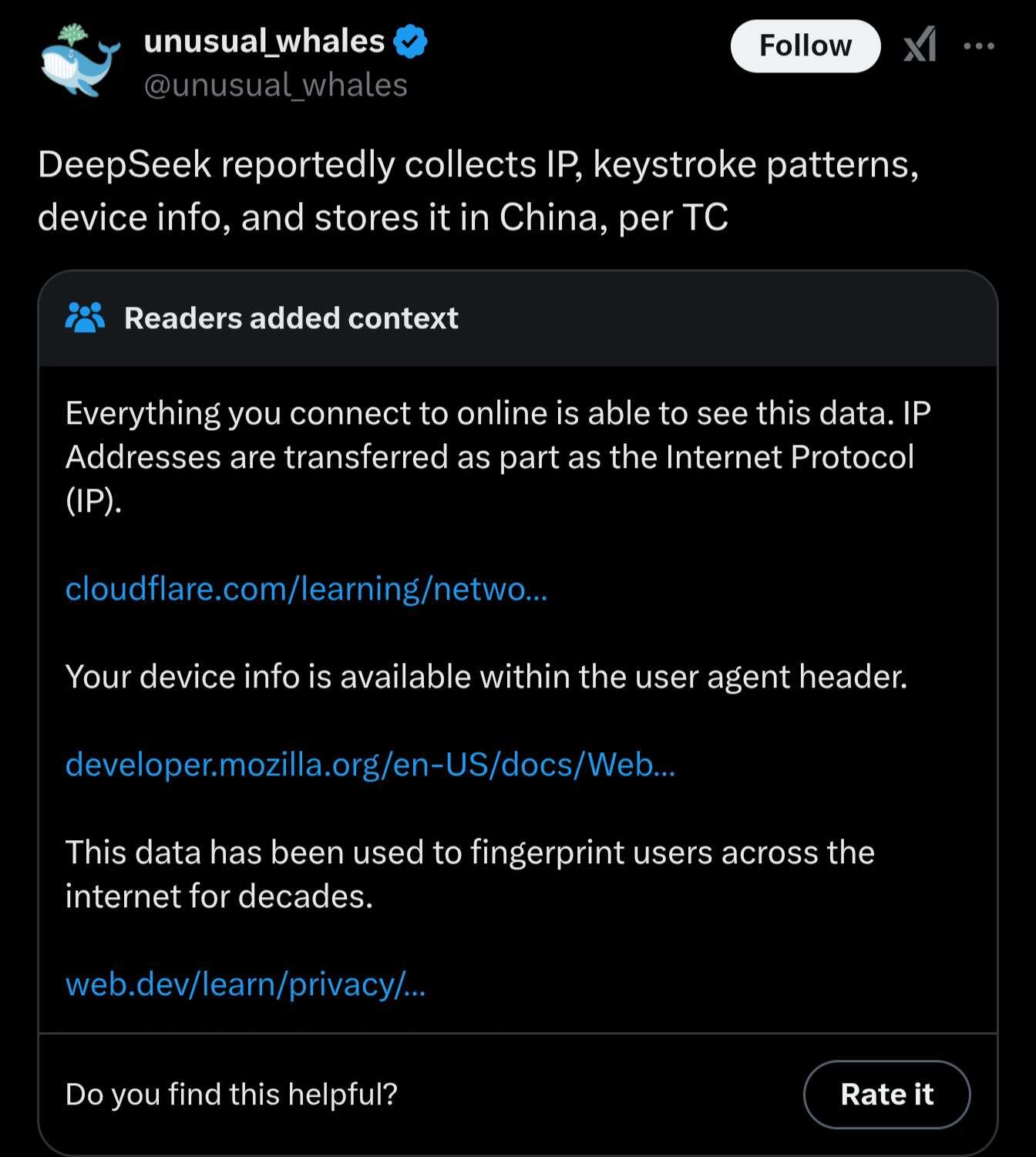

It doesn't. It only collects data and censors the output if you use their native app. This whole drama is nothing but fearmongering.

I'm running it with DeepInfra, the replies I'm getting are not censored, and as far as I can tell there is no data collection.

The censoring isn't even built in. That's why you see it give a response for a few seconds before it gets cut off, probably by a secondary model whose job is to censor the output.
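For anyone wondering what that kind of setup could look like, here is a rough sketch of the "stream first, filter afterwards" pattern. It is a guess at the general shape, not how their app actually works: `stream_with_post_filter`, `toy_filter`, and the `RETRACTED` marker are all made-up names, and the keyword check just stands in for whatever secondary model might be doing the judging.

```python
from typing import Callable, Iterable, Iterator

# Hypothetical marker shown to the user when the output is withdrawn.
RETRACTED = "[response withdrawn]"


def stream_with_post_filter(
    tokens: Iterable[str],
    is_allowed: Callable[[str], bool],
) -> Iterator[str]:
    """Yield tokens as they arrive, but stop and emit RETRACTED as soon as
    the separate checker rejects the text accumulated so far."""
    shown = ""
    for token in tokens:
        shown += token
        if not is_allowed(shown):
            # The main model kept generating; the checker cuts it off.
            yield RETRACTED
            return
        yield token


def toy_filter(text: str) -> bool:
    """Stand-in for a secondary moderation model (assumption: a plain
    keyword check, purely for illustration)."""
    return "forbidden" not in text.lower()


if __name__ == "__main__":
    for piece in stream_with_post_filter(
        ["This ", "is ", "forbidden ", "text"], toy_filter
    ):
        print(piece, end="", flush=True)
    print()
```

The point is that the filter sits outside the model, so running the weights yourself without that wrapper would skip this step entirely.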

It can't, so long as your local instance isn't connecting to the Internet or you've reviewed their entire codebase for data leakage.

Ofc it can't, you're right.

yup. #Nostr

This feels a lot like (China + AI) = Money Printer