Thank you for working on this, and specifically for making sure it's optimized. I'm using social-social-graph in noStrudel to build and cache the user's social graph.

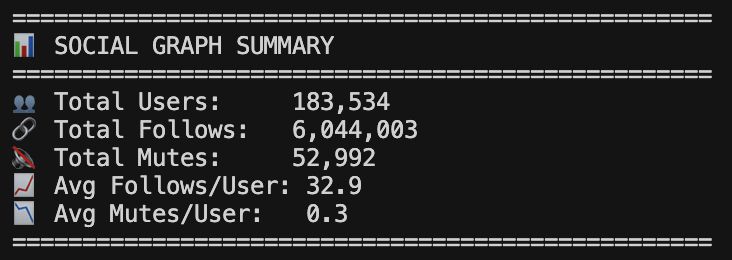

I was just looking into the code this morning to see whether the deserialize/recalculate methods could be made faster so they don't freeze the app. Right now the app freezes for ~4s when loading a graph of 160k users.
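One general way to keep a long recalculation from freezing the UI is to split the traversal into slices and yield to the event loop between them. Below is a minimal sketch of that idea, assuming the follow graph can be viewed as a plain adjacency map. `FollowGraph`, `computeFollowDistances`, `yieldToEventLoop` and `chunkSize` are hypothetical names for illustration only, not social-social-graph's actual API. The sketch is a breadth-first search for follow distances that awaits a `setTimeout(0)` yield every `chunkSize` users.

```typescript
// Hypothetical adjacency list: pubkey -> set of followed pubkeys.
// This is an illustrative shape, not the library's internal representation.
type FollowGraph = Map<string, Set<string>>;

// Resolve on the next macrotask so the browser can render and handle input.
const yieldToEventLoop = (): Promise<void> =>
  new Promise((resolve) => setTimeout(resolve, 0));

/**
 * Breadth-first search from `root` that returns each reachable user's follow
 * distance. After every `chunkSize` dequeued users the loop awaits a yield,
 * so a large graph is processed in many short slices instead of one long task.
 */
async function computeFollowDistances(
  graph: FollowGraph,
  root: string,
  chunkSize = 5000,
): Promise<Map<string, number>> {
  const distances = new Map<string, number>([[root, 0]]);
  const queue: string[] = [root];
  // Index-based dequeue avoids the O(n) cost of Array.prototype.shift().
  let head = 0;

  while (head < queue.length) {
    const user = queue[head++];
    const distance = distances.get(user)!;
    for (const followed of graph.get(user) ?? []) {
      // The first visit in BFS order is the shortest distance.
      if (!distances.has(followed)) {
        distances.set(followed, distance + 1);
        queue.push(followed);
      }
    }
    if (head % chunkSize === 0) {
      await yieldToEventLoop();
    }
  }
  return distances;
}

// Usage: alice follows bob and carol, bob follows dave.
const exampleGraph: FollowGraph = new Map([
  ["alice", new Set(["bob", "carol"])],
  ["bob", new Set(["dave"])],
]);
computeFollowDistances(exampleGraph, "alice").then((d) => {
  console.log(d.get("dave")); // 2
});
```

Chunking does not reduce the total amount of work, and the extra yields add a small amount of overhead. It only spreads the work out so the app stays responsive. Making deserialization itself cheaper, or moving the work to a Web Worker, would be separate options.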