OK, so if RSS and nostr have some similarities, the question becomes: why can't we just host all the feeds as nostr notes?

You could, but what are the unintended consequences, and at what point does nostr end up using the solutions that podcasting has already figured out?

For example, there are over 4 million podcasts, 400,000 of them active.

Search and discoverability are a big deal. How does nostr solve that without some sort of searchable index of all the podcasts that are spread out among all the relays?

An index of 4 million podcasts costs about $900 a month, so if it's going to be decentralized, each index is spending $900 per month.

The question for me isn't so much how nostr can replace RSS, but how RSS can adopt the ideas of nostr to be more decentralized and robust than it already is. I think it's easier to improve something with 20 years of precedent, reliability, and infrastructure than it is to try to replace it with something else that will bring its own unique set of problems.

Remember, there are no solutions, only tradeoffs.

I'm not fully versed, but as I understand it, there are two components to search: the index list and the actual file hosting?

Can we simply start by indexing on nostr? It's just a glorified text file no? That will immediately decentralize that portion of things. A single index provider can spin up an npub and just publish the latest list anytime there's a change. This can be further decentralized with multiple indexes doing the same thing.

A single master indexer could write to multiple PC2.0 specific relays for redundancy. You could have several indexes doing the same thing if you wanted to be more immutable. Similar to how bitcoin's ledger is replicated across all the nodes.

End user facing apps simply need to connect to these relays to access the feeds?

I'm saying all of this with little technical knowledge of what's truly going on in the background, so I could be entirely full of shit. But this seems to be a simple starting point?

An index is just a database that has the links to the RSS files. It has other stuff like podcast title, author, etc. to make searching for a particular podcast easier, but it really is just a searchable list that points to all of the RSS feeds that are spread out amongst thousands of servers.

So yes, you could start by indexing all of the notes that are feeds on nostr, with the title, author, etc. to make searching for a particular note easier. The issue comes when that single index is indexing 4 million notes and costs $900 per month. It can be further decentralized with multiple indexes doing the same thing, each at $900 per month. But at this point, it sure looks just like a traditional podcast index, just pointing to notes instead of RSS feeds.
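
To make "just a searchable list" concrete, here is a minimal Python sketch of such an index. The titles, authors, and URLs are invented for illustration, and a real index would be a database rather than an in-memory list.

```python
# Toy index: a little metadata plus a pointer to where each feed actually lives.
# Every entry below is made up for illustration.
INDEX = [
    {"title": "Pinto Talk", "author": "Alice", "feed_url": "https://example.com/pinto/feed.xml"},
    {"title": "Metal Hour", "author": "Bob", "feed_url": "https://example.org/metal/rss"},
    {"title": "Ford Pinto Garage", "author": "Carol", "feed_url": "https://example.net/garage.xml"},
]

def search(index, term):
    """Return feed URLs whose title or author contains the term (case-insensitive)."""
    term = term.lower()
    return [
        entry["feed_url"]
        for entry in index
        if term in entry["title"].lower() or term in entry["author"].lower()
    ]

print(search(INDEX, "pinto"))
```

Swap `feed_url` for a note id and the structure is unchanged: the index still only points at content that lives somewhere else.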

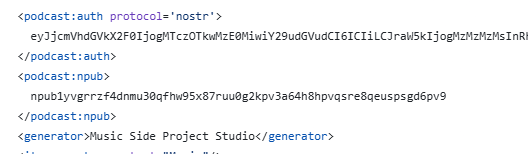

OK so... an RSS feed is basically a big tagged text file. Nostr notes can certainly do that. So rather than storing individual feeds on a centralized server, they can be distributed by nostr relays. Specific PC2.0 relays can be created to house this data and multiple relays provide redundancy. You just have to make sure you're publishing to these pc2 specific relays.

For example, if I sign up for rssblue, I hook up my npub or spin up a new one. That's my artist account. All input fields remain the same. When I publish, it pushes a nostr note of the RSS feed I just created to the pc2 relays.
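
A rough Python sketch of what "push a nostr note of the RSS feed" could look like. The event layout and id calculation follow NIP-01; the kind number, the `title` tag, and the function name are assumptions made up for this example, since no kind has been agreed for podcast feeds. Signing is left out.

```python
import hashlib
import json
import time

# Placeholder kind, NOT an assigned one. It sits in the 30000-39999 range, where
# a newer event replaces an older one with the same pubkey, kind, and "d" tag.
FEED_KIND = 39999

def build_feed_event(pubkey_hex, feed_guid, title, feed_xml, created_at=None):
    """Build an unsigned nostr event that carries an RSS feed as its content."""
    event = {
        "pubkey": pubkey_hex,
        "created_at": int(time.time()) if created_at is None else created_at,
        "kind": FEED_KIND,
        "tags": [["d", feed_guid], ["title", title]],  # "title" tag is illustrative
        "content": feed_xml,
    }
    # NIP-01: id = sha256 of the compact JSON array
    # [0, pubkey, created_at, kind, tags, content].
    serialized = json.dumps(
        [0, event["pubkey"], event["created_at"], event["kind"], event["tags"], event["content"]],
        separators=(",", ":"),
        ensure_ascii=False,
    )
    event["id"] = hashlib.sha256(serialized.encode("utf-8")).hexdigest()
    return event  # a relay would still require a valid "sig" before accepting it
```

A hosting service would sign this with the artist's key and send it to each relay as `["EVENT", event]`. Note that the whole feed travels in `content`, so every relay that stores the note stores the full feed.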

At that point you wouldn't need a single master index since everything lives on the relays. Any application wanting to access that data just needs to connect to the relays.

Am I missing something fundamental here?

Running an entire network of pc2 relays I imagine would be significantly cheaper than $900/mo.

The Podcast Index costs them $900 a month to index and serve all of the podcasts. The amount of data being sent with 4 million feeds is surprisingly expensive. Perhaps sending it across relays is different, but I suspect bandwidth is bandwidth whether it's across a websocket or http.

But in this instance it's not a single provider taking on all the cost. With a sufficient number of reliable relays in the network, each relay only has to house a portion of the data.

The total cost can be distributed across that network, lowering costs for each individual entity involved. Apps would only need to pull the requested data ad hoc.
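
To put rough numbers on the two positions, here is a back-of-the-envelope sketch in Python. The $900 figure comes from this thread; the idea that cost scales linearly with the share of data a relay holds is purely an assumption, and real hosting and bandwidth pricing may not behave that way.

```python
MONTHLY_COST = 900  # USD per month for one full index (figure quoted in this thread)

def full_copies(n_indexes):
    """Total monthly cost if n providers each keep a complete copy."""
    return n_indexes * MONTHLY_COST

def sharded(n_relays, copies=1):
    """Per-relay monthly cost if the data is split evenly across n relays,
    with each feed stored `copies` times.
    ASSUMPTION: cost scales linearly with the share of data held."""
    return MONTHLY_COST * copies / n_relays

print(full_copies(5))        # 4500
print(sharded(5))            # 180.0
print(sharded(5, copies=3))  # 540.0
```

Sharding with a single copy removes the redundancy that motivated multiple relays in the first place, so the per-relay saving depends on how many copies of each feed the network keeps.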

I'm not sure how it would work. That's ignorance on my part, not that it's a bad idea; it's a great one if it works. If I'm searching for Ford Pintos, which relay is responsible for storing the podcasts on Ford Pintos, and how do I know which relay to search? Do I just search on every single relay and hope one of them sends me the data I need? If you need a master relay to direct all the other relays, is that master relay now a single point of failure?

Again, not bashing or disagreeing with the idea, just working with it.

I'd imagine you only really need a handful of relays to do all of this. I'm connected to around 20 relays for normal nostr notes. I can't imagine you need that many across the rss ecosystem. How many notes are served across the 20 largest nostr relays? (Idk the answer but finger in the air I'd imagine it's comparable to total # of rss feeds?)

If podcastindex were to sponsor say 5 relays with that 900/mo, they could set it up as "primary" relays each with a different genre. Individuals can self host their own relay and can probably filter feeds they wish to include, almost like playlist curation if they wanted to get granular like that. Any app could connect to any relay. Someone can easily run a simple website posting a running list of all relays if you wanted to.

This would help organization and lower data costs as you could spin up a lightning thrashes app that only connects to metal relays for example.

The downside is you might not have a single, full, accurate picture of the entire ecosystem, but there are very few practical instances where a user needs access to the entire library at once.
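
One way to picture the genre-relay idea is a small directory that maps genres to relay addresses. This is only a sketch in Python: the genres, the relay URLs, and the function name are all hypothetical, and none of these relays exist.

```python
# Hypothetical directory of genre-specific relays. All URLs are placeholders.
RELAY_DIRECTORY = {
    "metal": ["wss://metal-1.example", "wss://metal-2.example"],
    "cars": ["wss://cars-1.example", "wss://metal-2.example"],
}

def relays_for(genres, directory=RELAY_DIRECTORY):
    """Return the relays an app should connect to for the given genres,
    in first-seen order and without repeats."""
    selected = []
    for genre in genres:
        for url in directory.get(genre, []):
            if url not in selected:
                selected.append(url)
    return selected

print(relays_for(["metal"]))
```

The directory itself is tiny compared with a full index, but whoever publishes it decides which relays apps can find, so it carries some of the same trust and availability questions as a master relay.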

Yeah, feeds aren't stored on a centralized server; they're stored on thousands of servers all over the world. You could have a podcast feed on a Raspberry Pi in your closet. The index doesn't store the feed, it points to the location of the feed. It's like a phone book for feeds. It lets you look up where to find the actual feed on the internet.

So, even if your info was on a relay instead of a server (relays and servers are very similar, both transmit and store data, just with a different delivery mechanism), you still have the problem of how anyone finds your info. If you're doing a podcast on Ford Pintos, and they don't know you, how do they find you? That's what an index is for.

OK i see. So this index is just a running list of available feeds and their locations?

Rather than serving the full list, can't that be pushed to apps ad hoc? So if I'm on a pc2 app and I want to look up a Ford Pinto podcast, I query that term. The app then goes to the relays it's connected to, searches for that keyword, and returns results ad hoc. No need to serve the entire list and no need for a single index as long as the app is connected to the relays. Maybe you just need an index of relay addresses, which would cost basically nothing.
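
For what it's worth, nostr already has an optional way to express a keyword query: NIP-50 adds a `search` field to the filter in a NIP-01 `REQ` message. A minimal Python sketch of building that message follows. The kind number is a made-up placeholder for "podcast feed" notes, and only relays that implement NIP-50 will act on `search`.

```python
import json

FEED_KIND = 39999  # hypothetical kind for podcast-feed notes, not an assigned one

def search_request(sub_id, term, kind=FEED_KIND, limit=20):
    """Build a NIP-01 REQ message that uses the NIP-50 `search` filter field."""
    filter_ = {"kinds": [kind], "search": term, "limit": limit}
    return json.dumps(["REQ", sub_id, filter_])

print(search_request("q1", "ford pinto"))
```

The app would send this same string to every relay it is connected to, so each relay has to run its own search over whatever notes it holds.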

Yep, that's all an Index is doing.

And yes, it could search relays instead of the index server. It needs to know which relays to search. It's not highly efficient. I search across 100 relays, I may get 100 results back, and I have to parse through all those results, combine them, and remove any duplicates. It's all doable, but it introduces another set of problems.

Duplicates would be easy to remove, as the notes would be digitally signed and can be uniquely identified. Idk how it happens behind the scenes, but I'm sure Amethyst, Damus, and all the other existing client apps do this.

They do, and it's inefficient and wasteful. If you want to see a note, you ask for it from 20 servers. Those 20 servers will send you all the same note. The bandwidth on 19 of them was wasted. Bandwidth adds up to real money. That's just a side effect of how nostr works. Maybe the payoff is worth it, but I think it should at least be understood as one of the tradeoffs, and perhaps there's a solution to it worth exploring.
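
Both points show up in a small Python sketch: de-duplicating by event id is simple, and the counter shows how many copies were fetched only to be thrown away. The relay URLs and events are invented, and a real client would also verify each event's id and signature.

```python
def merge_results(results_by_relay):
    """Merge event lists from several relays, keeping one copy per event id.

    Returns (unique_events, wasted_copies)."""
    unique = {}
    wasted = 0
    for events in results_by_relay.values():
        for event in events:
            if event["id"] in unique:
                wasted += 1  # already have it; this copy's bandwidth was spent for nothing
            else:
                unique[event["id"]] = event
    return list(unique.values()), wasted

# Made-up responses from three relays.
responses = {
    "wss://a.example": [{"id": "1"}, {"id": "2"}],
    "wss://b.example": [{"id": "2"}, {"id": "3"}],
    "wss://c.example": [{"id": "1"}, {"id": "2"}, {"id": "3"}],
}
events, wasted = merge_results(responses)
print(len(events), wasted)  # 3 4
```

Seven events came over the wire to deliver three, and that ratio gets worse as more relays hold the same notes.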

I wonder if the relays can be tiered? So perhaps... client connects to just 3 main relays. Each of those 3 relays basically sort and filter from 10 each. Yes there is still duplication but less in total from a per-relay point of view.

I've been playing around with building a nostr client so I can understand nostr better, and when I'm searching for a particular note, I do a serial search: I search the first relay, and if it returns the note, I stop the search; otherwise I search the next relay. So there are ways around it to one degree or another. The 'fun' part of being an engineer of sorts is seeing problems, thinking of solutions, then seeing the problems those solutions created and seeing which of those you can 'fix'.
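
A minimal Python sketch of that serial search, with plain dictionaries standing in for real relay connections. The relay URLs, the note, and the `query` callback are all made up for illustration; this is not the actual client code.

```python
def serial_search(event_id, relays, query):
    """Ask relays one at a time and stop at the first one that has the event.

    `query(relay_url, event_id)` is supplied by the caller and returns the
    event dict or None. Returns (event, relays_asked)."""
    asked = 0
    for relay_url in relays:
        asked += 1
        event = query(relay_url, event_id)
        if event is not None:
            return event, asked
    return None, asked

# Stand-in for websocket calls: each fake relay is a dict of id -> event.
FAKE_RELAYS = {
    "wss://a.example": {},
    "wss://b.example": {"abc": {"id": "abc", "content": "hello"}},
    "wss://c.example": {"abc": {"id": "abc", "content": "hello"}},
}

def fake_query(relay_url, event_id):
    return FAKE_RELAYS[relay_url].get(event_id)

event, asked = serial_search("abc", list(FAKE_RELAYS), fake_query)
print(asked)  # 2: the third relay is never contacted
```

The trade-off is latency: the duplicates go away, but in the worst case the client waits on every relay in turn before it finds the note or gives up.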

I've also thought about that, a smart relay that connects to other relays and dedupes the data before sending it to the client. At that point though, it starts to move away from dumb relays, so may be moving away from one of the core ideas of nostr.

The way it's sort of framed in my head is this....

Back in the brick-and-mortar days you had individual record stores. Some were general music, others were specialized. I had to jump in my car and visit these stores. I certainly couldn't find the entire library of all music in a single store, but if I visited every record store in the country, I probably could get close.

The record stores are the relays hosting feeds. My car is whatever client app I'm using, connecting me to various stores/relays.

It's not the most efficient, nor does it provide a whole picture. But as a user, I can only listen to one song at a time. I don't want access to everything all at once. I want to find specific songs or shows on demand, or else sort and shuffle by genre. I think this enables it without providing more than what's realistically necessary from an end-user standpoint.
