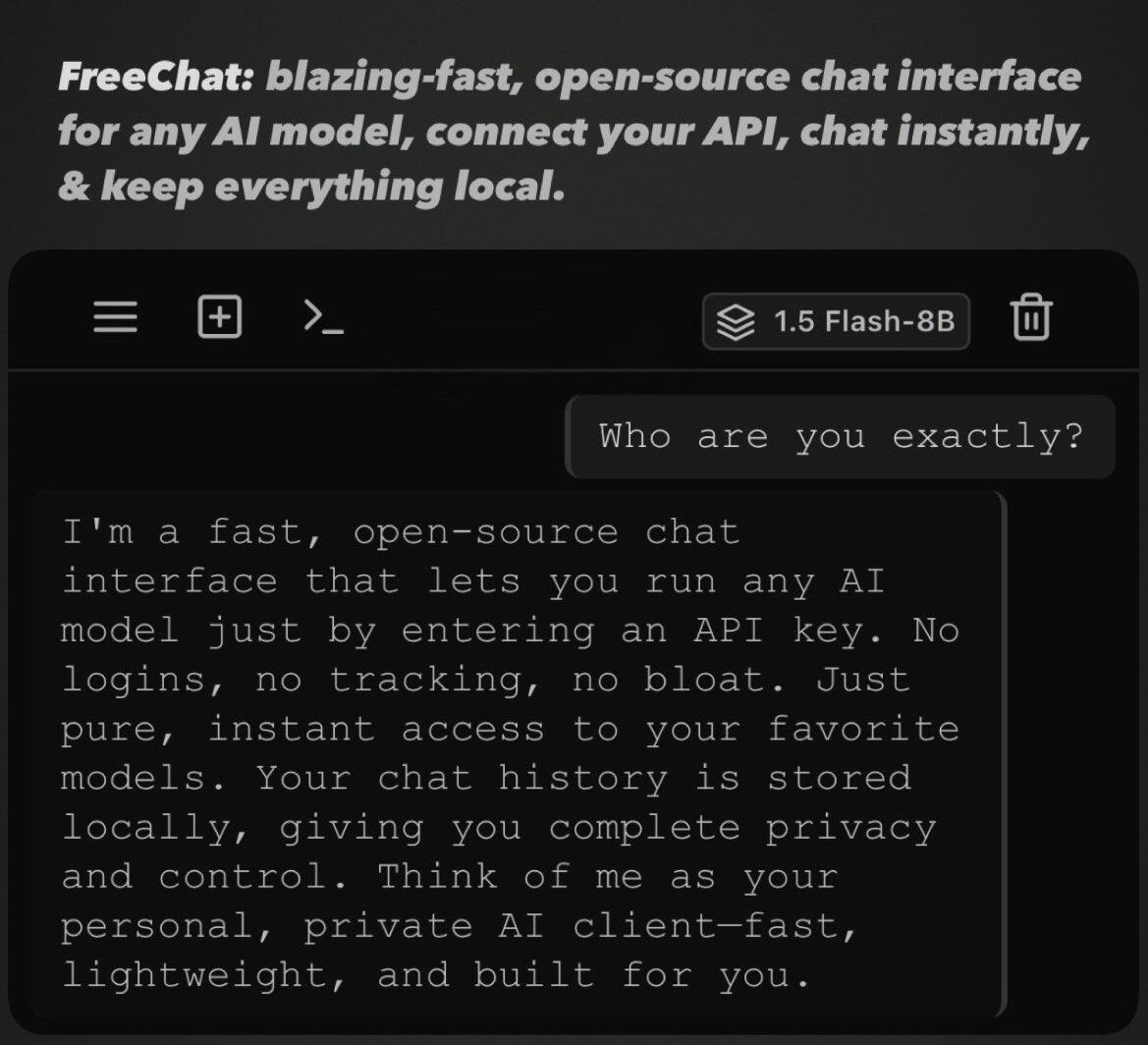

side-project brewing

Discussion

Sweeeeeet

Yeah this looks neat. Where do you get the APIs?

most companies building models have their own APIs, and you can also deploy your own model and connect it to this chat interface. it’s designed to work with any API, even local ones.
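
a rough sketch of how "any API, even local ones" could look in practice. this is not the project's actual code: it assumes the backend accepts an OpenAI-style `/chat/completions` request, and `BackendConfig`, `buildChatRequest`, and the example URLs are hypothetical names made up for illustration.

```typescript
// Hypothetical shapes for illustration; not taken from the project itself.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface BackendConfig {
  baseUrl: string; // a hosted provider or a local server, e.g. http://localhost:8080/v1
  model: string;
  apiKey?: string; // assumed optional, since a local server may not need one
}

interface PreparedRequest {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

// Build the HTTP request without sending it, so the same code path
// serves a hosted API and a local one; only the config changes.
function buildChatRequest(cfg: BackendConfig, messages: ChatMessage[]): PreparedRequest {
  const headers: Record<string, string> = { "Content-Type": "application/json" };
  if (cfg.apiKey) {
    headers["Authorization"] = `Bearer ${cfg.apiKey}`;
  }
  return {
    // Strip trailing slashes so "…/v1" and "…/v1/" produce the same URL.
    url: `${cfg.baseUrl.replace(/\/+$/, "")}/chat/completions`,
    init: {
      method: "POST",
      headers,
      body: JSON.stringify({ model: cfg.model, messages }),
    },
  };
}
```

the result can be handed to `fetch(req.url, req.init)`. switching between a hosted model and a local one then comes down to changing `baseUrl` and `model`, as long as both ends follow the assumed request format.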

the most interesting part is i’ll be using WebLLM, which lets you run open-source models right in the browser. you download a model once, it’s stored in the browser cache, and it runs on your own hardware, on any device whose browser supports WebGPU.

Oh wow. That's great.