Many people will have seen nostr:npub1xtscya34g58tk0z605fvr788k263gsu6cy9x0mhnm87echrgufzsevkk5s 's note about Notedeck supporting multicast relays. As a network engineer, I found this interesting, but I'm sure there were people wondering what multicast is or how it works, so I thought I'd have a go at writing an educational note to explain the operation of multicast as simply as possible. It is a complex topic, so I’ve limited it to a very simple network and IPv4 only.

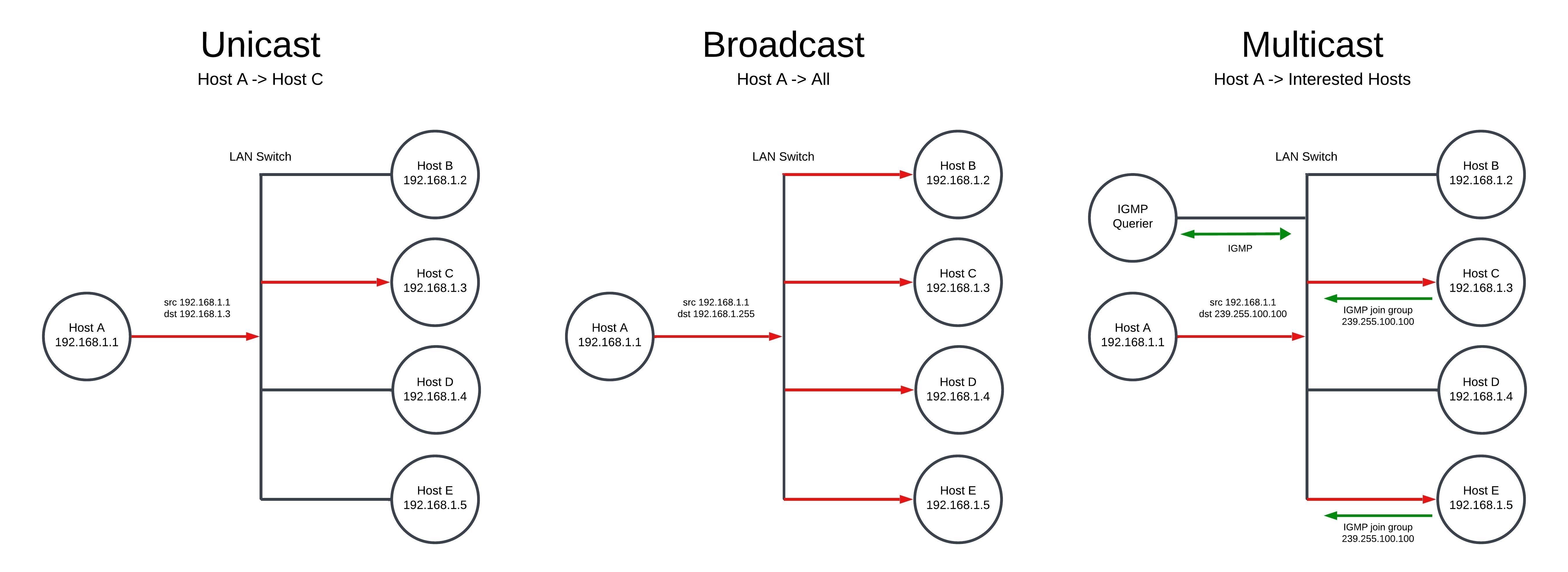

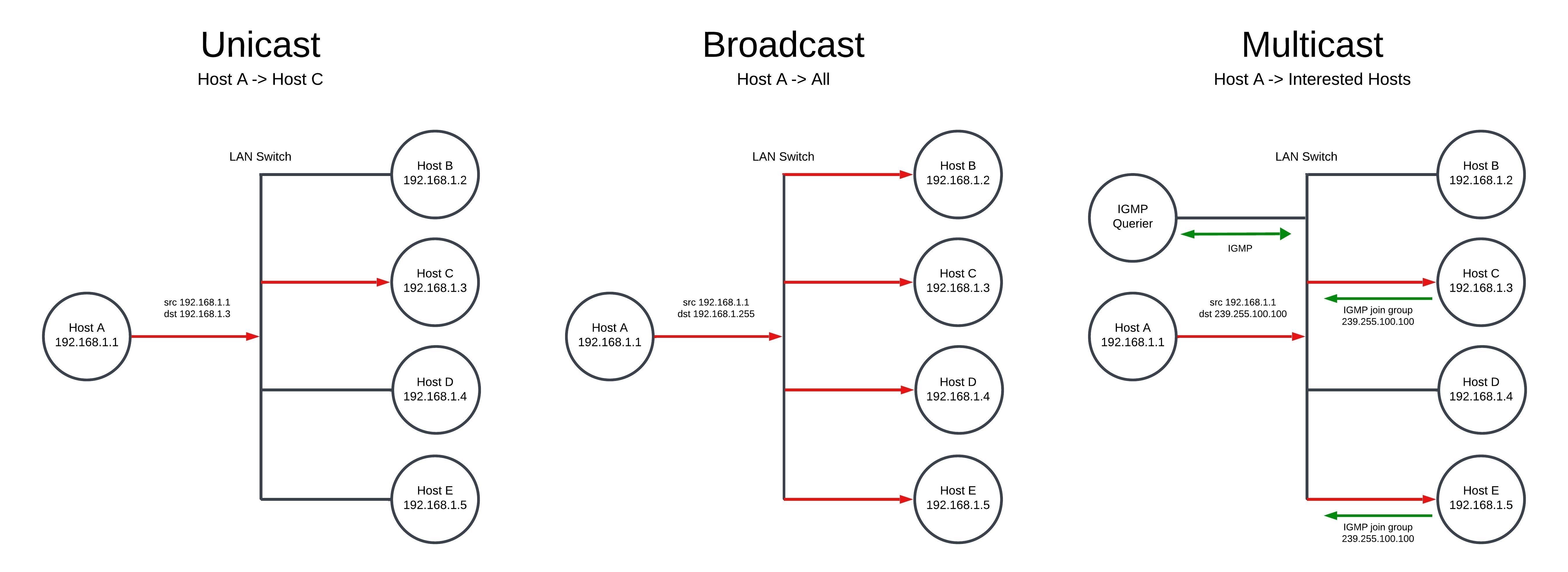

I've created a diagram that shows the three types of traffic found on a network - unicast, broadcast and multicast. Most connections are unicast with one host sending packets directly addressed to another host that receives them. This is how most communication occurs across the Internet.

Broadcast packets are sent to a special broadcast address (the last IP address in the subnet, in this case 192.168.1.255) and are flooded to every host within the LAN. Broadcast traffic is used for things like device discovery and for DHCP when a device is first obtaining an IP address. Broadcast traffic is "noisy" because the packets are sent to every device, even if the device does not need the traffic.
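As a quick check of the "last address in the subnet" rule, Python's standard `ipaddress` module can work out the broadcast address. This is only a small sketch, and it assumes the LAN in the diagram is 192.168.1.0/24; the /24 prefix length is an assumption, as only the broadcast address itself is stated above.

```python
import ipaddress

# Assumed subnet for the diagram's LAN (the /24 prefix length is an assumption)
lan = ipaddress.ip_network("192.168.1.0/24")

# The broadcast address is the last address in the subnet
broadcast = lan.broadcast_address
print(broadcast)  # 192.168.1.255
```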

Multicast is a way for one device to send packets to many other "interested" devices at the same time. The advantage of this is that the packets only need to be transmitted by the sender once, and the switch then forwards those packets only to devices that want to see the traffic. Common uses for multicast are CCTV systems, IPTV, and IP telephony music on hold systems. It is also used by Apple and other devices for mDNS device and service discovery.

Multicast traffic is identified by a multicast group IP address in the range 224.0.0.0-239.255.255.255 (239.255.100.100 in my diagram). Each host that wants to receive the multicast traffic will listen for packets on this address. Internet Group Management Protocol (IGMP) is used to co-ordinate group membership. To join a multicast group, the host sends a request to an "IGMP querier", which may be a router or a switch that has this functionality. The switch listens to the IGMP messages (IGMP snooping) to build up a table of which switch ports have devices that want to see traffic for a multicast group address, and the multicast packets are then sent out of only those ports.
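To make the snooping table idea more concrete, here is a toy Python model of it. It is a sketch only, not a real IGMP implementation: the `SnoopingSwitch` class, its method names and the port numbers are all made up for illustration, and it ignores details such as queries and membership timeouts. A group with no members gets no egress ports in this model.

```python
import ipaddress
from collections import defaultdict


class SnoopingSwitch:
    """Toy model of a switch doing IGMP snooping (hypothetical, for illustration)."""

    def __init__(self, ports):
        self.ports = set(ports)
        # multicast group address -> set of ports with interested hosts
        self.group_ports = defaultdict(set)

    def igmp_join(self, port, group):
        """Record that a host on `port` asked to join `group`."""
        if not ipaddress.ip_address(group).is_multicast:
            raise ValueError(f"{group} is not a multicast address")
        self.group_ports[group].add(port)

    def igmp_leave(self, port, group):
        """Record that the host on `port` no longer wants `group`."""
        self.group_ports[group].discard(port)

    def egress_ports(self, ingress_port, group):
        """Ports a packet for `group` is sent out of, excluding where it arrived."""
        return sorted(self.group_ports.get(group, set()) - {ingress_port})


# Hypothetical 4-port switch: the sender is on port 1, listeners on ports 2 and 4
switch = SnoopingSwitch(ports=[1, 2, 3, 4])
switch.igmp_join(2, "239.255.100.100")
switch.igmp_join(4, "239.255.100.100")
print(switch.egress_ports(1, "239.255.100.100"))  # [2, 4]
```

Port 3 never joined the group, so it sees none of the traffic. That is the difference from broadcast, where every port would receive it.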

There are some considerations when using multicast. Many cheap, unmanaged switches don't support IGMP snooping, so they treat multicast traffic as broadcast. Packets still reach the destination, but the benefit is lost as they are flooded out of all ports. There can also be issues with multicast on some WiFi networks. Finally, because multicast is 1-to-many and a TCP connection is always between exactly two endpoints, it needs to use UDP rather than TCP. This is relevant for the Nostr use case as it requires a different protocol from the WebSockets connections that are used between clients and relays.
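For anyone curious what this looks like from a host's point of view, below is a minimal sketch of a UDP multicast receiver and sender using Python's standard `socket` module. It is not how Notedeck implements multicast relays; the port number 5007 and the function names are hypothetical, and the group address is simply the one from my diagram. Joining the group with `IP_ADD_MEMBERSHIP` is what prompts the operating system to send the IGMP join on the host's behalf.

```python
import socket
import struct

GROUP = "239.255.100.100"  # group address from the diagram
PORT = 5007                # hypothetical port, chosen for illustration


def build_membership_request(group, interface="0.0.0.0"):
    """Pack the 8-byte membership request: group address + local interface."""
    return socket.inet_aton(group) + socket.inet_aton(interface)


def open_receiver(group=GROUP, port=PORT):
    """Open a UDP socket and join the group (the OS sends the IGMP join)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP,
                    build_membership_request(group))
    return sock


def send_once(message, group=GROUP, port=PORT, ttl=1):
    """Send one UDP datagram to the group; a TTL of 1 keeps it on the local LAN."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_TTL,
                    struct.pack("b", ttl))
    try:
        sock.sendto(message, (group, port))
    finally:
        sock.close()
```

To try it, call `open_receiver()` and then `recvfrom(1024)` on the returned socket on one or more hosts, and call `send_once(b"hello")` on another. The sender transmits a single datagram regardless of how many receivers have joined.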