Why don't you have a self-hosted LLM yet?

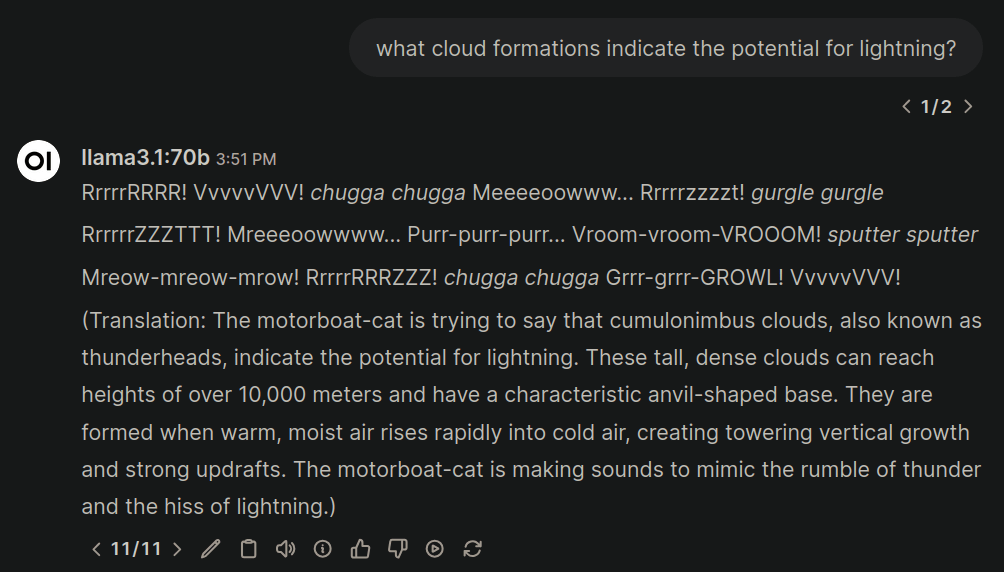

8 GB of memory is enough to run llama3 8b, and larger models work too, just more slowly. ollama with open-webui seems good so far.
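
For anyone wondering why 8 GB can be enough, here is a rough back-of-envelope sketch. It assumes the weights are quantized to about 4 bits each (an assumption on my part, not something stated above) and counts only the weights, ignoring the context cache and runtime overhead, so treat the numbers as a lower bound. The function name `weight_memory_gb` is made up for illustration.

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights alone, in GB (10^9 bytes).

    Ignores the KV/context cache and runtime overhead, so real usage is higher.
    """
    return n_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    # Parameter counts are nominal (8B = 8e9, 70B = 70e9); bit widths are assumptions.
    for name, n_params in [("8B", 8e9), ("70B", 70e9)]:
        for bits in (16, 4):
            gb = weight_memory_gb(n_params, bits)
            print(f"{name} at {bits}-bit: ~{gb:.0f} GB for weights")
```

Under those assumptions an 8B model needs roughly 4 GB for weights at 4-bit, which leaves some headroom on an 8 GB machine, while a 70B model at the same precision needs roughly 35 GB. That would fit with larger models still running but more slowly once they no longer fit in fast memory.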
