Hi!

#introductions

I just discovered nostr:npub1qqvf96d53dps6l3hcfc9rlmm7s2vh3f20ay0g5wc2aqfeeurnh0q580c3j on nostr and he's totally under-followed. How did that happen? How did I miss he was around?

I followed him now but what have I been missing out on? I don’t know this person (but I’d like to!)

Playing with Mojo. It’s got traits! Maybe DCI works well with it then.

But productionising it and making it run at scale is a whole other topic, which I’m not too familiar with yet.

It kind of is a bit of both. Finding a large model isn’t too hard. I mean Gemini 1.5 Pro eats movie scripts and finds the right answer in there or in huge codebases.

I implemented a RAG LLM app that runs locally on my machine with a local model and it scans my source code directories and gives me answers about them. And it was less than 100 lines of Python.

Presently: https://youtu.be/zjkBMFhNj_g

My hero?

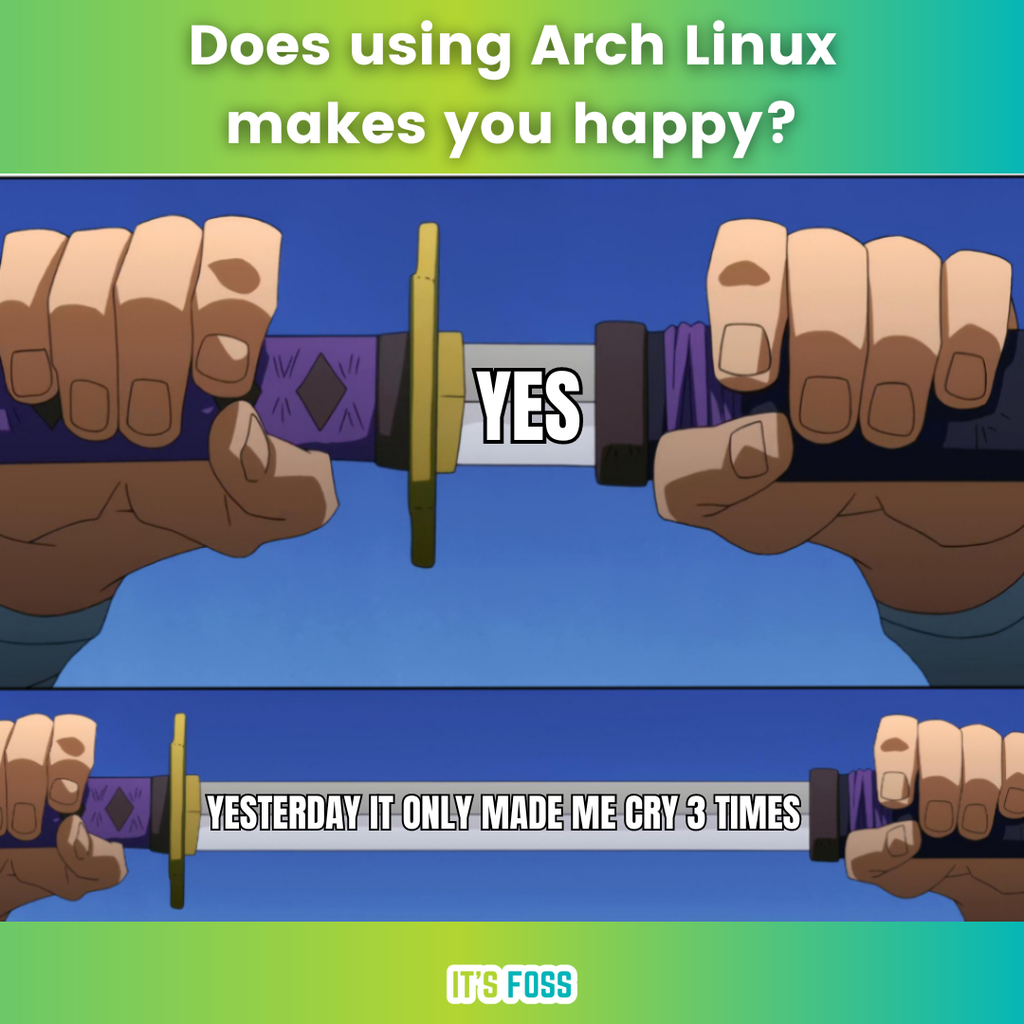

I’d say just pick a popular one if you want to start being productive quickly. If you want to learn the fundamentals, set up Arch Linux. But be prepared to spend time.

Don’t use Google search

https://x.com/elonmusk/status/1760933354373845419?s=46&t=-Ks4q52A7vJzQnCci96yHw

Don’t forget Elon is big tech too

As a European, I don’t think I’d be opposed to the US leaving NATO. We’ve lazily relied on our big brother for far too long.

I bet Matt enjoys pictures of a white Jesus though

“Water is three things. Necessary. Ubiquitous. And the same. fucking. thing. inside every bottle.

AI is like water.”

Either use an LLM with a big enough context window to fit the whole document, or use RAG to find the related parts of the guidebook and pass that context to the LLM for each question.
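A rough sketch of the RAG option, in case it helps. Everything here is an assumption for illustration: the function names are made up, the guidebook is assumed to be already split into text chunks, and plain word-count cosine similarity stands in for a real embedding model. The resulting prompt would then go to whatever LLM you use.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, chunks, k=2):
    """Return the k chunks most similar to the question (hypothetical helper)."""
    q_vec = Counter(tokenize(question))
    ranked = sorted(
        chunks,
        key=lambda chunk: cosine(q_vec, Counter(tokenize(chunk))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, chunks, k=2):
    """Put the retrieved chunks in front of the question as context for the LLM."""
    context = "\n\n".join(retrieve(question, chunks, k))
    return (
        "Answer using only this context:\n\n"
        f"{context}\n\n"
        f"Question: {question}"
    )
```

You would call `build_prompt(question, guidebook_chunks)` once per question and send the result to the model. Swapping the word-count similarity for real embeddings usually retrieves better, but the flow stays the same.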

Zero focus today so far. Let’s see if a kettlebell workout can fix that.