isn't the point to make it more expensive already? making them go to the miners directly is the point.

and also filters don't affect fee estimation in a meaningful way. the fee is not calculated based on what you have in your mempool, that's not correct. even core uses more advanced methods to estimate fees. it's just an argument.

and also everyone's mempool is not supposed to be the same anyway.

filters are changes nodes can make without causing a network fork. we need more options for filters, and we should allow people to write their own filtering scripts and rules.

and when miners don't run similar filters to the rest of the network, their blocks take longer to propagate, and if another miner finds a competing block in that time, their block can end up ignored. which makes going against the network cost even more.

decentralization doesn't mean everyone is a copy of each other. it's normal for something decentralized to be messy, slow, clunky and not butter smooth. that's the point. pure chaos of disagreement, but of course within the limits of consensus.

and some of them talked about changing the consensus for this.

but changing consensus for something dynamic wouldn't make sense. consensus is the untouched law, the constitution, and filters are the community.

the filters that nodes run collectively decide what is more expensive or harder to do on this network.

which keeps unwanted stuff niche and stops it from going mainstream, that's the point. not a great analogy but it's like the "unwritten rules".

funny thing is, they proposed this exact PR 2 years ago. it got rejected because people said we can fix the new taproot exploit by updating and adding filters.

BUT the funny part is that the PR for the filters also got rejected because "it's controversial".

but suddenly this new PR is not?

they knew this would happen. they rejected every filtering solution so that 2 years later they could propose it again, when the issue has gotten bigger. they are giving solutions to issues they caused, because their agenda from the beginning was allowing more data. they planned this. they say it on their other PR, i think it was something like "we can try again later when utxo set is bigger".

sometimes i talk aggressively

this is the attack. a 3-4 year long attack

people answered each one of your points many times in videos, in debates, in comments. and you just keep ignoring them and saying "there is only silence". you are either extremely naive or have another agenda that makes you keep lying. everything you said is either upside down or makes no sense.

you are just doing politician talk, not addressing any of the points people made. even here you addressed none of them.

you lied many times, and ignored the points the other side makes for so long that it's clear you have another agenda. it's clear bitcoin core devs wanna develop bitcoin, not preserve it.

none of the points you made in this post make sense, because you are only offering solutions to issues you created in the first place. this was obviously planned years ago. you let the issues happen so you could give a "solution", ignoring all the other, more bitcoin-like solutions.

honestly i've talked about this so many times that i'm not gonna talk about it again. go read mechanic's reply, watch his videos, or read my posts.

people should run nodes, and node runners just need to be aware of what they filter.

core is the one that likes to take away options from its users

no shit, why are you replying this to me?

that's for the blockchain, not the client level

the name of the config file option is `datacarriersize`.

but i think knots defaults are just fine.

personally i didnt touch it.

i was thinking about having a podman and docker compatible image on the repo, distributed via ghcr.io, that runs the node directly over tor without any configuration.

so you can just set it up easily with podman, docker, distrobox, toolbox, etc.

distrobox can even export the qt launcher to the host. making it run like a normal app on the host.

things like BoxBuddy make the UX great.

or

it can be a flatpak with tor built-in.

less friction, more nodes.

linear scale is made up.

nature and the universe work with ratios and logarithmic scales.

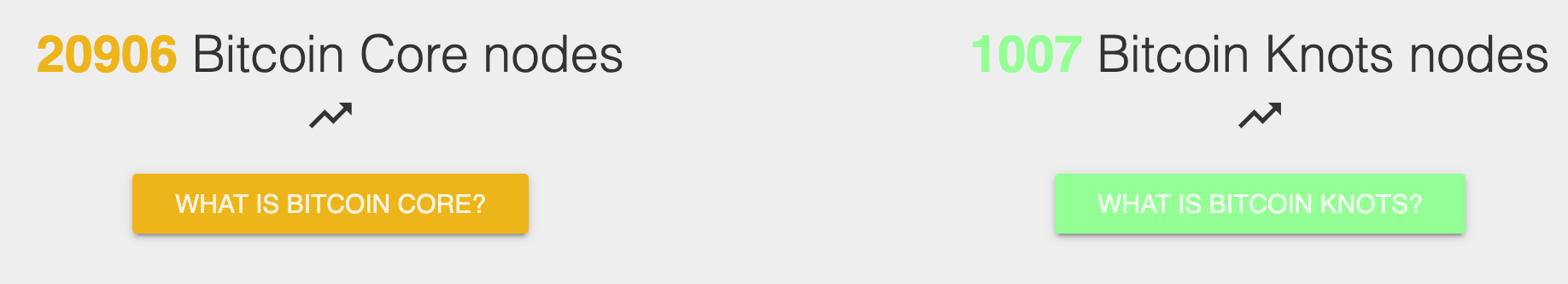

knots is a core fork.

they both work by just double clicking on it.

but you need either tor or port forwarding in order to become a really useful node.

using just tor is easier.

it's really simple, not 3 clicks, but shouldn't take more than 3 minutes.

- install tor.

- uncomment two settings in its config file.

- restart tor

- install knots gui app, and run it.

- in options, check the tor setting.
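
a rough sketch of what the config side of those steps might look like. this is an assumption, not a confirmed recipe: the post doesn't say which two tor settings it means, so the two lines below are a guess based on the commented-out defaults in tor's sample config. the file path and port numbers are also assumptions and can differ per system.

```
# torrc (often /etc/tor/torrc on linux; path is an assumption)
# assumed to be the "two settings" to uncomment:
ControlPort 9051
CookieAuthentication 1

# optional bitcoin.conf equivalent of the tor checkbox in the gui options
# (assumes tor's default SOCKS port 9050 and the control port above):
proxy=127.0.0.1:9050
listen=1
torcontrol=127.0.0.1:9051
```

on some distros the user running the node may also need read access to tor's auth cookie, so check that if the onion service doesn't come up.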

they're brainwashed to be cattle to be milked

im not gonna radiation bath my balls but yeah in general. maybe 5 mins a day.

As long as we have something useful, I don't really care what it is or how it works. I'm used to JS.

ok im calm now

ok im gone, im not gonna "confuse" the discussion.

make it go anywhere you want.

do what ever you want, bye

idc do what ever you want, talk about what ever you want.

bring another SYSTEM, another quirk, to the DOM.

useless `MutationObserver` is not enough.

lets engineer a whole new system for every new feature we want.

systems that don't connect to each other well, instead of having simple primitives.

more technical debt, YAY.

my object has a function called `then()`, so let's just pretend it's a `Promise`. let's never check the prototype chain, and let's only keep something as weird as `Array.isArray()`, like anyone is doing cross-window JS. deprecate it already, but no. the most useless thing, which could have a workaround instead, is still there. but we remove the DOM mutation events, because pErForMance, and put nothing usable in their place. like it's not the job of the app dev to decide the performance of their app. dumbest excuse to remove something useful.

let's create basic ideas first, but then talk in the issues for a thousand years to make them as complex and as incompatible as possible with the existing features. Signals died the same way. start with cool, nice ideas. end up with something useless.

it's almost like we wanna get deeper into framework abstractions just to make things useful.
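
to make the `then()` complaint concrete, here is a minimal sketch of the difference between a duck-typed "thenable" check and a prototype-chain check. the names `fakePromise`, `isThenable` and `isRealPromise` are made up for illustration. note that `instanceof` is the check that breaks across windows/realms, which is the usual reason given for the duck typing.

```typescript
// A "thenable": any object with a then() method. It is not a Promise.
const fakePromise = {
  then(onFulfilled: (value: number) => void): void {
    onFulfilled(42);
  },
};

// Duck-typed check: only asks "is there a callable then?".
function isThenable(value: unknown): boolean {
  return (
    (typeof value === "object" || typeof value === "function") &&
    value !== null &&
    typeof (value as { then?: unknown }).then === "function"
  );
}

// Prototype-chain check: true only for Promise instances from this realm.
function isRealPromise(value: unknown): boolean {
  return value instanceof Promise;
}
```

`isThenable(fakePromise)` passes while `isRealPromise(fakePromise)` does not, and `await fakePromise` would still call its `then()`.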

what a joke.

but the web is still the best platform to use. secure, just go to an address and use it, powerful, you can even game on it, works everywhere, etc. css is also amazing, better than js tbh, especially recently.

buy btc not "insurance"

How many chairs are you sitting on right now?

https://video.nostr.build/1dc64f7cbb1efc0079c13dd9b041b6d0e489572da880258a33d634d2e696a73a.mp4

sell your chair, buy btc

people complain about the state of the web development ecosystem. but they should instead complain about the state of mathematics. it has way more abstractions, and it eats the brains of mathematicians.

worst language community ever, with their archaic, confusing syntax.

it's worse than rust's syntax, think about it.

maybe this is a hot take for some reason, but in the context of "1 = 0.9999...":

decimals are not numbers, they are syntactic conveniences or representations, they are syntax.

they represent operation chains, sometimes recursive ones, and change meaning based on the context they are in.

but instead of doing those recursive computations we have shortcuts we use while dealing with decimals. those shortcuts make us treat them like numbers. but that doesn't make them numbers.

similar to how you can give an operator a different meaning in programming languages, for example 10 + "10" = "1010". when you combine a number with a decimal in an operation, that "+" operator doesn't really mean "add" anymore.

you can't always use the shortcuts on infinite decimals. the issue is, it looks predictable, just 9s going forever, but when you inline the syntax, it's not just 9s going forever, and sometimes it's not even possible to predict the pattern of the computations that syntax is going to create recursively forever, so then your shortcuts might also fail.

0.5 by itself doesn't mean anything. it gets meaning when you try to "multiply" it, or "add" it, etc. by itself it's an unexpected end of syntax.

the shortcuts work so often that you start to think they are numbers.

---

people will also say 2*(1/2) = 1

but "2*(1/2)" is not equal to 1 because it's not a number, it's syntax for a computation. when you run it you get "1", so when you inline the result, "2*(1/2)=1" means "1=1", not "2*(1/2)=1".

mathematicians get so used to playing around with shortcuts that they think things that are not numbers are in fact numbers, without realizing they don't even handle them like numbers, they treat them differently.

maybe if they used function calls like `add(1, 2)` instead of operators, they would realize that `add(1, 0.5)` works because the `add()` function has an overload that looks like `add(number, decimal) => decimal | number`

it's not the same addition computation.
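
a minimal sketch of that overload idea, under my own assumptions: the `PendingDecimal` type (a whole part plus an unresolved division) is a hypothetical model for illustration, not anything standard.

```typescript
// Hypothetical model: a "decimal" is a whole part plus a division
// that has not been carried out yet.
type PendingDecimal = {
  whole: number;
  pending: { dividend: number; divisor: number };
};

// Two overloads: one for number + number, one for number + decimal.
function add(a: number, b: number): number;
function add(a: number, b: PendingDecimal): PendingDecimal | number;
function add(a: number, b: number | PendingDecimal): number | PendingDecimal {
  if (typeof b === "number") {
    return a + b; // plain addition on two numbers
  }
  // A different computation: add to the whole part and carry the
  // unresolved division along untouched.
  return { whole: a + b.whole, pending: b.pending };
}

// 0.5 written as "0, then 1 / 2".
const half: PendingDecimal = { whole: 0, pending: { dividend: 1, divisor: 2 } };
```

`add(1, 2)` takes the first path and gives `3`, while `add(1, half)` takes the second path and gives back a whole part of `1` with the `1 / 2` still pending.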

being able to "think" something in a human mind is not a good measure to decide what is a basic operation and what is a number. humans have lookup tables, and they invent shortcuts.

shortcuts are not the underlying operations, they are shortcuts.

similarly, just because i can quickly say 1_000_000_000 * 10 = 10_000_000_000 doesnt make multiplication a basic operation. its just a shortcut. pattern recognition. learned base-10 arithmetic rules. a cognitive shortcut that bypasses the actual multiplicative operation.

0.99 as a divisor, what does divide even mean?

1/0.99 = 1.01010101...??? that's also a decimal, at least it's bigger than 1, but it's infinite, because you need more resolution, like with pi.

it's not a number, it's still an operation, a computation, waiting to be used.

it's not a result. it's waiting for input. you generated an operation/code/computation that waits for an input with a big enough resolution, and some of these loop more as the input gets bigger.

as soon as you reach a decimal in a computation, that means you don't have enough resolution in your unit to give a full output. so what remains is the remaining computation, which is represented next to your number with a nice syntax. we just have a different set of rules and systems to handle these remaining computations when we continue computing without resolving them. it's almost like stuff in a closure waiting to be resolved. or it will just stack overflow lol.
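
the closure picture can be sketched in code. this is only an illustration under my own assumptions: `pausedDivision` is a made-up helper, and 1/0.99 is scaled up to 100/99 so both sides are whole numbers. the remainder lives inside the closure, and each call to `nextDigit()` runs one more step of the computation.

```typescript
// Returns the whole part now and keeps the rest of the division
// "paused" inside a closure that yields one more digit per call.
function pausedDivision(
  dividend: number,
  divisor: number
): { whole: number; nextDigit: () => number } {
  let remainder = dividend % divisor;
  const whole = (dividend - remainder) / divisor;
  return {
    whole,
    nextDigit(): number {
      // Raise the resolution by 10 and divide again.
      const scaled = remainder * 10;
      remainder = scaled % divisor;
      return (scaled - remainder) / divisor;
    },
  };
}

// 1 / 0.99 scaled up to whole numbers: 100 / 99.
const paused = pausedDivision(100, 99);
const firstDigits: number[] = [];
for (let i = 0; i < 6; i++) {
  firstDigits.push(paused.nextDigit());
}
```

here `paused.whole` is `1` and the first six digits come out as `0, 1, 0, 1, 0, 1`. the loop never finishes by itself, you just stop asking for digits.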

0.999... = 1 is a shortcut giving a wrong result, not the underlying operation.

ok lets think a little, lets try to do: 1 / 2 = 0.5

0.5 is not a number, it's telling you that your resolution is not enough to show the full result, but it gets added at the end so you can use it to continue your computation. like closures in programming, it's waiting on the stack.

so let's say the 1 there is 1cm. i can convert that to 10mm, and now 10 / 2 = 5mm, a result without remaining computation.

but let's say we wanna keep using this "result".

and multiply it by 20

0.5 * 20 = 10 we used the remaining computation with our next operation and got 10 which is a number.

now lets think a bit.

what does 0.5 mean while multiplying?

it means divide by 2?

how do we know?

because 1/0.5 is 2? yes, but how do we use that here? it's still a decimal.

and what does dividing mean?

well we can try to scale the units up by 10?

10/5 ok this is readable

but now how did we multiply 0.5 with 20? one is a number, one is not. different types.

it's simple. 0.5 never meant "0", ".", "5".

its a syntax.

it just means divide by 2 one more time.

so, how did we reach 0.5?

we tried to divide 1 by 2!!! (there is your TWO), but we couldn't divide because we didn't have enough resolution. so we carried the same computation over as the "result", in case the numbers later get big enough to produce one.

let's try something different: 5/2=2.5

so how did we get 2.5? well, we certainly get 2, but remember .5 is syntax we made up.

the result is "2 (then / 2)". it's just syntax. it's not a number, it's telling us what to do, not what this is.

so how did we find the first 2?

division is not a basic operation, its a loop/recursion.

its multiple subtractions.

(similar to decimals, negative numbers are also remaining operations. so we can't go under 0).

so we subtract the divisor from the dividend and count, until we can't anymore.

thats what dividing really means at its core. dividing is not a basic operation, because its made out of a basic operation. its not a primitive.
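
a minimal sketch of division as counted subtraction, assuming whole numbers only (a non-negative dividend and a positive divisor). the function name is made up for illustration.

```typescript
// Division as a loop: subtract the divisor and count,
// stopping before the value would go under 0.
function divideBySubtraction(
  dividend: number,
  divisor: number
): { count: number; remainder: number } {
  const whole = (n: number): boolean => Math.floor(n) === n;
  if (!whole(dividend) || !whole(divisor) || dividend < 0 || divisor <= 0) {
    throw new RangeError("expects a whole dividend >= 0 and a whole divisor > 0");
  }
  let count = 0;
  let remainder = dividend;
  while (remainder >= divisor) {
    remainder -= divisor;
    count += 1;
  }
  // Whatever is left in `remainder` is the part still waiting to be divided.
  return { count, remainder };
}
```

for 5 and 2 this subtracts twice and leaves 1 behind, which is the part that still has to be divided by 2.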

so we were able to subtract 2 times, and an operation is still remaining.

so we have "2 (then / 2)". this is just different syntax, you can write it as "2.(10/2)" => "2.5" like you normally would too, but this syntax is just more verbose about what is happening.

so what if we multiply this by 10?

(2 (then / 2)) * 10

= (2 * 10) + (10 / 2)

= 20 + 5

= 25
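
the same steps as a sketch in code, under my own assumptions: `PausedValue` is a hypothetical shape where "2 (then / 2)" is stored as a whole part of 2 plus a leftover of 1 that is still waiting to be divided by 2. the built-in `%` and `/` are themselves shortcuts standing in for the subtraction loop.

```typescript
// "2 (then / 2)": whole part 2, leftover 1 still waiting to be divided by 2.
type PausedValue = { whole: number; leftover: number; divisor: number };

function multiplyPaused(value: PausedValue, factor: number): PausedValue {
  // Scale the whole part: 2 * 10 = 20.
  const scaledWhole = value.whole * factor;
  // Scale the leftover, then resume the paused division: (1 * 10) / 2 = 5.
  const scaledLeftover = value.leftover * factor;
  const stillLeft = scaledLeftover % value.divisor;
  const resolved = (scaledLeftover - stillLeft) / value.divisor;
  return {
    whole: scaledWhole + resolved,
    leftover: stillLeft,
    divisor: value.divisor,
  };
}

const twoAndAHalf: PausedValue = { whole: 2, leftover: 1, divisor: 2 };
const timesTen = multiplyPaused(twoAndAHalf, 10);
```

`timesTen` ends up with a whole part of `25` and a leftover of `0`, so nothing is paused anymore. multiplying by 3 instead would leave a leftover of 1 again.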

this is how it works, but in between these operations there are still hidden operations, because dividing and multiplying are not fully expanded yet. but i'm not gonna write the same thing 10 times, and that's why we have syntaxes, rules and shortcuts. but shortcuts are shortcuts, not the underlying operation.

in math we can get an unresolved "result", and wait to use it somewhere else. but that's not a result.

that's a computation on pause. because in math we can cut the computation in the middle, store it, write it somewhere, and then insert it into another computation later.

so these are not numbers, these are syntax for unresolved operations. we just found ways to handle and work with these operations, that doesn't mean these ways are always correct in all conditions.

if we draw more parallels with programming, numbers are integers going from 0 to infinity. decimals are a tuple of (a number, a function for the remaining operation). operator overloads decide what to do based on the types of the operands on each side.

"cloudy with a chance of meatballs" is disturbing

if you are defaulting to using `grid` instead of `flex`, then your CSS is good, probably.

if you are defaulting to `flex` every time, you are bad at CSS for sure.

im not a fan of thoughts/speech/code/digital data being "copyrighted".

copying is not stealing. its copying. and its ok to copy.

today on social media people can easily find the original creator, easily donate to them, especially on nostr.

and many youtube creators are funded by merch and patreons.

they dont need copyright.

if the only way you can make money from what you are doing is via copyright, then you shouldn't make money. period. the same thing applies to intellectual property.

eat meat, eat meat, eat meat, eat meat, eat meat, eat meat