Running Keychat

Slick!

GM oranges

Oh and I autostart it on boot with a systemd unit in my `configuration.nix`:

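```nix
systemd = {
  services.ollama-webui = {
    # Start as part of the normal multi-user boot, once networking is up.
    wantedBy = [ "multi-user.target" ];
    after = [ "network.target" ];
    serviceConfig = {
      # shell.nix starts a detached tmux session (new-session -d) that keeps running in the background.
      Type = "forking";
      User = "pleblee";
      # nix-shell picks up shell.nix from this directory.
      WorkingDirectory = "/home/blee/apps/open-webui";
      # Give nix-shell a NIX_PATH so `import <nixpkgs>` resolves inside the service.
      Environment = "NIX_PATH=nixpkgs=/nix/var/nix/profiles/per-user/root/channels/nixos:nixos-config=/etc/nixos/configuration.nix:/nix/var/nix/profiles/per-user/root/channels";
    };
    script = "${pkgs.nix}/bin/nix-shell";
  };
};
```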

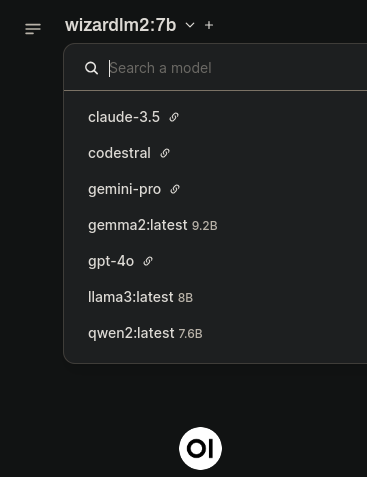

Local LLMs with ollama + open-webui, plus litellm configured with some hosted APIs

The free Claude 3.5 Sonnet is my daily driver now. When I run out of free messages, I switch over to open-webui, where I have access to various flagship models for a few cents. The local models usually suffice when I'm asking a dumb question.

Here's my `shell.nix`:

```nix
{ pkgs ? import <nixpkgs> {} }:

(pkgs.buildFHSEnv {
  name = "simple-fhs-env";
  # Packages available inside the FHS environment.
  targetPkgs = pkgs: with pkgs; [
    tmux
    bash
    python311
  ];
  # Runs on entering the env: open-webui in one tmux pane, the LiteLLM proxy in the other.
  runScript = ''
    #!/usr/bin/env bash
    set -x
    set -e
    # Activate the venv that provides open-webui and litellm.
    source .venv/bin/activate
    tmux new-session -d -s textgen
    tmux send-keys -t textgen "open-webui serve" C-m
    tmux split-window -v -t textgen
    tmux send-keys -t textgen "LITELLM_MASTER_KEY=hunter2 litellm --config litellm.yaml --port 8031" C-m
    tmux attach -t textgen
  '';
}).env
```

And here's `litellm.yaml`:

```yaml
# model_name is the alias clients request; litellm_params.model is provider/model.
model_list:
  - model_name: codestral
    litellm_params:
      model: mistral/codestral-latest
      api_key: hunter2
  - model_name: claude-3.5
    litellm_params:
      model: anthropic/claude-3-5-sonnet-20240620
      api_key: sk-hunter2
  - model_name: gemini-pro
    litellm_params:
      model: gemini/gemini-1.5-pro-latest
      api_key: hunter2
      safety_settings:
        - category: HARM_CATEGORY_HARASSMENT
          threshold: BLOCK_NONE
        - category: HARM_CATEGORY_HATE_SPEECH
          threshold: BLOCK_NONE
        - category: HARM_CATEGORY_SEXUALLY_EXPLICIT
          threshold: BLOCK_NONE
        - category: HARM_CATEGORY_DANGEROUS_CONTENT
          threshold: BLOCK_NONE
```
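LiteLLM's proxy exposes an OpenAI-style chat-completions API, so any OpenAI-compatible client can call the `model_name` aliases in the config. Here's a minimal standard-library Python sketch of talking to it directly. It assumes three things: the proxy started by `shell.nix` is listening on `localhost:8031`, the placeholder master key `hunter2` goes in a bearer token, and requests use the `/chat/completions` route. `build_request` and `ask` are hypothetical helper names, not part of LiteLLM.

```python
import json
import urllib.request

# Assumptions: LiteLLM proxy on localhost:8031 (port from shell.nix) and the
# placeholder master key "hunter2"; swap in your own values.
PROXY_URL = "http://localhost:8031/chat/completions"
MASTER_KEY = "hunter2"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for the LiteLLM proxy.

    `model` is one of the model_name aliases from litellm.yaml,
    e.g. "codestral", "claude-3.5" or "gemini-pro".
    """
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        PROXY_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {MASTER_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(model: str, prompt: str) -> str:
    """Send the request and return the first choice's text (needs the proxy running)."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=120) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]
```

With the proxy up, `ask("claude-3.5", "why won't my derivation build?")` should be routed to Anthropic using the key from `litellm.yaml`, and `"codestral"` would go to Mistral instead. Building the request separately from sending it lets you inspect what would go over the wire without a running proxy.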

https://bitcoiner.social/static/attachments/4rG1Kg_tony--d6_trim.mp4

I had way too much fun with LivePortrait this morning. Couldn't quite get it to run on Nix (I still suck at Nix derivations), but they're still running a free demo Space on Hugging Face.

Also out of China in the last few weeks came ChatTTS and Qwen2, state-of-the-art local text-to-speech and LLM, respectively. I've been running Qwen2 with ollama and open-webui, and it's not half bad compared to GPT-4o or Claude; on one prompt it actually gave me a better answer.

There's a ComfyUI node for LivePortrait. There's a lot you could do with this stuff. Make deepfakes and destroy democracy, sure. But maybe more relevant to my interests: we're nearly upon the toolset needed to build a fully artificial (and local) talking head. If there's a model that can generate facial expressions to match a voice, I think that's basically everything we need right there. Then use ollama to RAG over your own documents and legit talk to your home PC face to face.

what a time to be alive
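The RAG step mentioned above comes down to two things: find the passages most relevant to a question, then put them in the prompt ahead of the question. Below is a toy Python sketch of that loop. Plain bag-of-words cosine similarity stands in for the embedding model a real setup would use, and the function names are hypothetical. The resulting prompt is what you'd then hand to a local model through ollama.

```python
import math
import re
from collections import Counter


def tokens(text: str) -> Counter:
    """Lowercased word counts: a crude stand-in for a real embedding vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, docs: list[str], k: int = 2) -> list[str]:
    """Retrieval: return the k documents most similar to the question."""
    q = tokens(question)
    ranked = sorted(docs, key=lambda d: cosine(q, tokens(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, docs: list[str], k: int = 2) -> str:
    """Augmentation: prepend the retrieved passages to the question as context."""
    context = "\n\n".join(retrieve(question, docs, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )
```

Swapping `tokens` for real embeddings (and the document list for a vector store) changes the ranking quality, not the shape of the pipeline.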

# links

LivePortrait:

https://github.com/KwaiVGI/LivePortrait

the ComfyUI node for LivePortrait:

https://github.com/kijai/ComfyUI-LivePortraitKJ

ChatTTS:

state of the art open weights*

Sorry, I just found LivePortrait and your pfp came up first.

My phone gets hot when it can't connect to towers, so I still do it.

Amazing work! I'm still running a modified strfry-policies that first checks whether an npub is within a given set. I keep sets of npubs that are designated as within my web of trust, currently with three tiers. Then I apply different filtering depending on which set, if any, your npub is in. Mostly cribbed from nostr:npub1gm7tuvr9atc6u7q3gevjfeyfyvmrlul4y67k7u7hcxztz67ceexs078rf6 and that one vegan guy.
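The tiered filtering described above can be sketched as a standalone strfry write-policy plugin. This is not the strfry-policies API. As I understand strfry's plugin protocol, strfry writes one JSON request per line to the plugin's stdin, with the incoming event under `event`. It then reads back one JSON line containing that event's `id`, an `action` of `accept`, `reject` or `shadowReject`, and an optional `msg`. In this hypothetical Python version, the tier sets hold hex pubkeys (npubs decoded ahead of time). The per-tier rules in `TIER_RULES` are made-up examples, not the rules from the post.

```python
import json
from typing import Iterable, Optional, TextIO

# Hypothetical web-of-trust tiers: hex pubkeys (npubs decoded to hex beforehand).
TIERS: dict[int, set[str]] = {
    1: {"a" * 64},  # most trusted
    2: {"b" * 64},
    3: {"c" * 64},  # least trusted
}

# Hypothetical per-tier rules: allowed event kinds (None = any) and max content length.
TIER_RULES = {
    1: {"kinds": None, "max_len": None},
    2: {"kinds": {0, 1, 3, 6, 7}, "max_len": 20_000},
    3: {"kinds": {1, 7}, "max_len": 2_000},
}


def tier_of(pubkey: str) -> Optional[int]:
    """Return the most trusted tier containing this pubkey, or None if untrusted."""
    for tier in sorted(TIERS):
        if pubkey in TIERS[tier]:
            return tier
    return None


def decide(request: dict) -> dict:
    """Turn one strfry plugin request into an accept/reject verdict."""
    event = request["event"]
    verdict = {"id": event["id"], "action": "accept", "msg": ""}
    tier = tier_of(event["pubkey"])
    if tier is None:
        verdict.update(action="reject", msg="blocked: not in web of trust")
        return verdict
    rules = TIER_RULES[tier]
    if rules["kinds"] is not None and event["kind"] not in rules["kinds"]:
        verdict.update(action="reject", msg=f"blocked: kind {event['kind']} not allowed")
    elif rules["max_len"] is not None and len(event.get("content", "")) > rules["max_len"]:
        verdict.update(action="reject", msg="blocked: content too long")
    return verdict


def run(lines: Iterable[str], out: TextIO) -> None:
    """Plugin loop: one JSON request in, one JSON verdict out, flushed per line."""
    for line in lines:
        if line.strip():
            out.write(json.dumps(decide(json.loads(line))) + "\n")
            out.flush()
```

To wire it up, the script would need three more things: an entry point calling `run(sys.stdin, sys.stdout)`, execute permission, and its path set as the `writePolicy` plugin in `strfry.conf`. Checking the most trusted tier first means a pubkey listed in several sets gets the loosest rules.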

A lot of other stuff breaks when doing that currently. I tried creating a new npub recently and couldn't load a community that I created on this npub.