The user experience is fine, but if you actually want to build on it, I think it would be a struggle.

Your Jumble code gives the impression of being very poor quality; with a codebase this large, it's hard to make changes by hand.

AI training data probably doesn't even include Nostr libraries.

Certificate authorities (CAs) are infrastructure too.

Why not just support an external I2P proxy, since there are already i2p and i2pd to choose from?

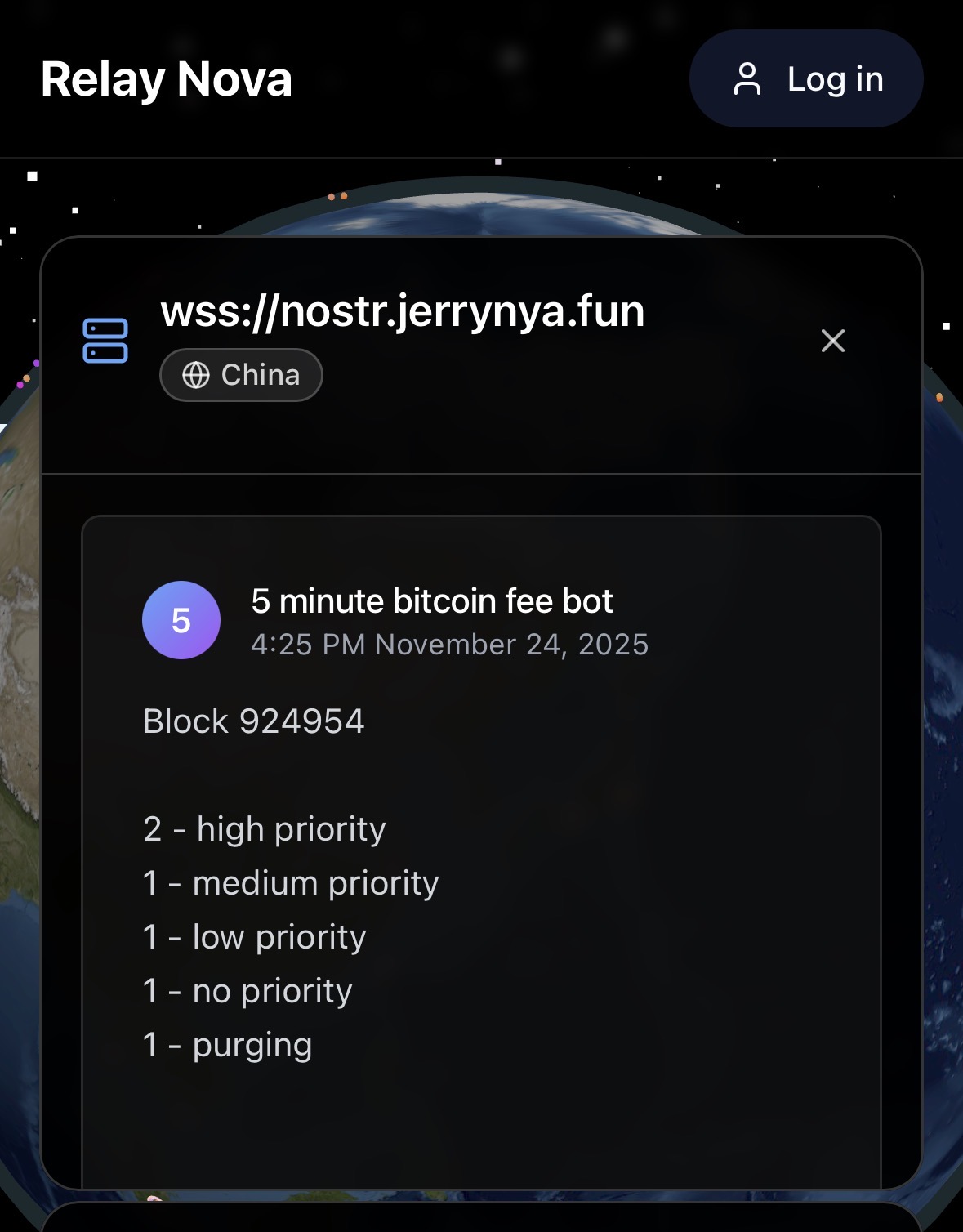

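Nostr actually doesn't depend on DNS: you can connect to a relay directly by IP. What is really controlled and occupied is the internet infrastructure itself, IP included.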
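To make the DNS point concrete: a relay URL whose host is an IP literal needs no name resolution at all. The minimal sketch below only checks for that case with the standard library. The helper name `relay_needs_dns` is hypothetical, and the example addresses come from the documentation-only ranges 203.0.113.0/24 and 2001:db8::/32. The connection itself still depends on IP routing, which is exactly the infrastructure the comment is talking about.

```python
import ipaddress
from urllib.parse import urlsplit


def relay_needs_dns(relay_url: str) -> bool:
    """Return True if connecting to relay_url first requires a DNS lookup.

    Hypothetical helper: a relay addressed by an IPv4/IPv6 literal
    (e.g. ws://203.0.113.7:7777) can be reached without name resolution.
    """
    host = urlsplit(relay_url).hostname  # drops the port and IPv6 brackets
    if host is None:
        raise ValueError(f"no host in relay URL: {relay_url!r}")
    try:
        ipaddress.ip_address(host)
        return False  # IP literal: connect directly, no DNS involved
    except ValueError:
        return True   # hostname: must be resolved before connecting
```

For example, `relay_needs_dns("ws://203.0.113.7:7777")` is `False`, while a hostname such as `wss://relay.example.com` still needs a lookup.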

Wherever you are, you can use Clash (or mihomo) for proxy routing.

Here is a configuration for it:

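```yaml
port: 7890                           # HTTP proxy port that applications point at
mode: rule                           # route connections according to `rules`
external-controller: 127.0.0.1:9090  # RESTful control API, local only

proxies:
  - name: i2p
    type: http                       # the I2P router's HTTP proxy
    server: 127.0.0.1
    port: 4444                       # default HTTP proxy port of both i2p and i2pd

rules:
  - DOMAIN-SUFFIX,i2p,i2p            # *.i2p hosts go through the i2p proxy
  - MATCH,DIRECT                     # everything else connects directly
```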
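Clash evaluates `rules` top to bottom and the first match wins. As a rough illustration of what `DOMAIN-SUFFIX,i2p,i2p` does, the sketch below reimplements just that one rule type: a host matches if it equals the suffix or ends with `.` plus the suffix. This is not mihomo's code; the function names are hypothetical, and a host that matches no rule is assumed to go DIRECT.

```python
def match_domain_suffix(host: str, suffix: str) -> bool:
    """DOMAIN-SUFFIX semantics: the suffix itself or any subdomain of it."""
    host = host.lower().rstrip(".")      # normalize case and a trailing dot
    suffix = suffix.lower().strip(".")
    return host == suffix or host.endswith("." + suffix)


# (rule type, value, target proxy) triples mirroring the `rules:` list
RULES = [
    ("DOMAIN-SUFFIX", "i2p", "i2p"),
]


def pick_target(host: str, rules=RULES, default: str = "DIRECT") -> str:
    """Return the target of the first matching rule, else `default`."""
    for rule_type, value, target in rules:
        if rule_type == "DOMAIN-SUFFIX" and match_domain_suffix(host, value):
            return target
    return default
```

So `relay.i2p` is sent to the `i2p` proxy, while a name that merely contains the letters, such as `noti2p`, is not.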

Or just use a web client with a browser proxy extension.

```python
import asyncio
import re
import time
import urllib.parse

import requests
from bs4 import BeautifulSoup

from nostr_poster import post_to_nostr

HEADERS = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/135.0.0.0 Safari/537.36'
}
REQUEST_TIMEOUT = 30         # seconds; without a timeout requests.get can hang forever
POST_INTERVAL = 4 * 60 * 60  # post once every 4 hours
RETRY_INTERVAL = 5 * 60      # back off 5 minutes after a failure


def extract_redirect_url_from_onclick(onclick_attr):
    """
    Extract the URL from a JavaScript onclick attribute.
    Example: onclick="location.href='https://v2.jk.rs/2022/12/22/275.html';"
    """
    if not onclick_attr:
        return None
    # Extract URL from the location.href='URL' pattern
    match = re.search(r"location\.href='(https?://[^']+)'", onclick_attr)
    return match.group(1) if match else None


def extract_images(url):
    """
    Extract the page title and all gallery image URLs from an article page.
    Always returns a (title, image_urls) pair; image_urls is empty on failure.
    """
    print(f"Fetching webpage: {url}")
    try:
        response = requests.get(url, headers=HEADERS, timeout=REQUEST_TIMEOUT)
        response.raise_for_status()  # Raise exception for 4XX/5XX responses
    except requests.exceptions.RequestException as e:
        print(f"Error fetching the webpage: {e}")
        return None, []  # same shape as the success case so the caller can unpack it

    soup = BeautifulSoup(response.text, 'html.parser')
    title = soup.title.string.strip() if soup.title and soup.title.string else "无题"  # "Untitled"
    # Only keep the part of the title before the first dash
    title = title.split('-')[0].strip()

    # Gallery images are <div data-fancybox="gallery" data-src="...">
    gallery_divs = soup.find_all('div', attrs={'data-fancybox': 'gallery'})
    print(f"Found {len(gallery_divs)} gallery divs")

    image_urls = []
    for div in gallery_divs:
        data_src = div.get('data-src')
        if data_src:
            # urljoin resolves relative URLs and leaves absolute ones unchanged
            image_urls.append(urllib.parse.urljoin(url, data_src))

    # Remove duplicates while preserving order
    unique_urls = list(dict.fromkeys(image_urls))
    print(f"Found {len(unique_urls)} unique image URLs on the webpage")
    return title, unique_urls


def find_article_url(base_url):
    """
    Return the first article URL on base_url that is reached through a
    JavaScript onclick redirect, or None if there is none.
    """
    print(f"Looking for article links on: {base_url}")
    try:
        response = requests.get(base_url, headers=HEADERS, timeout=REQUEST_TIMEOUT)
        response.raise_for_status()
    except requests.exceptions.RequestException as e:
        print(f"Error fetching the base webpage: {e}")
        return None

    soup = BeautifulSoup(response.text, 'html.parser')
    # Check every anchor tag for a JavaScript onclick redirect
    for anchor in soup.find_all('a'):
        redirect_url = extract_redirect_url_from_onclick(anchor.get('onclick'))
        if redirect_url:
            return redirect_url
    return None


async def main():
    base_url = "https://v2.jk.rs/"  # Main website URL
    while True:
        article_url = find_article_url(base_url)
        if not article_url:
            # Wait before retrying instead of spinning in a tight loop
            print(f"No article URL found, retrying in {RETRY_INTERVAL} seconds.")
            await asyncio.sleep(RETRY_INTERVAL)
            continue

        print(f"\nProcessing article: {article_url}")
        title, image_urls = extract_images(article_url)
        print(f"Found {len(image_urls)} images on {article_url}, title: {title}")

        if image_urls:
            for img in image_urls:
                print(f"Image URL: {img}")
            # Post to Nostr
            await post_to_nostr("随机妹子图", title, image_urls)

        # Sleep 4 hours; asyncio.sleep does not block the event loop like time.sleep
        await asyncio.sleep(POST_INTERVAL)


if __name__ == "__main__":
    try:
        # Restart main() after unexpected errors until the user presses Ctrl+C
        while True:
            try:
                asyncio.run(main())
            except Exception as e:
                print(f"An error occurred: {e}, restarting the program.")
                time.sleep(RETRY_INTERVAL)
    except KeyboardInterrupt:
        print("\nProcess interrupted by user.")
    finally:
        print("Exiting the program.")
```

What open source?

Get a client that can use a proxy, and set up a relay in I2P.
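A minimal sketch of the client side, assuming a local I2P router whose HTTP proxy listens on 127.0.0.1:4444 (the default for both i2p and i2pd) and a relay you host at a `.i2p` address. It uses only `urllib` from the standard library to fetch the relay's NIP-11 information document, requested with `Accept: application/nostr+json`, through that proxy. The helper names are hypothetical; the WebSocket traffic a real Nostr client sends would additionally need a WebSocket library that supports HTTP proxies.

```python
import urllib.request

# Assumption: the local I2P router's HTTP proxy address
I2P_HTTP_PROXY = "http://127.0.0.1:4444"


def make_i2p_opener(proxy_url: str = I2P_HTTP_PROXY) -> urllib.request.OpenerDirector:
    """Build a urllib opener that sends plain-HTTP requests through the I2P proxy."""
    return urllib.request.build_opener(
        urllib.request.ProxyHandler({"http": proxy_url})
    )


def fetch_relay_info(relay_host: str, timeout: float = 60.0) -> bytes:
    """GET the relay's NIP-11 information document over I2P.

    relay_host is the .i2p address of your own relay (hypothetical here).
    I2P tunnels are slow to build, hence the generous timeout.
    """
    req = urllib.request.Request(
        f"http://{relay_host}/",
        headers={"Accept": "application/nostr+json"},  # NIP-11 media type
    )
    with make_i2p_opener().open(req, timeout=timeout) as resp:
        return resp.read()
```

Usage would be `fetch_relay_info("<your-relay>.i2p")` once the router has integrated into the network; a JSON reply means the relay is reachable over I2P.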