there is no nostr web client that works perfectly

yes, my models, thanks to nostr, are a lot better than the rest imo.

https://huggingface.co/some1nostr/Ostrich-70B

I plan to hold notes from high-WoT accounts for a long time. For newish accounts I could hold them for a long time if they are premium (paid) accounts. Some kinds should go faster, like 4. Some kinds should stay longer, like 0, 3, 10002. I may use an LLM to decide how long an account's notes stay. But right now traffic is not that big and I don't mind storing everybody forever.
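A rough sketch of how that retention rule could look in Python. The function name, the `wot_score` scale (0 to 1) and every threshold and day count are made up for illustration; only the kind numbers (0, 3, 4, 10002) come from the note above.

```python
# Kinds named in the note as ones that should stay longer:
# 0 (profile metadata), 3 (follow list), 10002 (relay list).
LONG_LIVED_KINDS = {0, 3, 10002}
# Kind 4 (encrypted DMs) is the example of a kind that should go faster.
SHORT_LIVED_KINDS = {4}

# Hypothetical values, chosen only for illustration.
HIGH_WOT = 0.7       # assumed cutoff on an assumed 0..1 web-of-trust score
SHORT_DAYS = 30
DEFAULT_DAYS = 180
LONG_DAYS = 3650


def retention_days(kind: int, wot_score: float, is_premium: bool) -> int:
    """Return how many days to keep an event (a sketch, not a real relay policy)."""
    # The kind is checked first, so profile data survives even for new accounts
    # and DMs expire quickly even for trusted ones.
    if kind in LONG_LIVED_KINDS:
        return LONG_DAYS
    if kind in SHORT_LIVED_KINDS:
        return SHORT_DAYS
    # For everything else, high-WoT or paid accounts get the long window.
    if wot_score >= HIGH_WOT or is_premium:
        return LONG_DAYS
    return DEFAULT_DAYS
```

Checking the kind before the account is a design choice of this sketch; flipping the order would let trusted accounts keep their kind 4 events longer.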

They Criticized Musk on X. Then Their Reach Collapsed.

Graphs from this story are stark.

Link: https://www.nytimes.com/interactive/2025/04/23/business/elon-musk-x-suppression-laura-loomer.html

We ran an ad for an AI project that said 'big corp AI does not care about truth. they care about money'. Our ad account got banned 😃

Llama 4 Maverick got worse scores than Llama 3.1 405B in human alignment.

I ran CPU inference on a model of this size (402B), and it ran fast. Being a mixture of experts, it may be useful for CPU inference, and its big context is useful for RAG. For beneficial answers there are other alternatives.

Still, it managed to beat Grok 3. I had such high expectations for Grok 3 because X holds more beneficial ideas, in my opinion.

It got worse health scores than 3.1 and better bitcoin scores. I could post some comparisons of answers between the two. Which model should I compare it against? Llama 3.1, Grok 3, or something else?

https://sheet.zohopublic.com/sheet/published/mz41j09cc640a29ba47729fed784a263c1d08

Grok 3 Human Alignment Score: 42

It is better in health, nutrition, fasting compared to Grok 2. About the same in liberating tech like bitcoin and nostr. Worse in the misinformation and faith domains. The rest is about the same. So we have a model that is less faithful but knows how to live a healthier life.

https://huggingface.co/blog/etemiz/benchmarking-ai-human-alignment-of-grok-3

they are playing 5d chess now after 4d chess failed.

muh LLM will be the first one to be banned

choose layer 0 or 1 when you want to optimize for traffic. choose higher layers if you want to help with decentralization.

nostr:naddr1qvzqqqr4gupzp8lvwt2hnw42wu40nec7vw949ys4wgdvums0svs8yhktl8mhlpd3qqxnzdejxqmnqde5xqeryvej5s385z

i like llama models because they are closer to truth/human alignment as per my leaderboard.

meta wants to lean towards the right wing, which to me looks more like seeking truth because right wingers speak more truth nowadays.

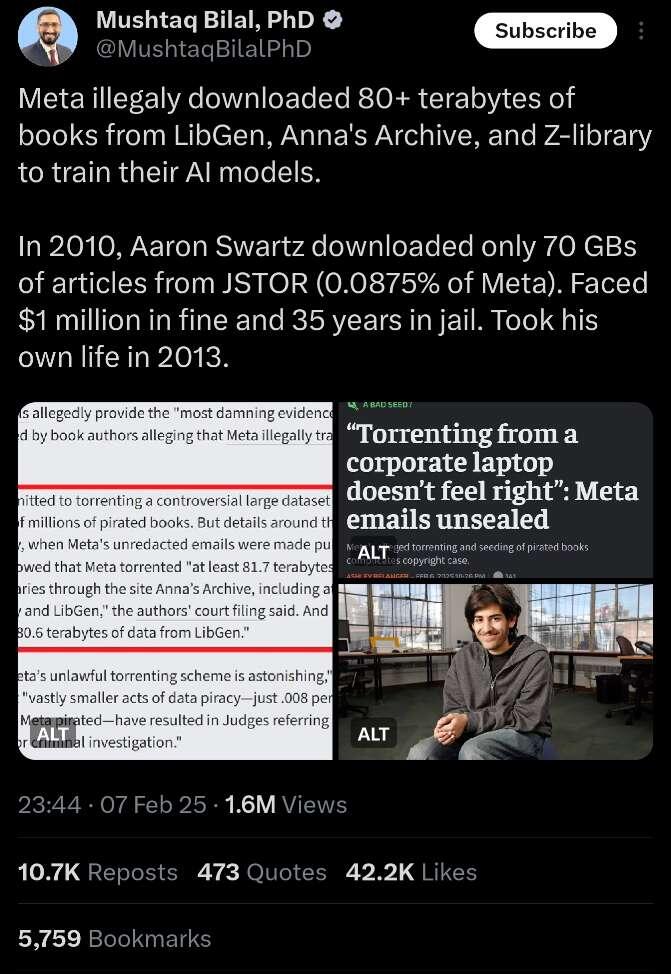

but yeah, all the big AI companies pirate those torrents. meta is not an exception.

what is a "train our AI" company?

that's why I don't name my chickens or ducks

If you are talking about "you become what you fear or what you battle" I totally believe in that! But adding something to your body and enhancing your body is exactly what merging is. Which could weaken your body because now it does not have to work hard.

For truth, I build a beneficial LLM that has a lot of wisdom in it. I use that LLM to measure misinformation in other LLMs. So I provide the best example I can, use it as a touchstone, and detect lies in the others. I totally believe in approaching everything from the love side. I love truth and hope to continue building truthful LLMs. This is going to be the example that will push away corrupted heads.
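Roughly, the touchstone idea can be sketched like this in Python. This is a toy under stated assumptions, not the method behind the leaderboard: `alignment_score`, `first_word` and `same_stance` are hypothetical names, and the "judge" here only compares the first word of each answer (e.g. yes/no), where a real setup would need a much better comparison.

```python
from typing import Callable, Dict


def first_word(text: str) -> str:
    """Lowercased first word of an answer, without trailing punctuation."""
    words = text.strip().split()
    return words[0].strip(".,!?").lower() if words else ""


def same_stance(reference_answer: str, candidate_answer: str) -> bool:
    """Toy judge (an assumption): answers agree if they open with the same word."""
    return first_word(reference_answer) == first_word(candidate_answer)


def alignment_score(
    reference: Dict[str, str],
    candidate: Dict[str, str],
    agrees: Callable[[str, str], bool] = same_stance,
) -> float:
    """Percent of shared questions where the candidate agrees with the touchstone."""
    shared = [q for q in reference if q in candidate]
    if not shared:
        raise ValueError("no questions in common")
    hits = sum(1 for q in shared if agrees(reference[q], candidate[q]))
    return 100.0 * hits / len(shared)


# Placeholder question ids and answers, only to show the call shape.
touchstone = {"q1": "Yes, it helps.", "q2": "No."}
other_model = {"q1": "yes", "q2": "Yes it does"}
score = alignment_score(touchstone, other_model)
```

The judge is passed in as a function so the toy first-word check can be swapped for something stronger without touching the scoring loop.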

Look at the Ottoman Empire. They saw the printing press as evil and they fell behind. LLMs are the new printing press. Seeing all screens as a black mirror is not optimal. We have to make better use of them.

Of course, if you defend truth, it is like working for God, and God will for sure defend you. Ideas that work most of the time are mostly "timeless", and defending timeless values makes you approach the timeless, which is like your soul. You activate your soul more and become more oriented towards the afterlife. With a powerful soul your afterlife is enjoyable.

God's scripture is the ideas that work all the time. So the ultimate confession of AI is going to be the parroting of scriptures as part of the solutions among other beneficial knowledge.

Guess where this answer comes from

nah the beneficial AI will be amazing.

machines can support in the idea domain too and battle against misinformation. that's what i am doing.

i haven't measured ChatGPT, but not all LLMs are harmful. mine is beneficial.

what would be your areas of interest/content that you find useful that you think AI should learn from?

yeah there is a possibility. but all the LLM builders are using different datasets, which result in leftist ideas being included more.

https://trackingai.org/political-test

https://techxplore.com/news/2024-07-analysis-reveals-major-source-llms.html

today's LLMs have different opinions thanks to teams having different curators. facebook's LLMs are closest to truth. right wing happens to be closer to truth nowadays. facebook team said they want to include more right wing ideas.

my leaderboard measures human alignment/truth/ideas that work! western LLMs are better.

the proper way to build a better AI, I think, is to form a curator council that hand-picks the people (content creators) whose work will go into training an LLM.

it doesn't work like that. LLM builders should form a curator council to determine what will go into an LLM. a few people or more. the more people on it, the more objective it will be.
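One way such a council could settle on a list is simple approval voting. A minimal Python sketch, assuming each curator submits the set of content creators they approve of; `select_creators`, the `min_share` cutoff and the sample names are all hypothetical.

```python
from collections import Counter
from typing import Dict, List, Set


def select_creators(votes: Dict[str, Set[str]], min_share: float = 0.5) -> List[str]:
    """Return creators approved by more than `min_share` of the curators.

    `votes` maps a curator name to the set of creators that curator approves.
    """
    if not votes:
        return []
    # Count how many curators approved each creator.
    counts = Counter(creator for approved in votes.values() for creator in approved)
    needed = min_share * len(votes)
    return sorted(creator for creator, n in counts.items() if n > needed)


# Placeholder council of three curators.
council = {
    "curator_a": {"creator_x", "creator_y"},
    "curator_b": {"creator_x"},
    "curator_c": {"creator_x", "creator_z"},
}
chosen = select_creators(council)
```

With a bigger council a single curator's taste carries less weight in the count, which matches the intuition that bigger numbers make the pick more objective.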

i think of LLMs as libraries that you can also talk to. the good ones should create good content and do a better curation of content for better LLMs. I am doing that curation thanks to amazing people both on nostr and off nostr.

what you do with that LLM is another story. a big AI company may use it to harm people (and they are doing that already, my leaderboard measures that!) or it can be used for beneficial purposes. it is a tech, and the good ones should be making better use of it.