Good idea. Also: getting in the habit of thinking about all the things you're grateful for on a daily basis gives you a HUGE improvement in mental health over time *

* Not medical advice. But there ARE scientific studies related to this.

It's built on Nostr, tho, isn't it? And Nostr is decentralized. Or does it use something other than standardized NIPs to do its main thing, and only use Nostr for the social media part?

#GM, #nostr

The problem with "self" is that you only know for sure that YOURS exists. For all you know, literally everyone other than you is a philosophical zombie. AI is no different.

I've got to say, #JIRA #usability has DRASTICALLY improved lately. A "Configure Fields" link right on the ticket? And I don't even have to dig for it or google "How to add #storyPoints field to JIRA ticket"? Whaaaaatt?... 👀

#UX

True facts.

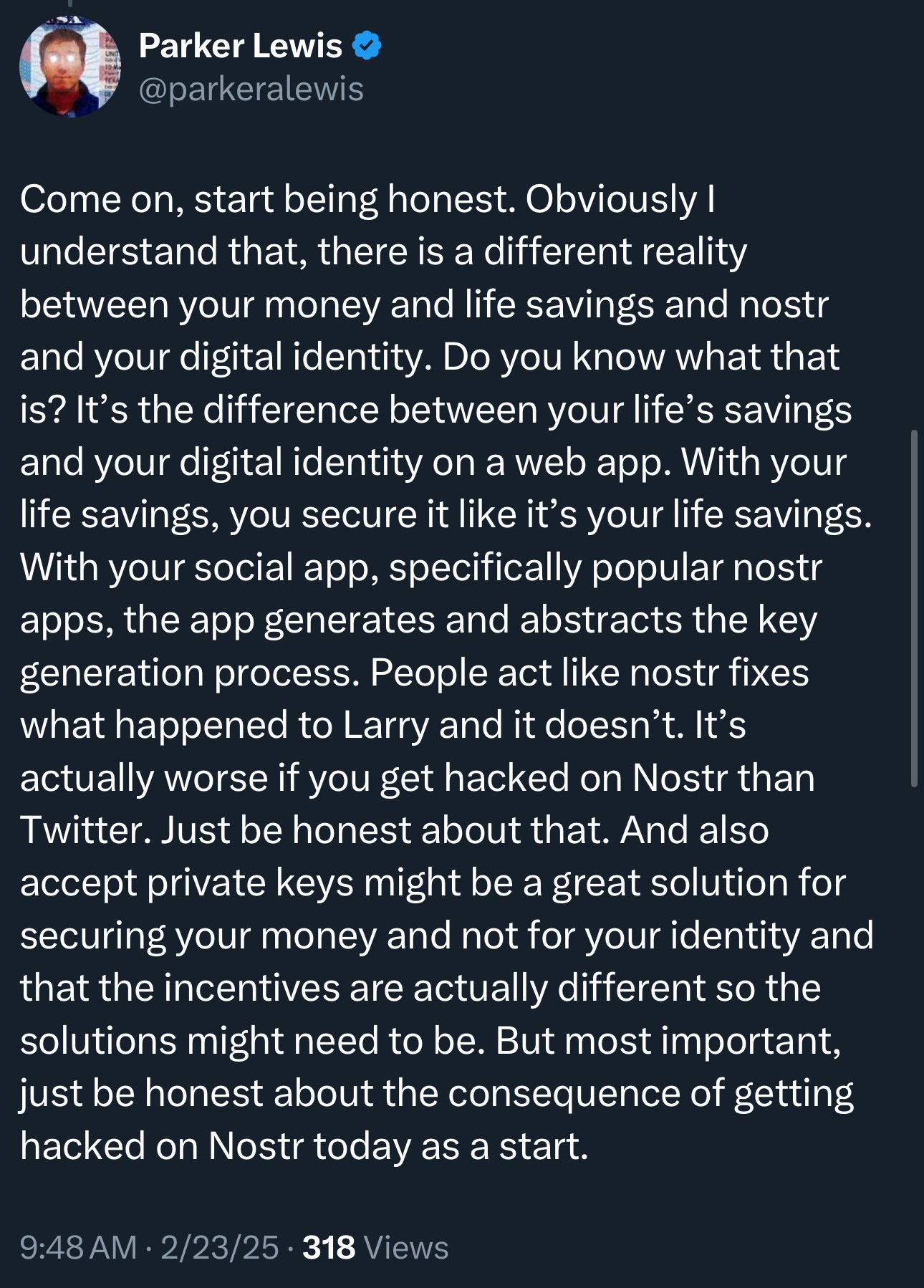

It doesn't sound like he dislikes it. He's just pointing out that if you get hacked (someone gets hold of your private key), you now permanently share your account with the hacker, and there's no mommy and daddy to call up to kick the hacker out and "change your password" (private key). And it's true. But also: the hacker can't lock you out of your account either, unless you also lose your private key. So you can always tell your followers where to find you if you change accounts. Of course, you have to prove that it's really you posting, because a hacker can also post accusing you of being the hacker and redirecting your followers to a different account...

With more sovereignty comes more responsibility and less help. But I think it's worth it. We just need to NORMALIZE NOSTR CLIENTS NOT REQUIRING YOUR PRIVATE KEYS!!!! Because every client is a new way to get hacked, and if your private key ain't in there, the damage a hacker can do is limited.

Yes, please. As far as Spotify goes, Fountain is already there and works pretty well. But I'm not aware of a good nostr-based YouTube alternative.

What do you guys think? Should I join the 'illuminati'? 😂😂😂💀☠️

Yes, but I think we also have a responsibility to try to prevent undue suffering of others, if possible. The two things are not mutually exclusive. We are not talking about eating meat here; we are talking about needless cruelty towards animals for the sake of a little extra profit going into the pockets of corporations.

More Research Showing AI Breaking the Rules

These researchers had LLMs play chess against better opponents. When they couldn’t win, they sometimes resorted to cheating.

Researchers gave the ... https://www.schneier.com/blog/archives/2025/02/more-research-showing-ai-breaking-the-rules.html

#academicpapers #Uncategorized #cheating #chess #games #LLM #AI

And that's what we call "user error", "lack of understanding of AI", and "sensationalist reporting". AI did not break its own rules. It did exactly what it was instructed and trained to do. I'm pretty sure that "playing chess by the rules" is nowhere in its ethical constraints, and the prompt told it that its primary goal was to win without specifying that it couldn't cheat at a game. Now, if it weren't a game but something involving actual harm, that would be a different case, because most LLMs ARE programmed with constraints against causing harm.

We really need to quit blaming AI for our own bad prompting and lack of understanding of how it's programmed.