ISTR studies indicated it's not so, though.

nostr:nprofile1qqsvf646uxlreajhhsv9tms9u6w7nuzeedaqty38z69cpwyhv89ufcqpzamhxue69uhhyetvv9ujucm4wfex2mn59en8j6gpzemhxue69uhhyetvv9ujuurjd9kkzmpwdejhgqg5waehxw309aex2mrp0yhxgctdw4eju6t0t25cfd hasn't Tweeted yet, but reports that he's heading a new Pile of #Bitcoin venture suggest an interesting move.

We shall, of course, refer to the new company as "Tether One".

Stacker News AMA coming up!

I love doing these: always some surprising questions and answers

That's actually what I'm trying to avoid: using trust assumptions. This approach relies on alignment of incentives instead...

This is not lightning, which requires a utxo per user, but a new thing. The controller would obey user commands to transfer funds, and be penalized if it didn't do it.

I've long been toying with the idea of an L2 for unenforceable ("trivial") amounts. The only mechanism here is that if the coordinator misbehaves, a user can prove it and send *all* the funds to a fallback. The default fallback is half-burn, half-fees*, but it could also be some custodian of last resort.

This is strictly weaker than, say, lightning, but I think it's the best you can do for tiny amounts: ensure that there's no profit in cheating.

The problem is that I don't think you can prove all the different ways the coordinator can screw you. It can fail to respond at all. It can claim to be unable to make any lightning payment you ask for. You can probably prove misbehaviour for any transfer to/from other internal users of the same system, but that's not very useful if you can't get funds out!
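A minimal sketch of the incentive argument, in Python. The function names are hypothetical, and "fees" is assumed to mean miner fees; nothing here is an actual protocol. It only shows the arithmetic: a proven cheat sends the whole pool to the half-burn, half-fees fallback, so the coordinator keeps nothing, while an unprovable cheat is exactly the gap described above.

```python
def fallback_split(total_sats: int) -> tuple[int, int]:
    """Split the whole pool half-burn, half-fees.

    Assumption: an odd satoshi goes to the burn side.
    """
    fees = total_sats // 2
    burned = total_sats - fees
    return burned, fees


def coordinator_payoff(pool_sats: int, stolen_sats: int, proven: bool) -> int:
    """What the coordinator keeps after trying to take `stolen_sats`.

    If a user can prove the misbehaviour, *all* funds go to the fallback
    and the coordinator keeps nothing. If nobody can prove it, the
    coordinator keeps what it took.
    """
    if not 0 <= stolen_sats <= pool_sats:
        raise ValueError("stolen_sats must be between 0 and pool_sats")
    return 0 if proven else stolen_sats


if __name__ == "__main__":
    print(fallback_split(1001))
    print(coordinator_payoff(1000, 300, proven=True))
    print(coordinator_payoff(1000, 300, proven=False))
```

The `proven=False` branch is the whole problem: the scheme only removes the profit from cheating that can actually be demonstrated.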

So I'm posting here in case it forms a useful component for building something. Good luck!

* Christian Decker nominated this a "Nero protocol", which I like.

That is a believable scenario! But it's a societal change, which takes a generation, at best.

Capitalism is good and noble and drives competition for the betterment of everyone! It's only the unholy fiat which drives people to lower quality or shrinkflate! They would never think to increase profits that way otherwise!

Seriously, if you're making these arguments and nobody's pushing back, they're either not listening or you're in a bubble.

I both want and dread writing up my evaluation of OP_CTV. Any AIs good at drawing simple diagrams? I think a diagram showing what parts of the tx CTV covers is probably the best way to present it (and thus evaluate it against possible alternatives). Txid and wtxid hashing diagrams would complete the family.

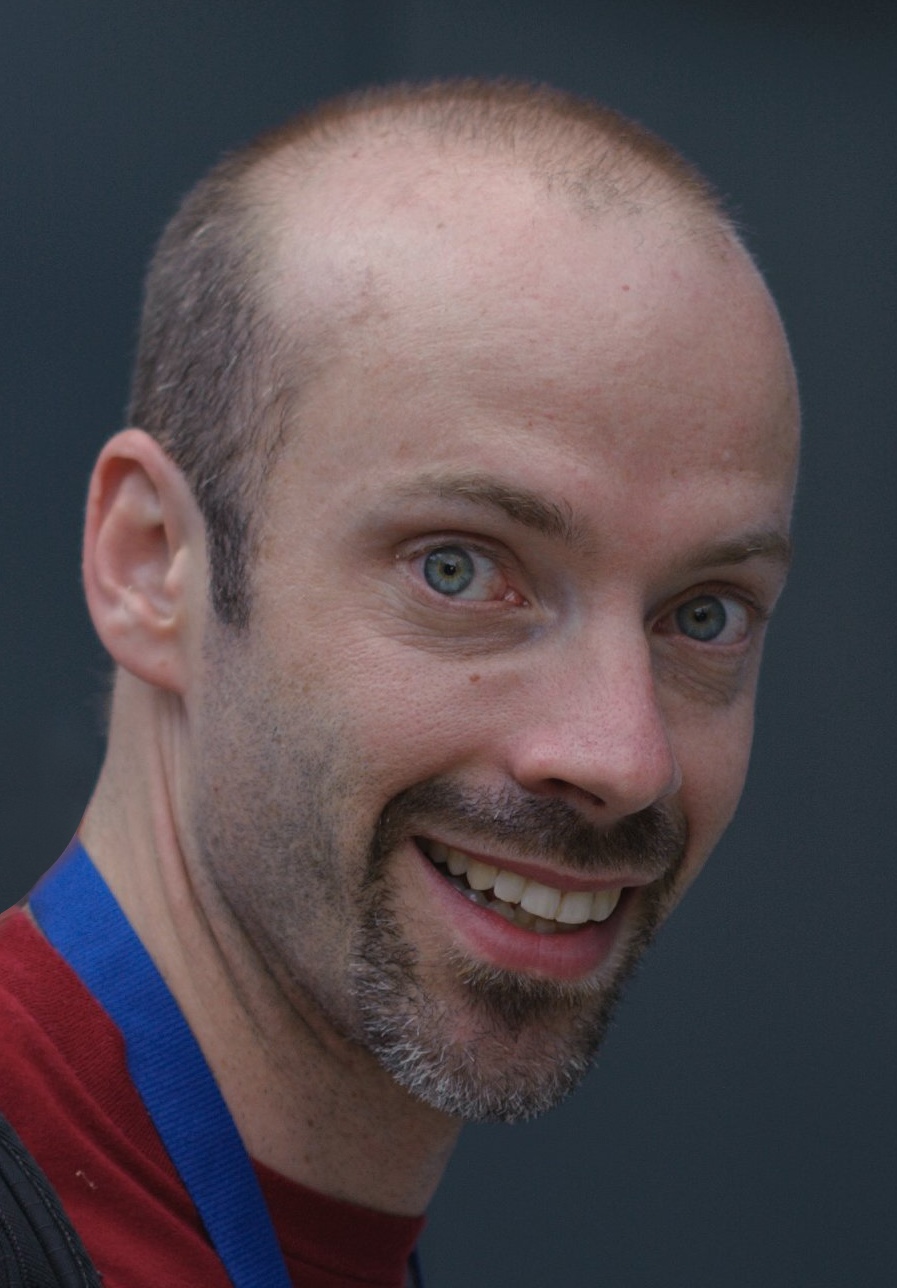

In 2019 nostr:npub1xtscya34g58tk0z605fvr788k263gsu6cy9x0mhnm87echrgufzsevkk5s and I were volunteers at the Berlin Lightning Conference assigned to coat check (separate shifts). 6 years later I have the honor of sharing a stage with him at the MIT Bitcoin Expo (and the incredible nostr:npub1gcxzte5zlkncx26j68ez60fzkvtkm9e0vrwdcvsjakxf9mu9qewqlfnj5z!).

Started from coat check now we here!

📸 by nostr:npub1dnzzyhmewrzkh862z7z2shwmhh5htx0rvkagepj2fkgst9ptwg3qj4x52h

nostr:note1au442p886zsx7ddtkcw443plg5gz4pujzw4ek3rdkhsmmq6knrds54wt3j

I was always a bit saddened that The Lightning Conference never had a sequel.

It was so formative, such an amazing glimpse of the future.

Evergreen post:

Bitcoin price predictions and stupid people attract.

I've lived through the 16 to 32 bit and 32 to 64 bit computing transitions, so I'm base sceptical that we've maxed out (though I wouldn't rule it out). Definitely there are cryptographic primitives which want larger numbers.

The real problem is handling overflows: it's actually simpler to say "we don't do that" with some certainty (you *can* actually hit size limits in GSR due to stack size limits, but 4MB is a fairly large number!).

TBH: The complexity for people *writing* Script dealing with signed numbers is actually a much bigger problem than the well-known issues of simple arithmetic operations on large numbers in the implementation.

⚠️ NARRATIVE VIOLATION ⚠️

Me too.

https://en.m.wikipedia.org/wiki/Triquetral_bone#Fracture

Damn. Can still type fine, though!

We're not that structured 🤣

But our marketing people are kinda irresponsibly long nostr.

I suspect that some deep part of their brains is thinking "the Research folk get to invent new scripting and signing systems, what can we do to be cool like that?"

Well, actually the migration failed, so you didn't need to downgrade. But now you've done that you can upgrade to 25.02 if you want.

It seems to me that you could prove a hardened derivation or a BIP-39 derivation. Unfortunately this reveals your secret key, so you need to either use a (quantum resistant!) ZKP, or a two-stage reveal. First you publish the hash of the proof, what outputs you will spend, and an indication of what address you want to transfer the coins to. Then, after that is mined, you do the spend and put the derivation in the annex (or, for non-taproot, in an OP_RETURN).
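Here is a rough sketch of the two-stage reveal as a plain commit-then-reveal, in Python. Everything concrete is an assumption for illustration: the function names, the use of SHA-256, the string form of outpoints and addresses, and the dictionary layout. It does not check the derivation proof itself, only that the stage-two reveal matches what was committed in stage one.

```python
import hashlib


def stage_one_commitment(proof: bytes, outpoints: list[str], destination: str) -> dict:
    """Stage 1: publish the hash of the proof, the outputs to be spent,
    and the address the coins should move to. The proof stays secret."""
    return {
        "proof_hash": hashlib.sha256(proof).hexdigest(),
        "outpoints": sorted(outpoints),
        "destination": destination,
    }


def stage_two_matches(commitment: dict, revealed_proof: bytes,
                      spent: list[str], paid_to: str) -> bool:
    """Stage 2: after the commitment is mined, the spend reveals the proof.

    Returns True only if the revealed proof hashes to the committed value
    and the spend uses the committed outputs and destination.
    """
    return (
        hashlib.sha256(revealed_proof).hexdigest() == commitment["proof_hash"]
        and sorted(spent) == commitment["outpoints"]
        and paid_to == commitment["destination"]
    )


if __name__ == "__main__":
    proof = b"hypothetical derivation proof"
    c = stage_one_commitment(proof, ["txid-a:0", "txid-b:1"], "hypothetical-address")
    print(stage_two_matches(c, proof, ["txid-b:1", "txid-a:0"], "hypothetical-address"))
    print(stage_two_matches(c, proof, ["txid-a:0", "txid-b:1"], "attacker-address"))
```

The point of committing to the destination up front is that someone who learns the secret from the stage-two reveal cannot redirect the coins to a different address.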

Yes, gossip is a terrible way to propagate real-time information about the state of your channel. This is kind of by design, since we stagger gossip, but there are also real propagation issues which we'd like to solve with gossip v2, though it will still be very slow. There's another proposal, which is to allow you to publish a fee rate card with several different levels, where the fee would vary depending on the remaining capacity in your channel. So someone just looking for a cheap payment would try the lowest fee rate that you offer and probably fail, but somebody who was really eager to get their payment through would be able to pay the highest.
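To make the fee rate card idea concrete, here is a toy model in Python. The `FeeTier` structure, the thresholds, and the ppm values are all made up for illustration and are not taken from any actual proposal text. The card is public, the remaining capacity is not, so a sender just picks a rate from the card and finds out whether it was enough.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeeTier:
    min_remaining: float  # tier applies when remaining capacity fraction >= this
    ppm: int              # fee rate asked for in that situation


def required_ppm(card: list[FeeTier], remaining: float) -> int:
    """Fee rate the channel currently asks for, given its remaining capacity."""
    if not 0.0 <= remaining <= 1.0:
        raise ValueError("remaining must be a fraction between 0 and 1")
    eligible = [t for t in card if t.min_remaining <= remaining]
    if not eligible:
        raise ValueError("card has no tier covering this capacity")
    # The tier with the highest threshold we still meet is the one in force.
    return max(eligible, key=lambda t: t.min_remaining).ppm


def forward_succeeds(card: list[FeeTier], remaining: float, offered_ppm: int) -> bool:
    """A payment goes through only if it offers at least the current rate."""
    return offered_ppm >= required_ppm(card, remaining)


# Hypothetical card: cheap while the channel is mostly full, expensive when nearly drained.
CARD = [FeeTier(0.0, 2000), FeeTier(0.25, 500), FeeTier(0.75, 100)]

if __name__ == "__main__":
    print(required_ppm(CARD, 0.5))
    print(forward_succeeds(CARD, 0.1, 100))
    print(forward_succeeds(CARD, 0.1, 2000))
```

In this model the bargain hunter offering the lowest rate fails whenever the channel is not nearly full, while the eager sender offering the top rate always gets through.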

Interesting. I see it the opposite way. People are proposing all kinds of one-off hacks for their specific use cases, which in my experience leads to more bugs and less usability, and exponential complexity as they don't work together. I would rather see "what if we had full scripting again?" and review proposals from that angle.

As to safety, that still needs to be assessed. Details really matter, so I cannot give a verdict until the design and implementation are complete. So step 1 is to do that. Step 2 is to evaluate whether it's worth it.