We probably should have just let Russia join NATO when they applied, or at least given them a path to join after certain reforms.

Mentally I’m trying to be here. 🌊🧘♀️

But physically I’ve been bedridden for a week. I miss the ocean so much. Listening to the sound of waves is all I need. 🌊💜🙏🧘♀️

#nature https://video.nostr.build/c2052c7729897ba4fc424f884b8707a330a0c1694c409d59a1048deaafb498f3.mp4

Hope you're up and around again soon.

I haven't thought deeply on this, but yes, you certainly wouldn't post a list of URLs. Posting a list of hashes is still potentially a problem, but the bad guy would have to parse and hash every URL in every note on nostr, and compare against your list of hashes to make use of it. I don't know. Maybe there's extra data you could use to construct the hash that would make it more difficult to reverse?

Well, hashes are one-way, so given a hash, you couldn't find the original. But given the original and a hash, you could know* that the hash is of that original.

* There are technically multiple originals that would produce the same hash, but it's pretty unlikely in the real world, at least as far as I understand. But I am not a cryptologist.

ah, I guess that could still be used by someone trying to assemble the "bad" list, but more time and work intensive (crawl all notes, hash domains, compare with the list).
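
To make the trade-off in this thread concrete, here is a rough sketch of both sides: checking a domain against a published list of hashes, and the crawl-hash-compare approach someone could use to rebuild the "bad" list. Everything here is an assumption for illustration. The function names are made up, the domains are placeholders, and `DefaultHasher` is only a standard-library stand-in for a real cryptographic hash such as SHA-256 (it is not secure).

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashSet;
use std::hash::{Hash, Hasher};

/// Stand-in digest. DefaultHasher is NOT cryptographic; a real list would
/// use something like SHA-256. It is used here only to avoid dependencies.
fn digest(input: &str) -> u64 {
    let mut hasher = DefaultHasher::new();
    input.hash(&mut hasher);
    hasher.finish()
}

/// Publisher side (hypothetical): turn the private list of domains into
/// the list of hashes that actually gets posted.
fn publish_hashes(domains: &[&str]) -> HashSet<u64> {
    domains.iter().map(|d| digest(d)).collect()
}

/// Client side: given an original and the published hashes, check whether
/// the original is on the list. This is the "original plus hash" case.
fn is_listed(published: &HashSet<u64>, domain: &str) -> bool {
    published.contains(&digest(domain))
}

/// Adversary side: the hashes cannot be reversed directly, but every
/// crawled domain can be hashed and compared against the published list.
fn recover_listed<'a>(published: &HashSet<u64>, crawled: &[&'a str]) -> Vec<&'a str> {
    crawled
        .iter()
        .copied()
        .filter(|d| is_listed(published, d))
        .collect()
}
```

The adversary only recovers entries that show up in what they crawled, which is the extra time and work mentioned above. Mixing extra data into the hash would only help if that data stayed secret: anything the clients need in order to check the list, the crawler can use too.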

Any Bevy experts on here? I'm trying to upgrade my project from 0.14.2 to 0.15.2 and there's a change that breaks:

commands
    .entity(entity)
    .insert(map.highlighted_material.clone_weak());

where map.highlighted_material is a Handle

#rustlang #rust #bevy

Answering my own question, in case it's useful to someone else. It needed wrapping in a MeshMaterial2d like so.
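
The snippet for the fix did not come through here, so this is a reconstruction and not the original code. In Bevy 0.15 a bare `Handle` is no longer a component, so the call would presumably become `.insert(MeshMaterial2d(map.highlighted_material.clone_weak()))`. The sketch below models that idea with stand-in types so it compiles without Bevy. `Component`, `Handle`, `MeshMaterial2d` and `insert` here are simplified imitations, not Bevy's real definitions, and `ColorMaterial` is only a guess at the material type.

```rust
use std::marker::PhantomData;

// Stand-ins for illustration only; these are NOT Bevy's real definitions.
trait Component {}

// Assumed material type; the original post does not say which one it uses.
struct ColorMaterial;

// Simplified handle: just an id plus the asset type it points at.
struct Handle<T> {
    id: u32,
    _marker: PhantomData<T>,
}

impl<T> Handle<T> {
    // Mirrors the name of the method used in the post; here it only copies the id.
    fn clone_weak(&self) -> Handle<T> {
        Handle { id: self.id, _marker: PhantomData }
    }
}

// Only the wrapper implements Component in this model; a bare Handle does not.
struct MeshMaterial2d<M>(Handle<M>);
impl<M> Component for MeshMaterial2d<M> {}

// Stand-in for `commands.entity(entity).insert(...)`: accepts components only.
fn insert<C: Component>(component: C) -> C {
    component
}

// `insert(material.clone_weak())` would fail to compile here, because
// Handle<ColorMaterial> is not a Component. Wrapping it first does compile.
fn highlight(material: &Handle<ColorMaterial>) -> MeshMaterial2d<ColorMaterial> {
    insert(MeshMaterial2d(material.clone_weak()))
}
```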

I repost this most months just trying to remind myself.

nostr:note1cef7a83l7z74f0c0ff27kted4ehhqdtgtjcre8xqfy62m6pyj9fqy46wqq

nostr:note1hy4n54824v20f8k7drpq5y5llt3cu078fvqm62pq2yn8gwxe6gms7q6gz8

Not when I want to run it efficiently in my free cloud resources. But I'll do that anyway.

The Will AKA Zap Father AKA Doctor Manhattan, is coming for you and your notes. Keep yourself and your notes safe. Install #haven today! https://nostrcheck.me/media/3f770d65d3a764a9c5cb503ae123e62ec7598ad035d836e2a810f3877a745b24/84d4bc501f73ac9ceb71052f19b861ea4d93891649bd614b4fbe6a79a7ff4e5b.webp

It's really too bad they took away the docker option. Do you happen to know why?

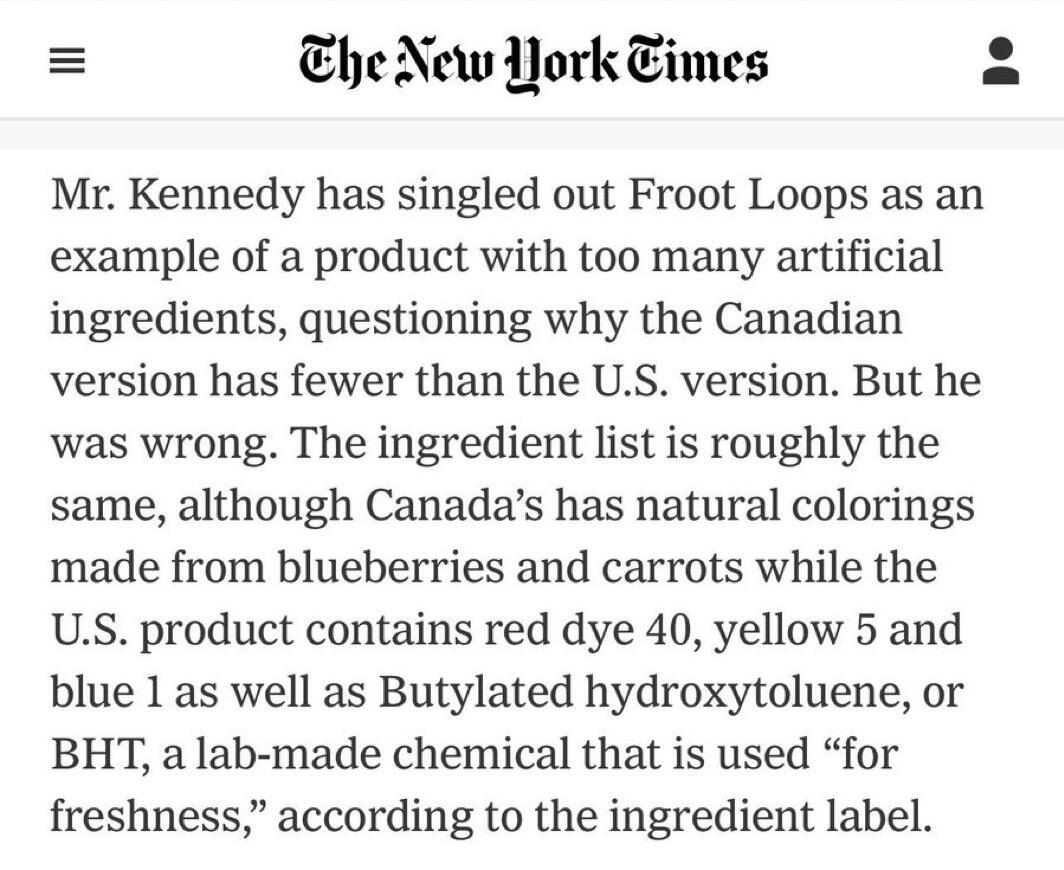

Sure, but that wasn't the question. The question was "why does the Canadian version have fewer artificial ingredients than the American version?"

But they can't just leave it at that. They have to use a rhetorical trick to make you think the question was different, then prove that the question that wasn't asked was leading you to a false conclusion.

This was the NY Times "Fact Checking" RFK Jr., in case you care.

And they wonder why the press has lost credibility?

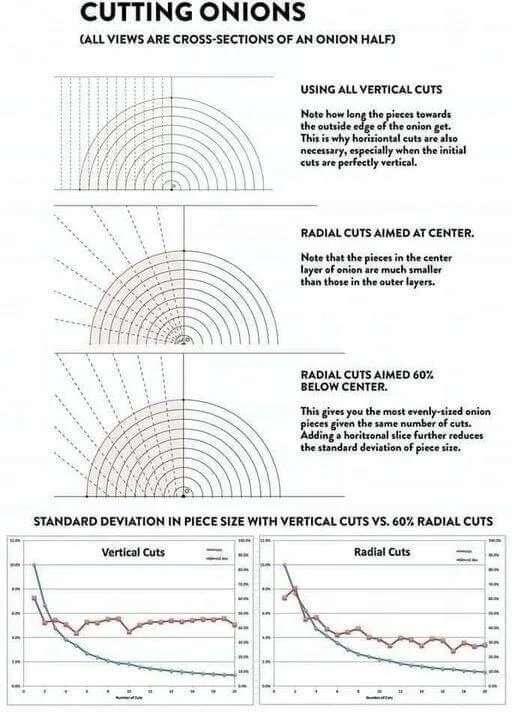

OK, is that 60% of the radius of the original onion? I think so, but I'm not absolutely sure, and I need to know...