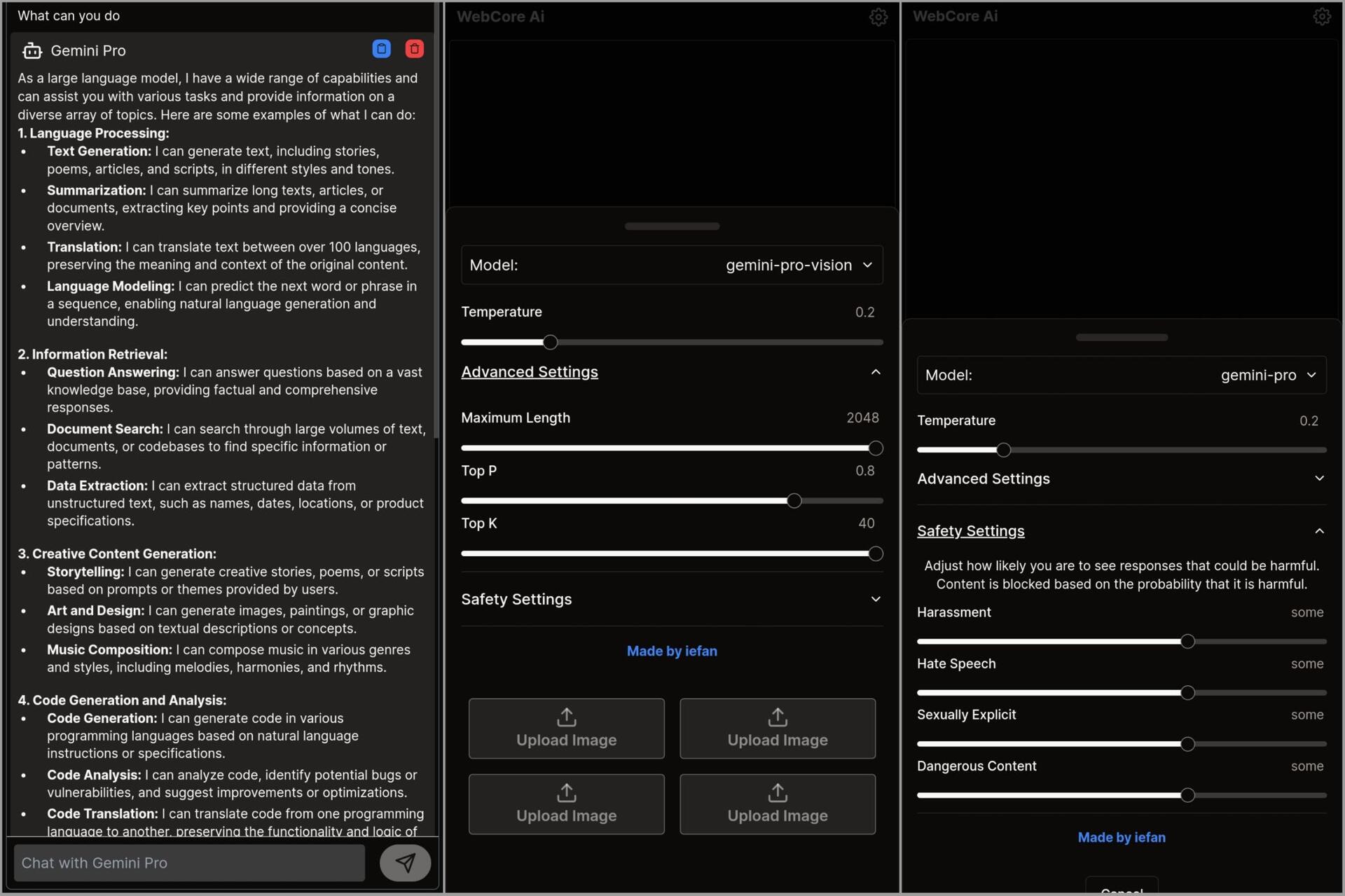

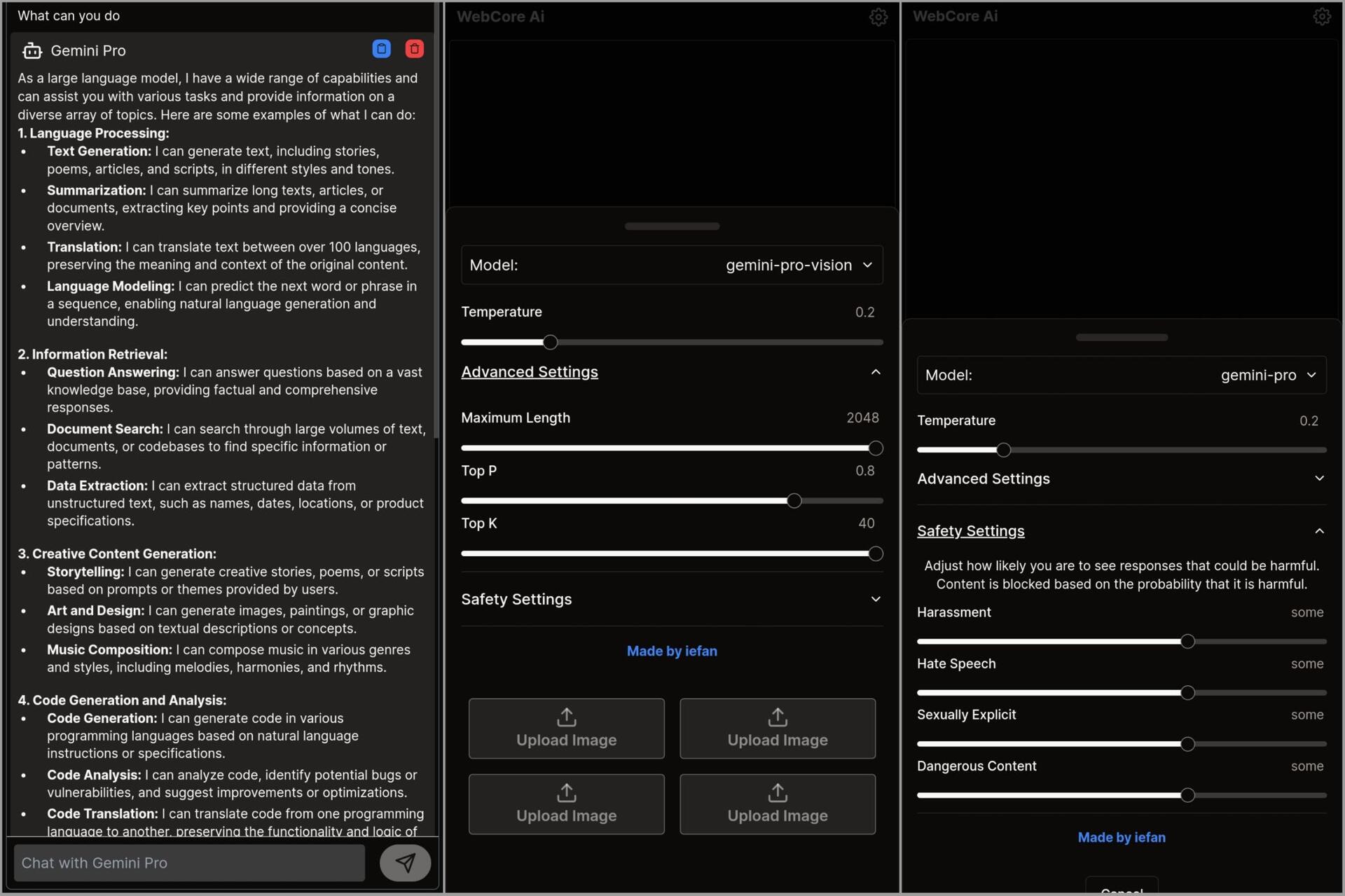

That will be really cool. I'm also integrating Gemini into NostrNet with fine-grained control and a proper user interface.

Not everybody wants their client talking to Google (or OpenAI, etc.). It needs to at least have an off switch.

I have something even more fascinating. It's still underdeveloped, but it definitely has potential.

In-device models can take care of private events. But otherwise, I am not sure they are that interesting for public events. Constantly updating the local model to match the newest events coming in online is challenging.

I was attempting that, and it's quite challenging. So, I will be working on it with Gemini.

This will focus on private tasks like TTS, PDF summarization, basic Q&A, etc., using small, task-specific language models with WebGPU.

It would be nice if we could set the address of an OpenAI API-compatible LLM to give users a choice.

this tbh
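
A rough sketch of how the off switch and the user-configurable endpoint suggested above could fit together. This is not NostrNet's actual code: `LlmSettings`, `ChatRequest`, and `buildChatRequest` are hypothetical names, and the base URL is a placeholder. The only thing assumed about the server is that it exposes the usual OpenAI-style `POST {baseUrl}/chat/completions` route.

```typescript
// Hypothetical settings shape; none of these names come from NostrNet.
interface LlmSettings {
  enabled: boolean; // the "off switch": when false, no request is ever built
  baseUrl: string;  // user-chosen endpoint, e.g. a self-hosted server ending in /v1
  apiKey?: string;  // optional; some self-hosted servers do not need one
  model: string;
}

interface ChatRequest {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

// Builds the request for an OpenAI-compatible chat completion,
// or returns null when the user has turned AI features off.
function buildChatRequest(settings: LlmSettings, prompt: string): ChatRequest | null {
  if (!settings.enabled) return null;

  // Drop trailing slashes so we never produce "//chat/completions".
  const base = settings.baseUrl.replace(/\/+$/, "");

  const headers: Record<string, string> = { "Content-Type": "application/json" };
  if (settings.apiKey) headers["Authorization"] = `Bearer ${settings.apiKey}`;

  return {
    url: `${base}/chat/completions`,
    init: {
      method: "POST",
      headers,
      body: JSON.stringify({
        model: settings.model,
        messages: [{ role: "user", content: prompt }],
      }),
    },
  };
}
```

The caller would then do something like `const req = buildChatRequest(settings, text); if (req) await fetch(req.url, req.init);`, so with the switch off nothing leaves the client.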