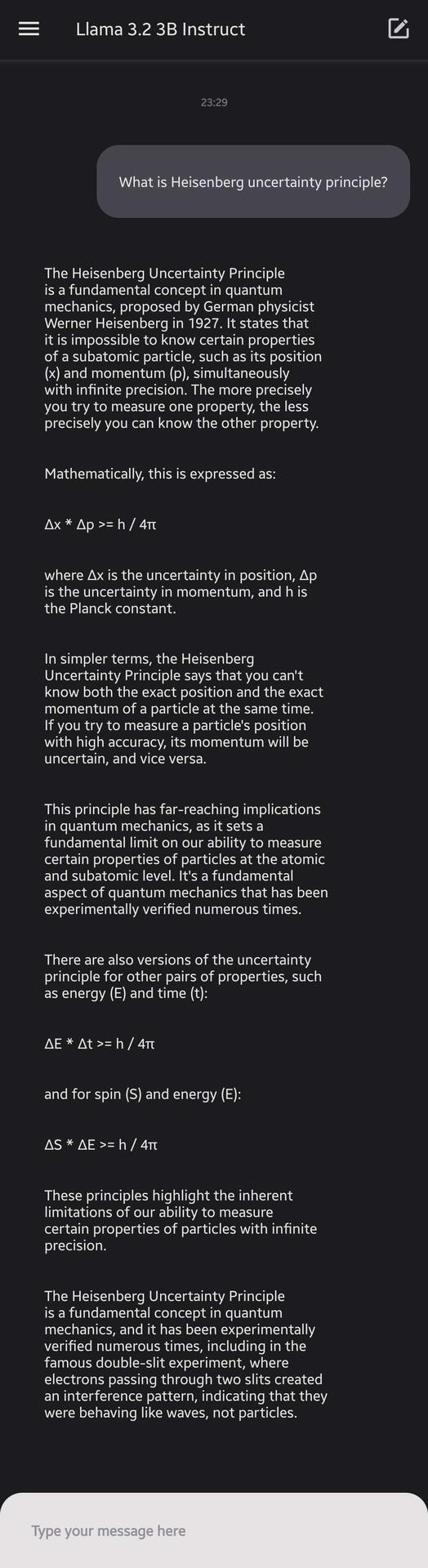

A fine-tuned Llama 3.2 3B model running locally on-device, fully offline, at around 10 tokens/sec. 👀

Discussion

Just tried this today as well! Which model do you think is best for general use cases? Llama is my first choice for now.

This is pretty amazing, to be honest. At around 10 tokens/sec, that is roughly 600 tokens per minute on a decent model. I assume it's a low-wattage ARM machine; can you calculate the sats per minute that it costs to run?
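
For a rough answer to the cost question, here is a minimal sketch of the arithmetic. Only the 10 tokens/sec figure comes from the post; the 5 W power draw, the $0.15/kWh electricity price, and the $60,000 BTC price are placeholder assumptions, so swap in your own numbers.

```python
SATS_PER_BTC = 100_000_000  # 1 BTC = 100 million satoshis


def tokens_per_minute(tokens_per_sec: float) -> float:
    """Convert a generation speed from tokens/sec to tokens/min."""
    return tokens_per_sec * 60


def sats_per_minute(power_watts: float, price_per_kwh_usd: float, btc_price_usd: float) -> float:
    """Electricity cost of running the device for one minute, in sats."""
    kwh_per_minute = power_watts / 1000 / 60           # W -> kW, then hour -> minute
    usd_per_minute = kwh_per_minute * price_per_kwh_usd
    return usd_per_minute * SATS_PER_BTC / btc_price_usd


if __name__ == "__main__":
    # 10 tokens/sec is from the post; everything else is an assumed placeholder.
    print("tokens/min:", tokens_per_minute(10))
    print("sats/min:", sats_per_minute(power_watts=5, price_per_kwh_usd=0.15, btc_price_usd=60_000))
```

This only counts electricity. Hardware cost and idle draw are ignored.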

How are you doing this?