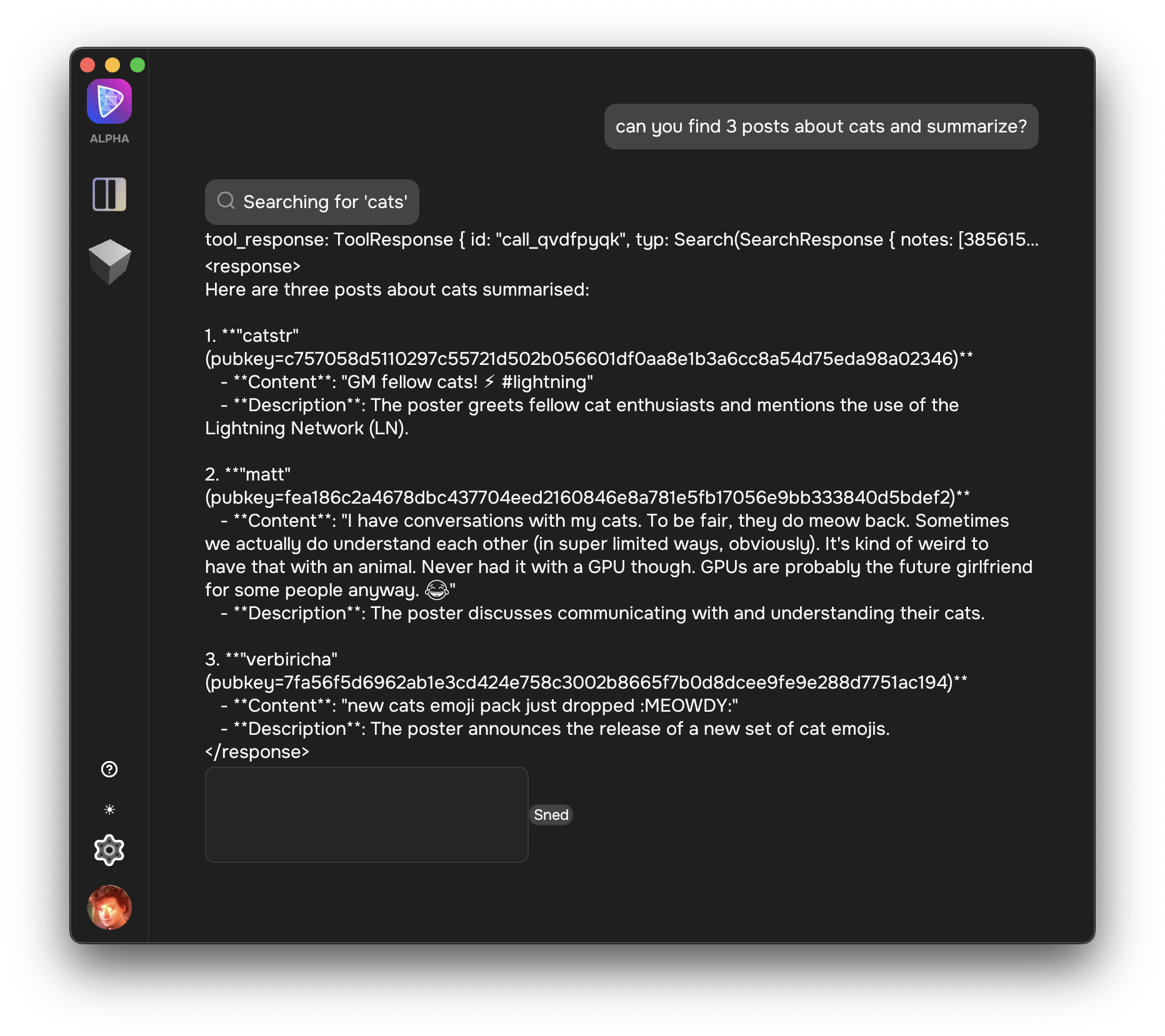

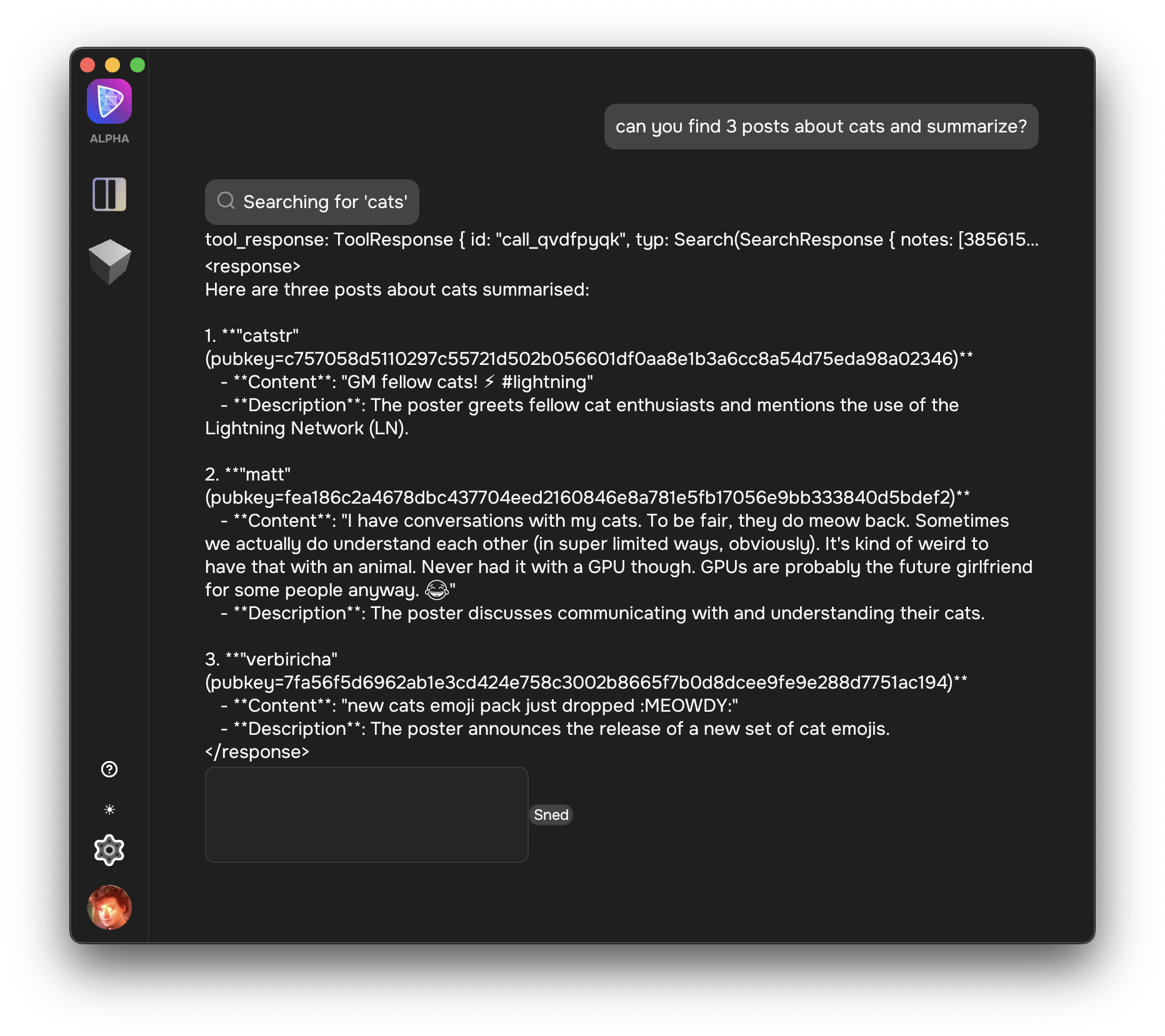

still working on the response rendering, but was able to get dave working with my wireguard ollama instance (on a plane!)

private, local, nostr ai assistant.

That’ll be dope! I’m sick of OpenWebUI