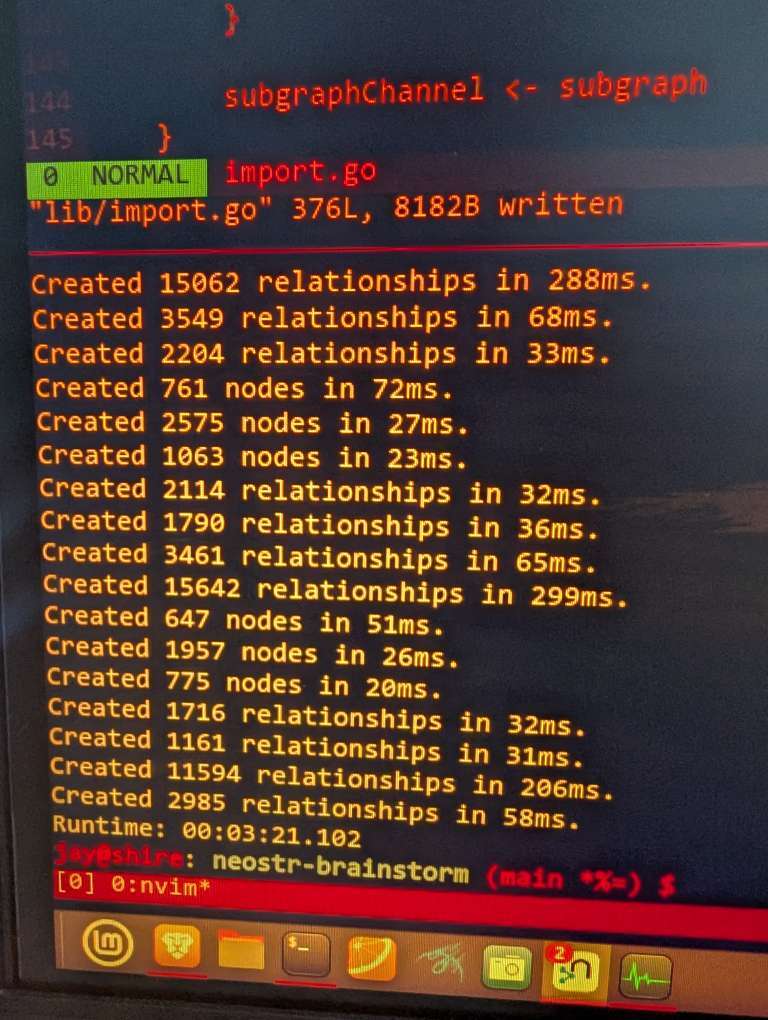

You loaded 2.2M nodes and 5.6M edges into neo4j in 3 minutes??!?!? 🤯

Discussion

Yeah haha. Each batch transaction is in the ms range, but that's because there's some very good presorting going on and the right indexes. Right now my repo is just on my private server, but I'll mirror it on GitHub too 👍
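For readers wondering what "presorting plus batching" could look like, here is a minimal sketch. It is not the code from the repo mentioned above: the Cypher template, the labels, the property names `pubkey` and `event_id`, the relationship direction, and the batch size are all assumptions made for illustration.

```python
from itertools import islice
from typing import Iterable, Iterator

# Hypothetical Cypher template. UNWIND lets a single transaction write a
# whole batch of rows. Labels, properties and direction are assumptions.
SIGNED_QUERY = (
    "UNWIND $rows AS row "
    "MERGE (u:User {pubkey: row.pubkey}) "
    "MERGE (e:Event {id: row.event_id}) "
    "MERGE (u)-[:SIGNED]->(e)"
)


def presorted_batches(
    rows: Iterable[dict], batch_size: int = 10_000
) -> Iterator[list[dict]]:
    """Sort rows by their lookup keys, then yield fixed-size batches."""
    # Sorting keeps rows that touch the same node next to each other.
    ordered = sorted(rows, key=lambda r: (r["pubkey"], r["event_id"]))
    it = iter(ordered)
    while batch := list(islice(it, batch_size)):
        yield batch
```

With the official Neo4j Python driver, each batch would then go out as one call along the lines of `session.run(SIGNED_QUERY, rows=batch)`, and an index or uniqueness constraint on the matched properties is presumably what keeps each `MERGE` lookup cheap.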

How many edge types are you currently maintaining? FOLLOW, MUTE, REPORT? Those are a tiny bit more complicated because you’re not just adding data; you sometimes have to remove relationships too. Whether that impacts the efficiency of the database updates, I don’t know.

Only very base spec stuff so far, with no abstractions like replies or quotes and such:

User -SIGNED- Event

Event -TAGGED- Tag

Event -REFERENCES- Event

Event -REFERENCES- User
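To make the four edge types concrete, here is a rough sketch of how one Nostr event might be turned into edges. The event fields (`id`, `pubkey`, `tags`) follow the base event format, but the mapping itself is a guess: it assumes `e` tags become Event-to-Event references, `p` tags become Event-to-User references, and every other tag becomes a TAGGED edge. The function name and the tuple layout are hypothetical.

```python
Edge = tuple[str, str, str, str, str]  # (src label, src key, rel, dst label, dst key)


def edges_from_event(event: dict) -> list[Edge]:
    """Return the edges implied by one event under the assumed mapping."""
    event_id = event["id"]
    edges: list[Edge] = [("User", event["pubkey"], "SIGNED", "Event", event_id)]
    for tag in event.get("tags", []):
        if len(tag) < 2:
            continue  # skip malformed tags that carry no value
        name, value = tag[0], tag[1]
        if name == "e":
            # Assumption: "e" tags point at another event id.
            edges.append(("Event", event_id, "REFERENCES", "Event", value))
        elif name == "p":
            # Assumption: "p" tags point at a user's pubkey.
            edges.append(("Event", event_id, "REFERENCES", "User", value))
        else:
            # Assumption: all remaining tags become generic Tag nodes.
            edges.append(("Event", event_id, "TAGGED", "Tag", f"{name}:{value}"))
    return edges
```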

I'll have to handle removals eventually too, but only in relation to ephemeral and addressable events. You have to understand that I'm approaching this from a completely different direction. I'm not going to base anything in the web of trust calculation on follows or mutes, and the calculation will be locally incremental rather than global like PageRank. I probably won't even need graph data science for the MVP.

PageRank can be calculated globally or personalized, and personalized PageRank is what I’m calculating at grapevine-brainstorm.vercel.app

GrapeRank likewise can be personalized or global, and personalized is the way to go.

Personalized means that one node (or, in theory, more than one) is assigned privileged status. The idea behind using centrality algos for a personalized WoT relay is that each algo will typically be personalized using the end-user’s npub.
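As an illustration of what "privileged status" means in practice, here is a small textbook-style personalized PageRank in plain Python. It is only a sketch and not the implementation behind the site mentioned above: the function name, the damping factor of 0.85, the iteration count, and the toy graph are all assumptions. The only difference from global PageRank is that the random jump always lands back on the seed nodes.

```python
def personalized_pagerank(
    graph: dict[str, list[str]],
    seeds: set[str],
    damping: float = 0.85,
    iterations: int = 50,
) -> dict[str, float]:
    """Power iteration where teleportation returns only to the seed nodes."""
    nodes = set(graph) | {t for targets in graph.values() for t in targets} | set(seeds)
    # The teleport vector is what makes the result "personalized".
    teleport = {n: (1.0 / len(seeds) if n in seeds else 0.0) for n in nodes}
    rank = dict(teleport)
    for _ in range(iterations):
        nxt = {n: (1.0 - damping) * teleport[n] for n in nodes}
        for n in nodes:
            targets = graph.get(n, [])
            if targets:
                share = damping * rank[n] / len(targets)
                for t in targets:
                    nxt[t] += share
            else:
                # Dangling node: hand its rank back to the seeds.
                for s in seeds:
                    nxt[s] += damping * rank[n] / len(seeds)
        rank = nxt
    return rank


# Toy follow graph; "alice" plays the role of the end-user's npub.
toy_graph = {"alice": ["bob", "carol"], "bob": ["carol"], "carol": ["alice"], "dave": ["alice"]}
scores = personalized_pagerank(toy_graph, {"alice"})
```

In the toy graph, `dave` follows `alice` but nobody reachable from `alice` points at `dave`, so his score stays at zero from her point of view. A global PageRank would give him a small nonzero score.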

I would classify your trust score calculations as personalized centrality algorithms, if I understand correctly what you’re doing.

I want to see LOTS of different methods used to calculate WoT, and your engagement-based method is one of them. That's why I like the term “webs of trust,” emphasis on the plural: webs, because there are LOTS of ways to calculate WoT. There are different types of “trust,” different applications for the scores we calculate, and different people have different ideas on how to calculate scores for any given application.

I also have a powerful PC, but even on low-end hardware I'd guess the upper bound is about an order of magnitude slower, so roughly 30 minutes.
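For a rough sense of scale, the numbers quoted in this thread work out as follows. This is back-of-the-envelope arithmetic only; it lumps nodes and edges together as "records", which is a simplification.

```python
nodes = 2_200_000
edges = 5_600_000
seconds = 3 * 60  # the 3-minute load reported above

# Combined write rate, treating every node and edge as one record.
records_per_second = (nodes + edges) / seconds

# An order-of-magnitude slowdown on weaker hardware.
slow_minutes = seconds * 10 / 60

print(f"{records_per_second:,.0f} records/s, {slow_minutes:.0f} min at 10x slower")
```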