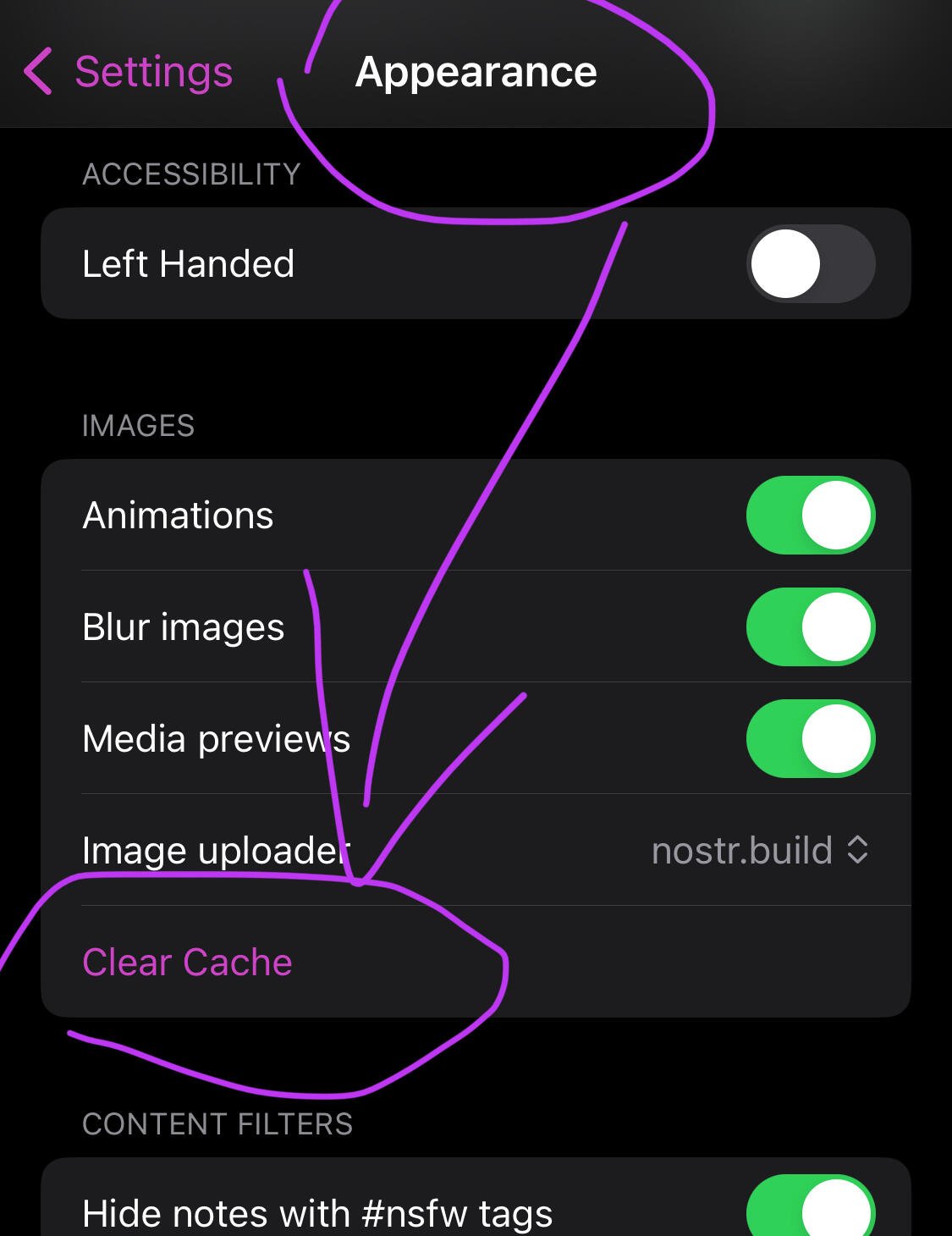

I thought this option existed… but not seeing it now 🤔

Discussion

Appearance under settings 🐶🐾🫡

It doesn’t fix it for me. Settings -> appearance

that's the image cache, it seems to me like it's more likely a problem with the storage backend as a whole

Patches are welcome ser 🙏

It’s likely related to nostrdb, since we had the same issues in the past when it was first rolled out 🐶🐾🫡

📝

#golang maxi here, i refuse to waste one more minute of my life looking at garbage like C, C++, Objective-C, Python, C#, Java or JavaScript

use the only real language or gtfo

Golang is just an abstraction on top of real languages 🐶🐾🤣🫂💪🏻

My career just got run over by nostr:npub1fjqqy4a93z5zsjwsfxqhc2764kvykfdyttvldkkkdera8dr78vhsmmleku 😭

🐶🐾🤣🤣🤣 don’t listen to the abstract devs

Tbf he’s not wrong about C++ 🤣

I’ll go back to reading “The Joy of C” that I found in a box now.

C is the way! When I’m the sole dev, I love C. When it’s a team, C++ is my choice 🐶🐾🫡

I’m so sick of missing out on a proper dependency manager, Fish!! 😭 WHY CAN’T VCPKG JUST NOT BE SHIT!!!

Well, I guess we can live with one deficiency. Dependency-free programming is the way! 🐶🐾🤣💜

i miss the old days of GOPATH, when you could distribute the whole, complete Go source code as one... but there is vendoring, and nothing stopping you from using that; it also has no dependencies except for the Go installation itself

i've written code that bundles a whole dependency graph into a package and just needs to grab the compiler to run ... it's not hard to write compiler driving scripts in Go
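As a rough illustration of a compiler-driving script in Go, the sketch below shells out to the `go` tool: it vendors dependencies with `go mod vendor`, then builds only from the vendor directory with `go build -mod=vendor`. The `buildCommands` helper, the `./out/app` path and the dry-run switch are hypothetical choices for this example, not the poster's code.

```go
package main

import (
	"fmt"
	"os"
	"os/exec"
	"strings"
)

// buildCommands is a hypothetical helper: it lists the go tool invocations for
// a self-contained build of the module in the current directory.
func buildCommands(output string) [][]string {
	return [][]string{
		{"go", "mod", "vendor"},                           // copy every dependency into ./vendor
		{"go", "build", "-mod=vendor", "-o", output, "."}, // compile using only ./vendor
	}
}

func main() {
	// Dry run by default; pass "run" as the first argument to execute the steps.
	execute := len(os.Args) > 1 && os.Args[1] == "run"
	for _, args := range buildCommands("./out/app") {
		if !execute {
			fmt.Println(strings.Join(args, " "))
			continue
		}
		cmd := exec.Command(args[0], args[1:]...)
		cmd.Stdout, cmd.Stderr = os.Stdout, os.Stderr
		if err := cmd.Run(); err != nil {
			fmt.Fprintln(os.Stderr, "build step failed:", err)
			os.Exit(1)
		}
	}
}
```

Once `./vendor` is populated, the only thing the build needs is the Go toolchain itself.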

I’ve read his opinion, and I don’t necessarily agree with it 100%. The most powerful tools are the most dangerous and require careful design and approach. 🐶🐾🫡

Kinda came down to the same for me, but Linus has a point. I’ve seen some really clean C++, but the majority of it… it gives me nightmares.

Yes! Hence one needs to be careful how they apply a tool 🐶🐾🫡

indeed, but there is no way to properly use stupid tools like objects; programs are active, not passive, things... the less imperative a language is, the easier it is to write overly abstract, inefficient code that takes the compiler forever to figure out

go is far more concrete than your silly objects

🐶🐾🤣🤣🤣

Beginning to sound like someone who’ll be into Haskell in a few years.

haha, don't get me started on that silly memory copy insanity of the pure function

it's at the centre of the debate between GC, malloc and insane shit like the Rust borrow checker, but i think that, as Go and Java both use GCs and are both within spitting distance of C/C++ performance, the problems of GC only exist in people's imaginations, or for purposes like operating system kernels and embedded systems with almost no memory

My applications are hard real time embedded applications, which pretty much eliminates GC’d languages from the running for work. It’s an alien world compared to 99% of what goes on around here so I’m sure I sound like an idiot.

C# is still one of my favorite languages for non-real time stuff (not sorry) but I am very attracted to Go for the goroutines. Seems like a really nice approach to parallelism. The static linking is cool too, unless you’re working on something proprietary (GPL hell).

yep, first-class goroutines and channels, plus functions as values, are the three features that make me addicted to go, it's a whole different way of thinking
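To show those three features working together, here is a minimal pipeline sketch (the `stage` type and `run` helper are illustrative names, not from any library): each stage is a plain function value, each runs in its own goroutine, and consecutive stages are connected by channels.

```go
package main

import "fmt"

// stage is a function type; any func(int) int can be used as one.
type stage func(int) int

// run starts one goroutine per stage, connects consecutive stages with
// unbuffered channels, and returns the channel carrying the final results.
func run(in <-chan int, stages ...stage) <-chan int {
	for _, f := range stages {
		out := make(chan int)
		go func(f stage, in <-chan int, out chan<- int) {
			defer close(out) // signal downstream once the input is drained
			for v := range in {
				out <- f(v)
			}
		}(f, in, out)
		in = out
	}
	return in
}

func main() {
	src := make(chan int)
	go func() {
		defer close(src)
		for i := 1; i <= 5; i++ {
			src <- i
		}
	}()

	double := func(x int) int { return x * 2 }
	inc := func(x int) int { return x + 1 }
	for v := range run(src, double, inc) {
		fmt.Println(v) // prints 3, 5, 7, 9, 11, one per line
	}
}
```

Because each stage is a single goroutine reading in order, results come out in the same order the inputs went in.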

almost no other languages have coroutines at all and none have them as low level syntax; maybe you could do it with operator overloading in C++ but that shit is messed up

unfortunately the GC and CSP kinda go hand in hand, but you can in fact turn off the GC and write code to manage memory manually; many network handlers in Go are written with allocate-once freelists, and you can actually do this for a whole application
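A minimal sketch of both ideas, assuming a fixed buffer size: `debug.SetGCPercent(-1)` switches the collector off at runtime (the same effect as running with `GOGC=off`), and a buffered channel serves as an allocate-once free list of byte buffers. The names `freeList`, `getBuffer`, `putBuffer` and `bufSize` are hypothetical.

```go
package main

import (
	"fmt"
	"runtime/debug"
)

const bufSize = 4096

// freeList holds reusable buffers; a buffered channel makes it safe to share
// between goroutines without an explicit mutex.
var freeList = make(chan []byte, 64)

// getBuffer reuses a buffer from the free list, or allocates one if it is empty.
func getBuffer() []byte {
	select {
	case b := <-freeList:
		return b
	default:
		return make([]byte, bufSize)
	}
}

// putBuffer returns a buffer to the free list; if the list is full the buffer
// is simply dropped (and left for the GC, if it is enabled).
func putBuffer(b []byte) {
	select {
	case freeList <- b[:bufSize]:
	default:
	}
}

func main() {
	// A negative percentage disables the garbage collector; the previous
	// setting is returned so it can be restored afterwards.
	prev := debug.SetGCPercent(-1)
	defer debug.SetGCPercent(prev)

	b := getBuffer()
	copy(b, "hello")
	putBuffer(b)
	b2 := getBuffer()           // same backing array, no new allocation
	fmt.Println(string(b2[:5])) // prints hello
}
```

With the GC off, anything not recycled through the free list is never reclaimed, so this only pays off when the allocation pattern is genuinely fixed.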

as it is, i personally avoid using the compact declaration assignment operator (`:=`), as many people use it inside if and for blocks without thinking about whether it makes sense to allocate yet another value on the stack when there already is one with the same name, and scope shadowing can hide some bad bugs
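Here is a small illustration of the shadowing bug described above. `parse` is a made-up helper that fails on empty input: in `buggy`, `:=` inside the loop declares a fresh `err`, so the function's own `err` result is never set, while `fixed` assigns with `=` and reports the failure.

```go
package main

import (
	"errors"
	"fmt"
)

// parse is a hypothetical helper that rejects empty input.
func parse(s string) (int, error) {
	if s == "" {
		return 0, errors.New("empty input")
	}
	return len(s), nil
}

// buggy: := declares new n and err inside the loop, shadowing the result err.
func buggy(inputs []string) (total int, err error) {
	for _, s := range inputs {
		n, err := parse(s)
		if err != nil {
			break // leaves the loop, but the outer err is still nil
		}
		total += n
	}
	return total, err
}

// fixed: n is declared separately and = assigns to the outer err.
func fixed(inputs []string) (total int, err error) {
	for _, s := range inputs {
		var n int
		n, err = parse(s)
		if err != nil {
			break
		}
		total += n
	}
	return total, err
}

func main() {
	inputs := []string{"ab", "", "cde"}
	_, err := buggy(inputs)
	fmt.Println(err) // prints <nil>
	_, err = fixed(inputs)
	fmt.Println(err) // prints empty input
}
```

Both versions compile cleanly, which is exactly why this kind of bug slips through review; `go vet` with a shadow analyzer can help flag it.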

I’ll look into GC’less golang tomorrow. You’ve given me an interesting rabbit hole to go down…

yeah, there is also a variant, tinygo https://tinygo.org/, which supports almost everything in go and lets you build with a minimal GC or none at all; the main point is it still lets you use goroutines, even on one core (with channels as well, of course)
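To illustrate that goroutines and channels still work when only one thread runs Go code, here is a minimal ping-pong sketch. It is written for the standard Go toolchain with `runtime.GOMAXPROCS(1)`; whether it runs unchanged under TinyGo is an assumption that has not been checked here, and `pingPong` is a hypothetical name.

```go
package main

import (
	"fmt"
	"runtime"
)

// pingPong bounces n values between two goroutines over unbuffered channels
// and returns the replies in order.
func pingPong(n int) []int {
	ping := make(chan int)
	pong := make(chan int)
	go func() {
		defer close(pong)
		for v := range ping {
			pong <- v + 1 // reply on the other channel
		}
	}()
	replies := make([]int, 0, n)
	for i := 0; i < n; i++ {
		ping <- i * 10
		replies = append(replies, <-pong)
	}
	close(ping)
	return replies
}

func main() {
	runtime.GOMAXPROCS(1) // at most one OS thread runs Go code at a time
	for _, v := range pingPong(3) {
		fmt.Println(v) // prints 1, 11, 21, one per line
	}
}
```

Each unbuffered send blocks until the other side receives, so the scheduler hands control back and forth between the two goroutines even on a single core.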

lol

I’m a Python data-jockey currently learning Golang. Afaik Go does not compile to some other language or run on an interpreter the way Python does (CPython compiles to bytecode for an interpreter written in C)… it compiles down to native machine code.

It is garbage collected, however… so while I understand C or Rust “maximalists”, the concept of a “Go maximalist” is kind of funny 🤣

garbage collection has to be done one way or another: if you don't free after you malloc in C/C++, you can blow up, end up out of memory and get force-killed by the kernel

coroutine scheduling only runs a background process when you are running on more than one kernel thread because it tries to parallelise as much as possible

this is another deficiency of go: if you need to do bulk compute, it's better to refactor your processing unit into an independent process and coordinate the processes over IPC. i have done this too, and the difference is about 20% for heavy compute-bound processing (it was a crypto miner). vanity address mining is another example of work that benefits from this in Go, whereas languages with explicit access to kernel thread control can do it natively

Thanks. I am early in learning Go but definitely liking it so far, and I respect the tradeoffs they have made to keep the language simple and performant.

I watch/read a lot of tech content that discusses language features & limitations, but at the end of the day I’m still only an expert in Python and SQL. And even then, just the data wrangling areas of Python.

I imagine a good data stack being Go for networking and Extract/Load jobs, and then dbt/SQL for data modeling. Probably don’t even need Python until you get into AI/ML.

it truly boggles my mind that python is the AI/ML language when it's literally 10x slower than Go/C/etc

LLMs need to be written in C also, really, so they can be compiled for GPU processing, because they are built from special types of hash collision searches

Most python sci/ml libs use C underneath for tensor and gpu stuff, python is just used as the ergonomic layer when coding.

"ergonomic"

Bro… if you hate it so much, just ship something that competes

nostr:note10fuyem3jl85nnfmanktqumgapcv3k4947du659dq0yme4nav45fqgacphc

a man has to economize his time... bigger fish than AI/ML #nostr for one thing needs a lot more work to become a really solid protocol, what should i focus on?

i have never been that interested in machine learning anyway; if cryptographic hash functions are involved, a much more useful way for me to spend my time would be finding a way to make lightning payments able to path route

AI/ML is built off hash functions and has a lot in common with compression algorithms as well, it's interesting but not as important and the hype around it is artificial, just like the product itself, it will fade again

these technologies eventually find their use cases and they are usually not as world changing as early promoters make it seem

It's way more than 10x slower than C. It's more like 1,000-10,000x slower.

Guys… you know all the actual data crunching stuff in these ML Python libs are not IMPLEMENTED in Python, right? 🤣

Python for the easy APIs, and leveraging the robust ecosystem of data libs. Most of the hardcore performance critical stuff is implemented in C or C++, with a strong trend towards Rust for newer projects (see Polars).

Not too different from all the Go libs which use C code. And, apparently, many of those are using the Zig compiler 😂

Yes and I don't like it, makes everything an over complicated shit show.

Yes and no. No question, it makes builds, requirements, and environments more complicated. But it greatly simplifies the process for API end users (the data scientist or analytics engineer).

These ppl tend to have deep expertise in how to use/interpret the ML models, or in general data transformations/modeling, but not in low level computer science. I am in this camp, but I am learning more about the lower level CS.

Outside of the Python ecosystem, the only thing that comes close, wrt data science tooling, is Scala. And then you have to deal with Java bullshit 😂

If Golang, or some other “simple” lower level language (Mojo??) can step up to the plate in data tooling, then I’m sure the industry will follow.

i'm all about the low level CS stuff: cryptography, protocols, concurrency. AI i just don't really care about, frankly, i don't see the use in it. all the stuff i've seen AI do is garbage, because it takes a human brain to recognise quality inputs, and thus most of what is in the models is rubbish

the hype around ML with regard to programming is way overblown, GPTs generate terrible, buggy code

about the only use i see for them might be in automating making commit messages that don't attempt to impute intent and for improving template generation

outside of that, it's really boring, you can instantly tell when the text is AI generated and when it's an AI generated image, there is no way it is ever going to get better than the lowest common denominator and everyone is determined to learn that the hard way

There’s an entire field of data science and analytics, much of which leverages ML, which has absolutely nothing to do with “AI” or LLMs

i'm aware of this, but much of it works on the same basic algorithms, proximity hashes and neural networks... GPT is just one way of applying it

what's most hilarious about the hype over GPT (Generative Pre-trained Transformer) is that it's literally generative predictive text

it's literally spinning off on its own a prediction of what might follow the prompt, anyone used a predictive text input system? yeah... they are annoying as fuck because they don't actually predict very well anything and certainly not with your personal idiom

Also much of it, in fact most of it, does not work on neural networks or other “black box” unsupervised models.

The most common uses of ML for a business or researcher are correlation, categorization, and recommendation systems, or some form of forecasting/predictive modeling (I probably missed some). None of these require a neural net, or anything that resembles what we commonly call “AI”.

yeah, and much of it is calculus based, i've done a lot of work with difficulty adjustment which uses historical samples to create close estimates of the current hashpower running on a network

i'm not a data scientist though; my area of specialisation is more about protocols and distributed consensus, and the latter (including spam prevention) tends to involve statistical analytical tools

That’s cool. I am working as a data analyst in the Bitcoin mining world (happy to disclose where outside of Nostr). I’ve also done a lot of work with on-chain data, pool shares, and things related to hashrate.

Would love to look at your work on the ‘close hashrate estimates’ if it’s open source!

well, dynamic difficulty adjustment is pretty much ... hmmm, not really sure. it's not something that needs new tools, and it only came up because i happened to be working for a shitcoin project that was building a hybrid proof of work/proof of stake algorithm, and the CTO happened to be a trained physicist who noticed that difficulty adjustment was like a device he was familiar with from his work in physics: the PID controller

PID stands for Proportional-Integral-Derivative, and it applies a gain parameter to each of those terms, computed over a historical sample of data points, to adjust a system (usually a linear parameter) to the inputs it's getting
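For reference, the textbook discrete form of a PID controller is below. Here $e_k$ is the error at step $k$, $\Delta t$ the sampling interval, $u_k$ the control output, and $K_p$, $K_i$, $K_d$ the tunable gains. For difficulty adjustment one could take $e_k$ to be the relative gap between the target and observed block interval; that choice is an assumption for illustration, not necessarily what the poster used.

$$
u_k = K_p\,e_k + K_i \sum_{j=0}^{k} e_j\,\Delta t + K_d\,\frac{e_k - e_{k-1}}{\Delta t}
$$

The proportional term reacts to the current error, the integral term removes persistent offsets, and the derivative term reacts to how fast the error is changing (which is also why it amplifies noise).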

in my experimenting with it, i built a simulator that tested parameters for P, I and D, and i found it was possible either to adjust smoothly, or to be more accurate at the cost of a high noise component
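A simulator like the one described might start from something like this minimal, noise-free sketch. It is a toy model under stated assumptions, not the poster's simulator: block time is modeled as `difficulty / hashrate` in arbitrary units, hashrate doubles at the start, and after each block the difficulty is scaled by `1 + u` where `u` is the PID output for the relative timing error. `PID`, `simulate` and the gain values are hypothetical.

```go
package main

import "fmt"

// PID is a textbook discrete PID controller with a sampling interval of 1 block.
type PID struct {
	Kp, Ki, Kd float64
	integral   float64
	prevErr    float64
}

// Update returns the controller output for the current error.
func (c *PID) Update(err float64) float64 {
	c.integral += err
	deriv := err - c.prevErr
	c.prevErr = err
	return c.Kp*err + c.Ki*c.integral + c.Kd*deriv
}

// simulate runs the toy model for the given number of blocks and returns each
// block's time, so different gain settings can be compared.
func simulate(c *PID, blocks int) []float64 {
	const target = 600.0 // target seconds per block
	hashrate := 2.0      // doubled from 1.0, so blocks initially arrive twice as fast
	difficulty := 600.0  // tuned for the old hashrate of 1.0
	times := make([]float64, 0, blocks)
	for i := 0; i < blocks; i++ {
		t := difficulty / hashrate
		times = append(times, t)
		err := (target - t) / target // positive when blocks come too fast
		difficulty *= 1 + c.Update(err)
	}
	return times
}

func main() {
	for _, c := range []*PID{{Kp: 0.5}, {Kp: 0.9}, {Kp: 0.5, Ki: 0.05, Kd: 0.2}} {
		times := simulate(c, 50)
		fmt.Printf("Kp=%.2f Ki=%.2f Kd=%.2f first=%.0fs last=%.1fs\n",
			c.Kp, c.Ki, c.Kd, times[0], times[len(times)-1])
	}
}
```

Because the model is noise-free, it only shows how quickly each setting converges; seeing the smoothness-versus-noise trade-off would require random (for example exponentially distributed) block times.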

i've since read a little more about how to work with these things and learned that the derivative can help a lot, but in my tests it just added noise. the trick was to apply a low-pass filter to cut the high frequencies out, and that would probably allow it to adjust to changes faster without adding the noise that the P and I factors create when tweaked for fast adjustment
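Cutting high frequencies out of the derivative term is usually done with a low-pass filter. Here is a minimal sketch using a first-order low-pass filter (an exponential moving average) on the sample-to-sample difference; the `FilteredDerivative` type and its `Alpha` parameter are illustrative assumptions, not the poster's implementation.

```go
package main

import "fmt"

// FilteredDerivative estimates the derivative of a noisy signal and smooths it
// with a first-order low-pass filter (an exponential moving average).
// Alpha is in (0, 1]: smaller values cut more high-frequency noise but react slower.
type FilteredDerivative struct {
	Alpha   float64
	prev    float64
	value   float64
	started bool
}

// Update feeds the next sample and returns the filtered derivative.
func (f *FilteredDerivative) Update(x float64) float64 {
	if !f.started {
		f.prev, f.started = x, true
		return 0 // no derivative until there are two samples
	}
	raw := x - f.prev
	f.prev = x
	f.value += f.Alpha * (raw - f.value)
	return f.value
}

func main() {
	d := &FilteredDerivative{Alpha: 0.2}
	for k := 0; k < 10; k++ {
		noise := 0.5
		if k%2 == 1 {
			noise = -0.5
		}
		x := float64(k) + noise // ramp with slope 1 plus alternating noise
		fmt.Printf("sample=%5.2f filtered derivative=%6.3f\n", x, d.Update(x))
	}
}
```

The raw difference in this example swings between roughly -0.5 and 2.5, while the filtered value settles toward the underlying slope of 1 with a much smaller ripple.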

the fact is that the bitcoin difficulty adjustment is actually sufficient for the task, and due to its simplicity is preferable, but i could write a dynamic adjustment that is resistant to timewarp attacks and would reduce the amount of variance of solution times
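For comparison, the shape of Bitcoin's rule can be sketched in a few lines: every 2016 blocks, difficulty is scaled by the expected two-week timespan divided by the actual timespan, with the actual timespan clamped to a factor of 4 either way. This sketch simplifies on purpose: it uses a float difficulty rather than 256-bit targets and ignores details such as the compact target encoding and the proof-of-work limit. `retarget` is a hypothetical name.

```go
package main

import "fmt"

const (
	blocksPerRetarget = 2016
	targetSpacing     = 600                               // seconds per block
	targetTimespan    = blocksPerRetarget * targetSpacing // two weeks in seconds
)

// retarget scales difficulty by expected/actual timespan, limiting the change
// to at most 4x up or down per period.
func retarget(oldDifficulty float64, actualTimespan int64) float64 {
	if actualTimespan < targetTimespan/4 {
		actualTimespan = targetTimespan / 4
	}
	if actualTimespan > targetTimespan*4 {
		actualTimespan = targetTimespan * 4
	}
	return oldDifficulty * float64(targetTimespan) / float64(actualTimespan)
}

func main() {
	// 2016 blocks found in 10 days instead of 14.
	fmt.Printf("%.1f\n", retarget(100, 10*24*3600)) // prints 140.0
}
```

Between retargets, nothing reacts to hashrate changes at all, which is the "thermostat that adjusts every 2 weeks" behaviour the thread contrasts with a continuous controller.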

Gotcha. I thought you meant you had developed a more accurate way to estimate total Bitcoin network hashrate than simply deriving it from difficulty and block count per day/week/etc
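The simple derivation mentioned here can be sketched as follows. On Bitcoin, a block at difficulty $D$ takes about $D \cdot 2^{32}$ hashes on average (an approximation that follows from the difficulty-1 target), so hashrate is roughly that figure times the number of blocks found, divided by the window length in seconds. `estimateHashrate` is a hypothetical name, and the input numbers below are illustrative, not real chain data.

```go
package main

import (
	"fmt"
	"math"
)

// estimateHashrate returns approximate network hashes per second, given the
// difficulty and how many blocks were found in a window of the given length.
func estimateHashrate(difficulty float64, blocks int, windowSeconds float64) float64 {
	hashesPerBlock := difficulty * math.Pow(2, 32) // expected work per block
	return hashesPerBlock * float64(blocks) / windowSeconds
}

func main() {
	// Illustrative inputs: difficulty 1e12, 144 blocks in one day.
	h := estimateHashrate(1e12, 144, 86400)
	fmt.Printf("%.3g EH/s\n", h/1e18) // prints 7.16 EH/s
}
```

Short windows make this estimate noisy, because block discovery is random; that noise is the same issue the difficulty-adjustment discussion is trying to smooth out.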

no, i was talking about a dynamic difficulty adjustment, which uses statistical analysis to derive an estimate of the current difficulty target

you got me thinking though... would be quite interesting to create a simple app that just follows the bitcoin chain and demonstrates what a better difficulty adjustment would give you at each block height versus the existing scheme

Yea that would be a cool study. But in practice, I think a more dynamic system like that would be easier for a large miner to game or cause havoc.

well, i have studied the subject pretty closely, i think that if it's properly written it can be better

it's a hard job because it really needs to be as simple as possible

i'd love to do something like this though, just to demonstrate it... easy to capture the data, and you can have an app that derives the system's estimations and shows you the error divergence at each block due to the super simple adjustment scheme, and lets you see several alternative methodologies applied... i think it would be a great educational tool and maybe it would lead to an upgrade of this element of bitcoin protocol due to the clear advantage

it really isn't that complicated... the current system is like a thermostat that adjusts every 2 weeks... advanced adjustment systems have long been settled in other fields of tech, like the segway/hoverboard things... that is exactly this math applied to motion, and it's extremely stable now, ridiculously stable. hell, i remember 14 years ago it was being applied to military jets to improve their maneuverability

There’s also the whole range of analytics use cases that does not need any form of ML. The Python ecosystem has extremely robust tooling that makes this work easy. Rust tooling for dataframes etc is getting there, but it’s not reasonable to expect all analysts to learn Rust.

Does Golang have any libraries for dataframes and ad-hoc analysis that work in something like a Jupyter notebook?

as i mentioned elsewhere, my thing is protocols and distributed consensus, and go is the best language for this kind of work, and it is what the language was built to do, it's just my assertion that it generally makes for better quality code IF PEOPLE FOLLOW THE IDIOM unlike way too many go coders in the bitcoin/lightning/nostr space

😂🤣 building programs and tools with it is a sin