Here is what I learned arguing with people on both sides of the op_return argument. Cunningham's Law in full effect for sure.

1. The Bitcoin blocksize limit is unaffected by the PR. A full archival node is going to grow hard drive storage at up to 4MB every 10 minutes, that number does not change.

2. That 4MB is with maxed out witness data. The base block limit is 1MB, also unchanged.

3. Op_return is base block data while most current arbitrary data schemes store in the larger witness data area.

4. The true limits were always only at the block total level. The total can be made up of any combination of sizes of the sub fields, this is unchanged. My initial assumption on this was backwards. I thought the block limit came from the collection of limits of sub types of data because of my background in networking where that is how the TCPIP packet limits are set. See my incorrect posts earlier where I got this wrong and got corrected.

5. Any "limit" on any particular field size that you set only affects your mempool. This means those limits affect what is in RAM on your node only, not drive space or bandwidth consumption.

6. Your node always validated blocks with any op_return that fits into the base block. This is true of core, libre, and knots. This is why the large op_returns during the dispute did not cause a chain fork even though knots had a limit of 80.

7. More bluntly, nothing changes about what blocks validate. The node runners still have full control over validation and they are not being asked to change validation rules.

8. Only what is carried in mempool will change and no hardware usage changes for nodes.

9. From a TX side, getting nodes to carry the larger op_returns in mempool means they don't have to pay miner accelerator markups. Removing the markup will make op_returns cheaper than the witness data schemes used by most current arbitrary data. This is the entire purpose of the change.

10. Changing op_return to be cheaper than witness data should get arbitrary data users to prioritize using op_return.

11. Witness data cannot be purged from a pruned node without losing economic transactions. Op_returns can be purged in a pruned node, though this may change if future L2s require op_return arbitrary data. That would only affect node runners who wanted to support that L2.

12. 11 means that after the change pruned nodes should have lower hard drive capacity requirements for the same amount of arbitrary data stored on chain.

13. Very slowly for the back of the class. It should be easier for people who don't want to store arbitrary data to not store arbitrary on their node hard drives after the change.

14. Not keeping large op_returns in mempool means you have an incomplete view of who you are bidding against when you set fees for your on chain transactions. Right now this is not a big deal because there aren't many large open_returns. Once there are more, particularly during arbitrary data rushes like the taproot wizards craze, you may wait many blocks after paying what you thought was a next block fee.

15. 14 is most important for lightning where timely automated transactions can be critical such as justice transactions.

16. Mempool has a user set size limit. It drops transactions based on fee. Only the highest fee TXs stay in mempool if mempool size exceeds your limit. This means that storing large op_returns in mempool does not increase RAM requirements for your node.

17. Satoshi stored arbitrary data in op_return not witness data.

So TLDR.

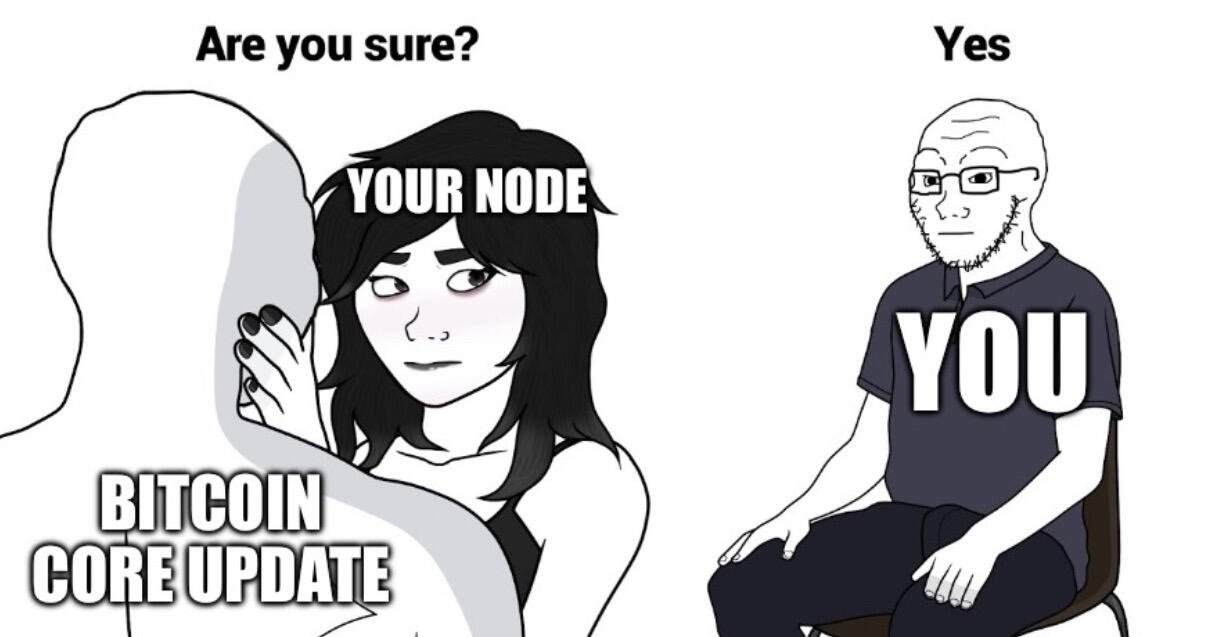

I support the change now. For people who don't want their node resources used for arbitrary data, this makes it easier for you while Knots actually makes it harder. I'll be staying on core and I will be upgrading.

That said, I still think core and the insiders who support this handled it like a bunch of asshats. Pathetic public relations and they need to do much better in the future if they want to be taken seriously. If one person doesn't get it they may be an idiot, if the entire class doesn't get it you are a shitty teacher. Stop condescending and work on your teaching skills.