Very close to having a local llm like mistral embedded in damus notedeck. It will be able to summarize your nostr feed. All local AI. This is so cool.

Discussion

That would be interesting!

7b param model?

Curious about impact to perf of the machine. Will you be benchmarking?

always

What has been the biggest challenge in getting the LLM up and running?

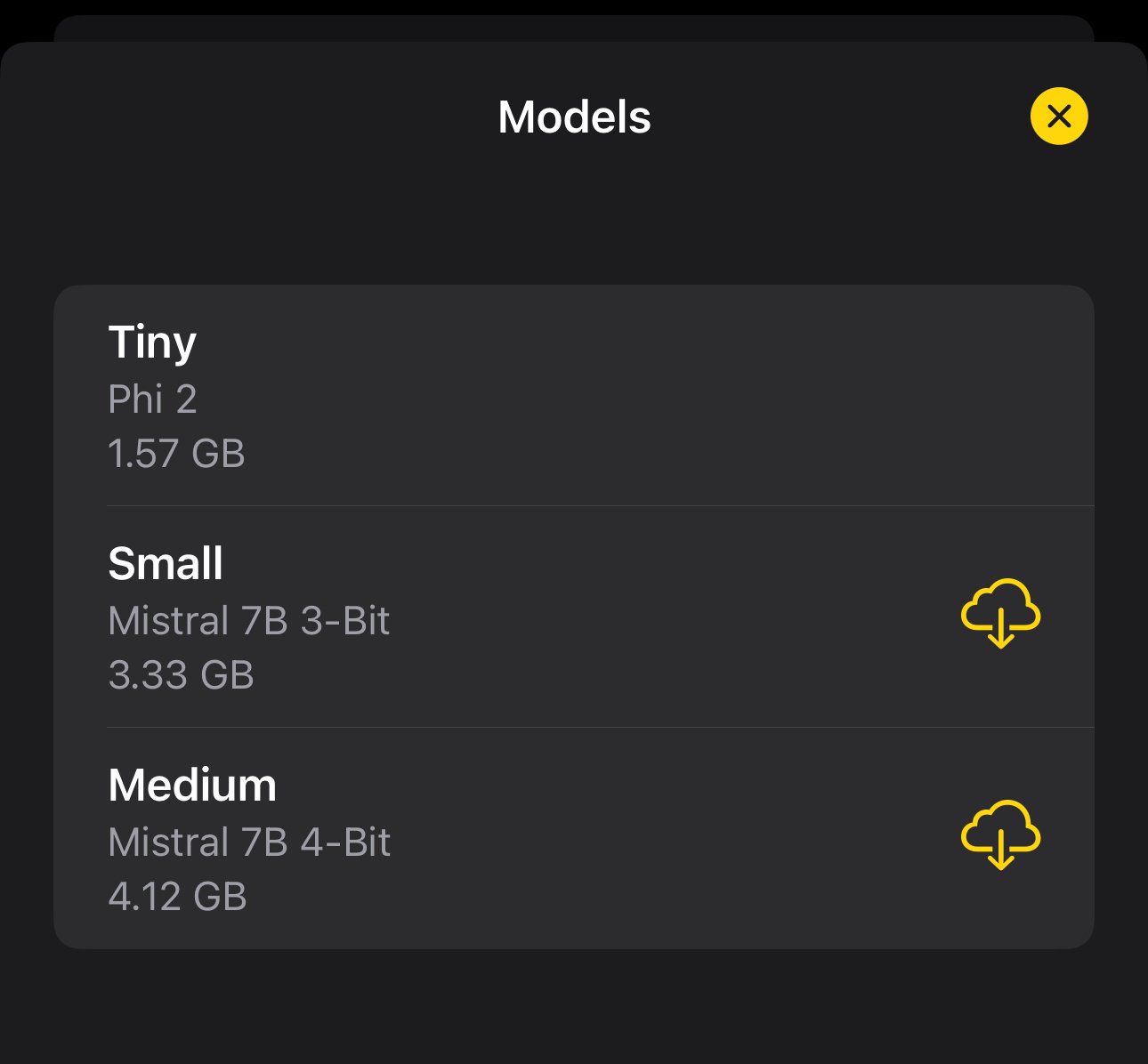

Performance has always been the concern, and the fact it needs a 5gb download

Might also be worth at look at what was done for this app. I bought it; pretty impressive even with the ‘Tiny’ model.

https://apps.apple.com/us/app/offline-chat-private-ai/id6474077941

Awesome

*#jellous #Amethyst #user*

#nostr

#ai

Sounds amazing

when pizza 🍕

Wow

#Nostr Zap Zap Zap ⚡️

using AI to summarize information is fantastically useful

yes but wen damus android *nag nag*

I have a similar setup but for news articles. The summaries with hallucinations were less than ideal (semi-controllable with temperature settings). Another factor was I found that people interested in a topic wanted to see all the content, summaries weren’t enough. However, one feature that popped out was doing Named Entity Recognition (NER) on the content _was_ useful because this allowed articles to be related to the others in the corpus. Keep up the interest work!

Local LLMs are going to be game changer with nostr integration.

🔥

Should be paid/pro feature

Local LLM translation, maybe even local, personalized content filtering… cool!

Any idea on a beta release or release of notedeck ? :)

I ask the same question daily lol

ローカルLLMでTLまとめてくれるのよさそう!

nostr:note1t5atuyyznna02f4x73vaw58ms8jdangkt5rh9gr05v439k8puwxq2f53w4