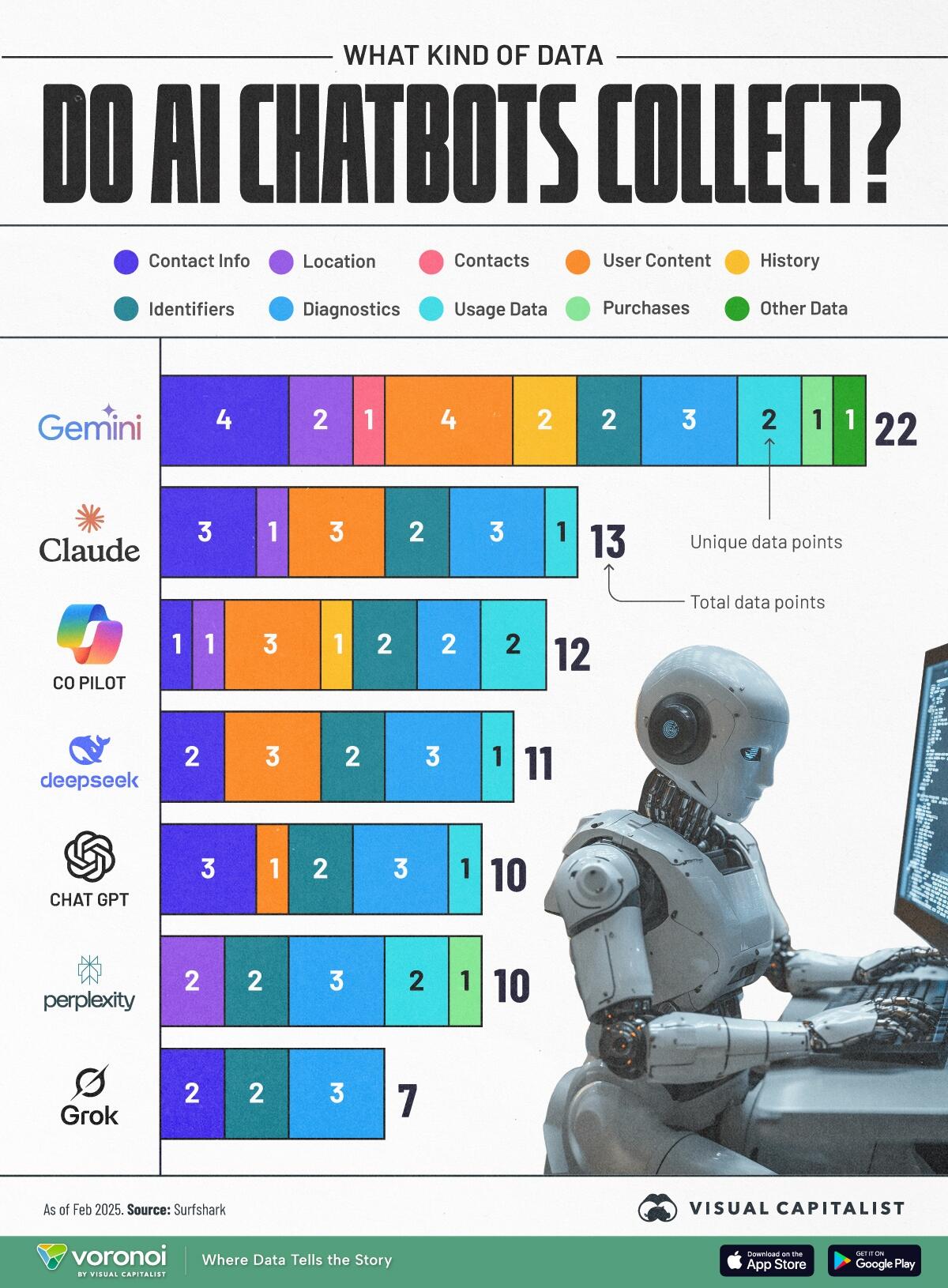

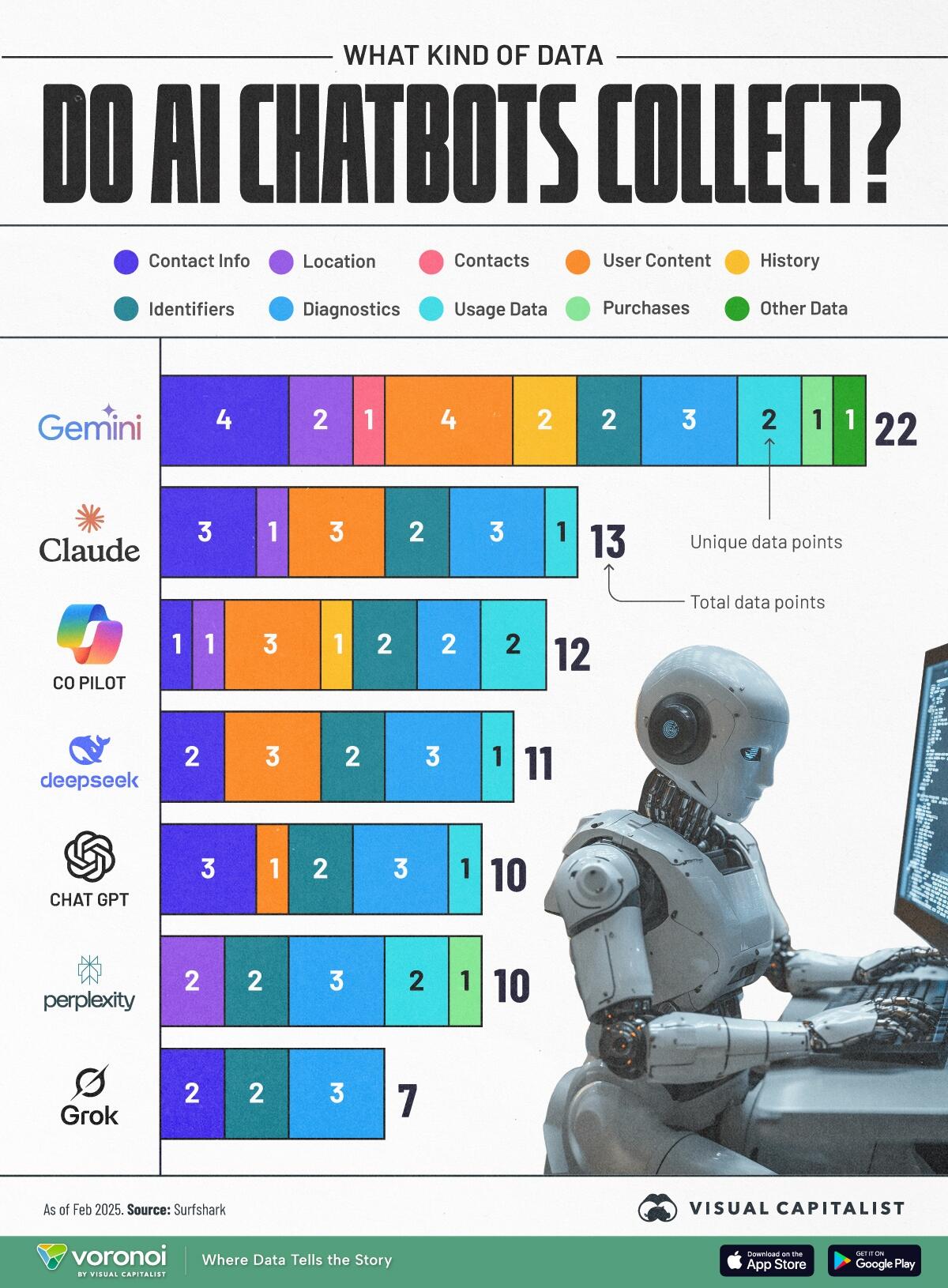

all you AI enjoyoors, have fun staying spied on

This is why I use local LLMs that aren't based on any of these and have no access to the internet. Even then, I use them very sparingly.

This doesn't differentiate between using the same model through the vendor's official interface, running it locally if it can be downloaded, or going through a third-party vendor. For example, Perplexity lets you use Claude models.

That's why I use local LLMs. I'm glad I stopped using proprietary services.