Well shoot. Call me e/acc then :)

But for real. The above assumes we maintain control. If I assumed that, I’d totally agree with you. I think the main difference in our views is that I’d put my confidence in that assumption at less than 100%, and low enough that I’m not comfortable simply behaving as if it were 100%.

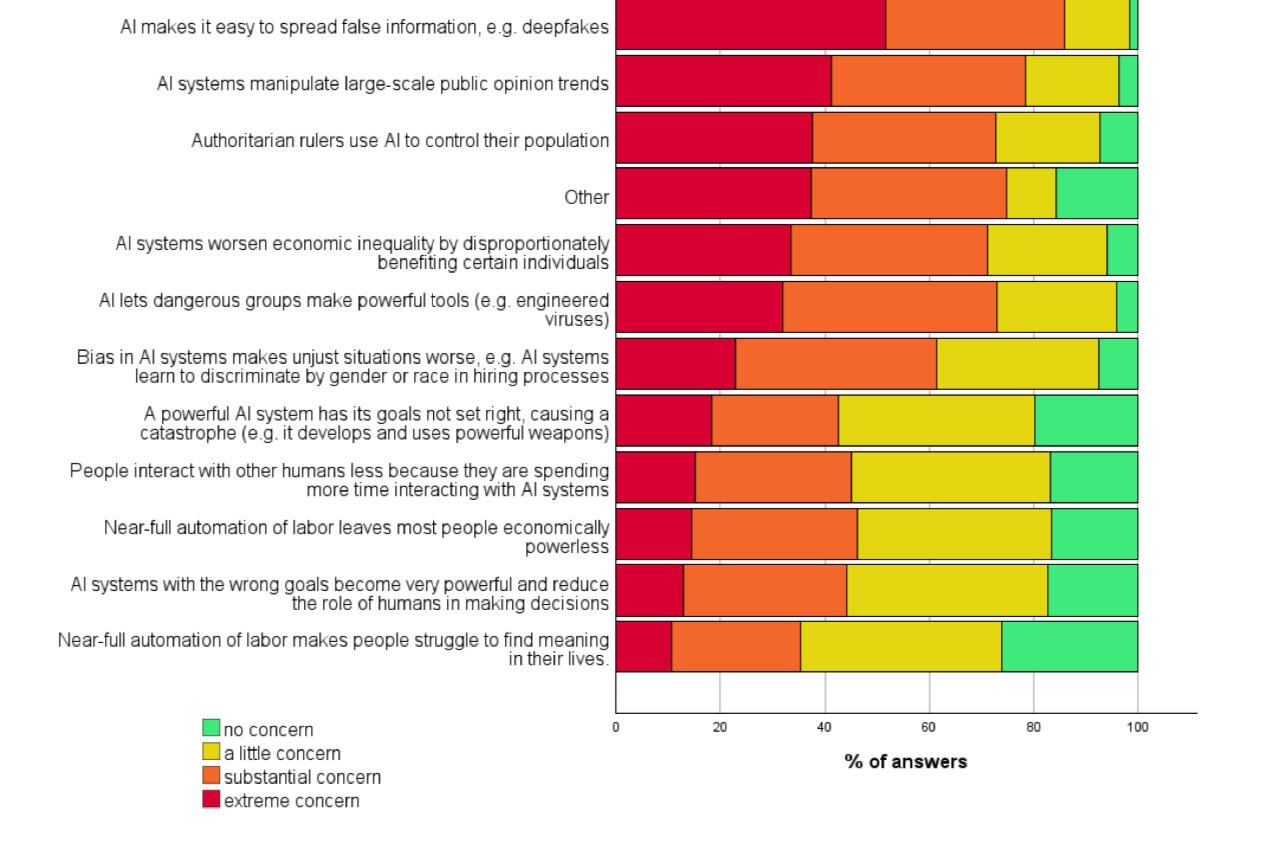

And it’s not only people who don’t understand LLMs who think the sci-fi outcome is possible. Don’t know if you’ve seen survey results from AI researchers, but you see the full range of predictions from them as well 🫂