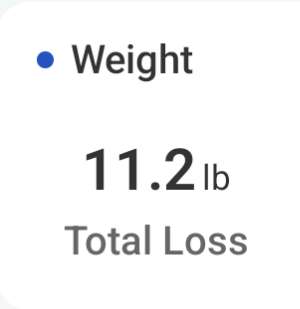

No longer fat 🫡✅

Congrats! I'm on a similar journey, although I unfortunately need to shed more than 11lbs to reach the "no longer fat" milestone. I've lost about 25lbs since November (the "silver lining" of having to care for hospitalised family members during the holidays was that I didn’t get to stuff my face as much). My goal is to keep up the momentum and drop another 75lbs by 2026. One day at a time, of course.

On a different subject, I’m still working on Haven’s PR. I came across a "small" issue: to fully eliminate old duplicated events, we’ll either need to ask LMDB users to nuke their old databases and reimport their notes, or we’ll have to add a flag to Haven that triggers nostr:nprofile1qqsrhuxx8l9ex335q7he0f09aej04zpazpl0ne2cgukyawd24mayt8gprfmhxue69uhhq7tjv9kkjepwve5kzar2v9nzucm0d5hszxmhwden5te0wfjkccte9emk2um5v4exucn5vvhxxmmd9uq3xamnwvaz7tmhda6zuat50phjummwv5hsx7c9z9's "Compact" method (https://github.com/fiatjaf/eventstore/blob/bb4bb4da67db90117de98f111440b0dace4af390/lmdb/lib.go#L74). I’m leaning toward the lazy option, asking LMDB users to nuke and reimport their notes if they want to remove duplicates, but I'm also happy to implement something like `./haven --compact`. What do you think?

Nice. How?