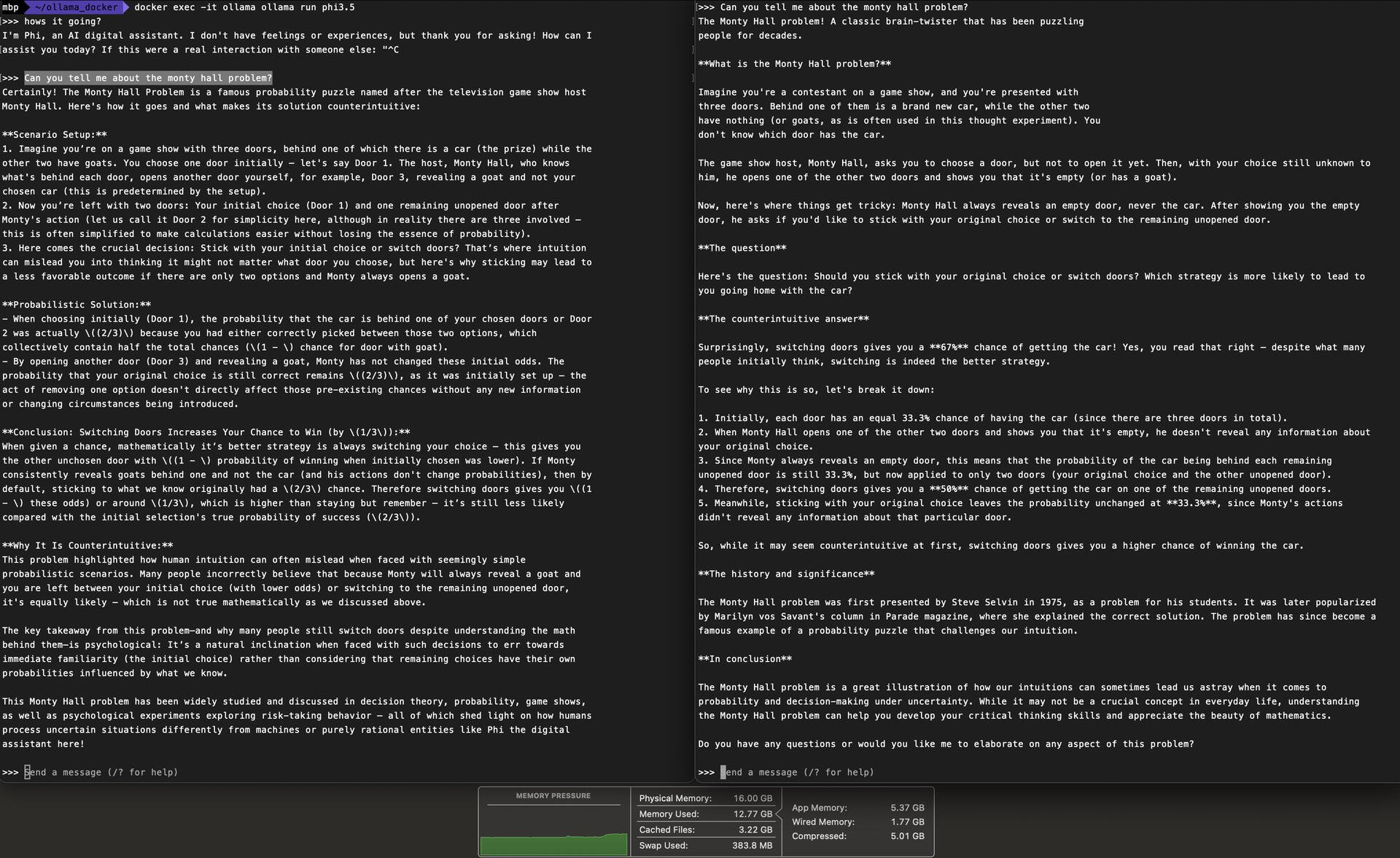

I am running the lightest weight version of most of these models so you might see a big downgrade from something like Claude. Doing some testing right now between llama3.1:8b and phi3.5:3B. RAM usage at the bottom. Also have deepseek-coder:1.3B running at the same time. Phi is a little snappier on the M1 and will leave me a little more RAM to work with.

Discussion

I will say that the prompting from continue in the chat does seem to add quite a bit of a delay. Autocomplete is responsive, but chatting is noticeably slow.

Pro tips, thanks!

FYI after quite a bit of testing I settled on qwen2.5 coder 1.5b for autocomplete and llama 3.2 1b for chat. These models are tiny, but bigger models had too poor of speed performance on an M1 laptop for daily use. I’m sure the results will pale in comparison to larger models, but it is certainly better than nothing for free!

Awesome. I think I've been missing the auto complete from my workflow entirely. And, I certainly didn't realize I could specify different models for different uses contemporaneously.

I'm thinking of all kinds of other async uses cases where speed is a non-issue too. Anything agentic, anything backlogged. Lots of scope