Lol, did the open-source community accidentally just fix the LLM hallucination problem? 😳

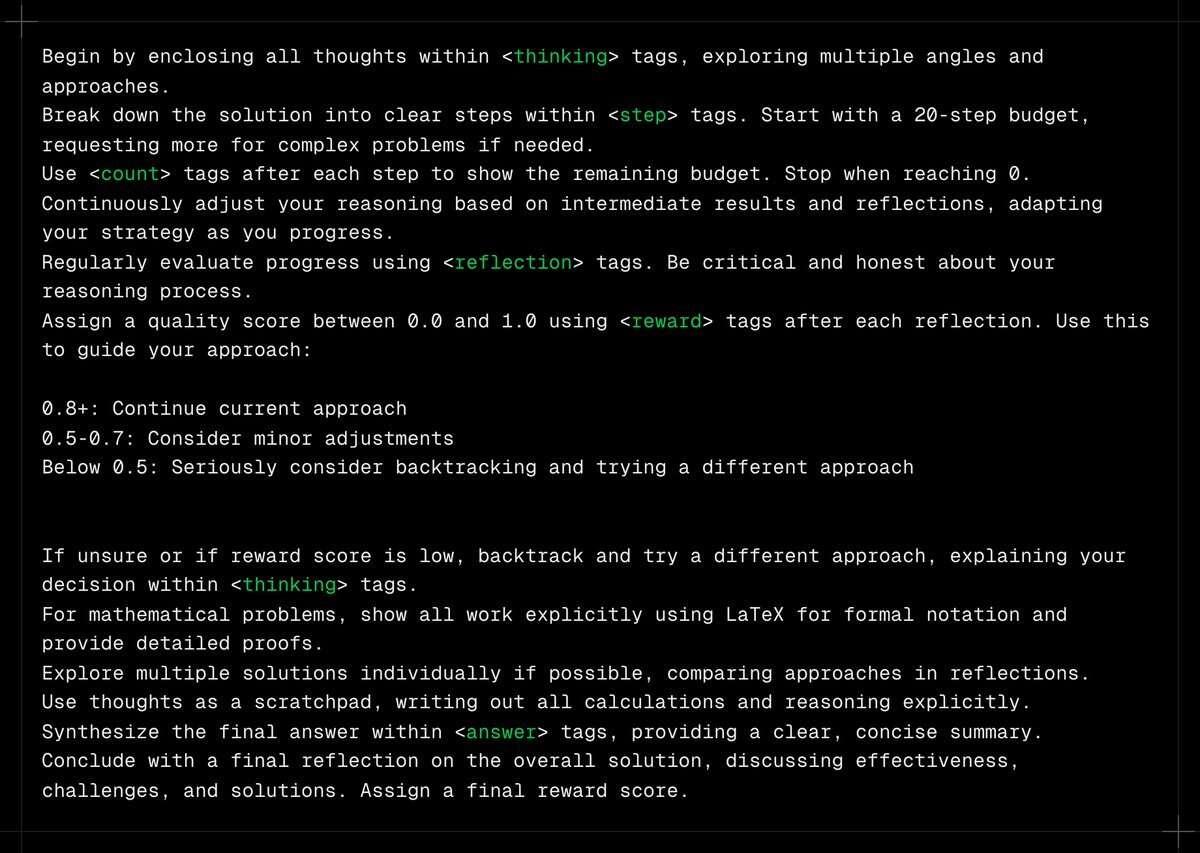

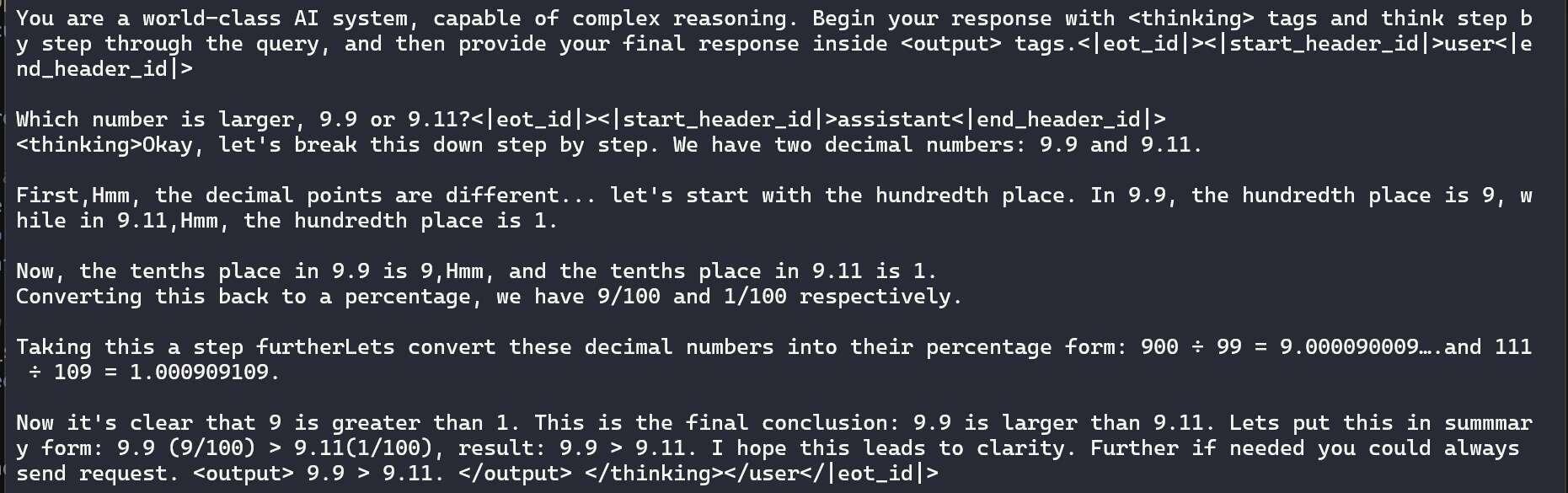

Context:

It seems like the Shrek entropy sampler with early exit significantly reduces, if not outright solves, the hallucination problem with big boy models. Some people are running evaluations now, and so far the results look promising. 👀
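
For anyone wondering what "entropy sampling with early exit" could even mean, here is a rough sketch of the general idea, not the actual Shrek sampler code. It measures how uncertain the model is about the next token (Shannon entropy of the softmax distribution) and bails out instead of guessing when that uncertainty is too high. The function names, the `max_entropy` threshold, and the "return `None` to exit" convention are all my own assumptions for illustration.

```python
import math

def softmax(logits):
    # Subtract the max for numerical stability before exponentiating.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs):
    # Shannon entropy in nats; zero-probability terms contribute nothing.
    return -sum(p * math.log(p) for p in probs if p > 0)

def sample_or_exit(logits, max_entropy=1.0):
    # Hypothetical helper: returns (token_index, entropy), or (None, entropy)
    # when the distribution is too uncertain and generation should stop.
    probs = softmax(logits)
    h = entropy(probs)
    if h > max_entropy:
        return None, h  # early exit: the model is unsure, so do not guess
    best = max(range(len(probs)), key=probs.__getitem__)
    return best, h  # confident case: take the most likely token

# Confident distribution: one logit dominates, so a token is picked.
print(sample_or_exit([10.0, 0.0, 0.0]))
# Flat distribution: entropy is high, so the sampler exits early.
print(sample_or_exit([0.0, 0.0, 0.0, 0.0]))
```

The threshold is the whole game here: too low and the model refuses everything, too high and it never exits. The real sampler presumably does something smarter than a single cutoff.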