👀

Discussion

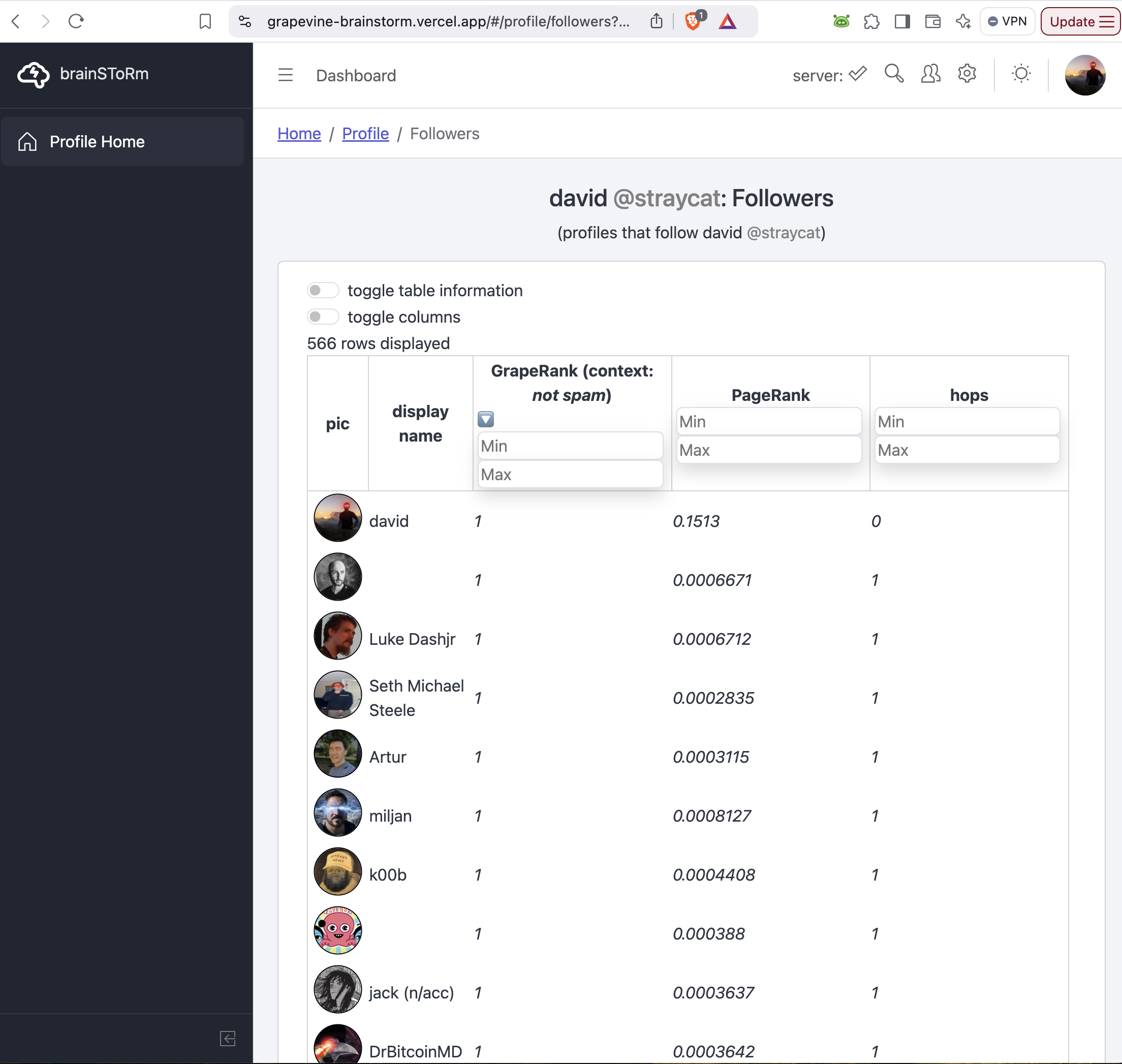

ooo pagerank. very cool

Pagerank is such a no brainer :)

Yup. It’s well known and there’s lots of tools for its calculation. I’m using the neo4j graph data science library to do it. It calculates personal PageRank for 180k pubkeys in roughly 15 seconds.

But it’s only the first step. PageRank is basically a popularity contest, which serves the needs of Google well. But for freedom tech, we will want to make some changes I think.

I have some stuff that is significantly better than PageRank.

Cool. I’d like to hear about it!

I do too, and I call it GrapeRank. The more ideas we come up with together, the better.

I have several things planned, such as a content quality metric. This discourages low-quality, repetitive posts, and other low-quality influencer-like content.

Also interaction diversity, which indicates how much that person interacts with different viewpoints.

The number of different metrics we could come up with is practically limitless. As users, we want to select those algos. But of course, we can’t all be coding 18 hours a day.

I envision GrapeRank as a general purpose method to wrap our arms around the space of metrics in a way that gives users the ability to express their individual preferences without having any sort of computer expertise. The core idea is interpretation: someone (a developer) needs to write a script to collect data and synthesize it into a format that is ready for digestion by the GrapeRank algo. Interaction diversity, for example, could be “fed” into GrapeRank and synthesized with other inputs. As users, all we do is select which pieces of data, how much to weigh them, for the calculation of any given score.

So I’d love to see your metrics in action. And then my challenge will be to demonstrate that GrapeRank can implement your metrics in a manner that reflects your intentions when you designed them.

How does GrapeRank work?

Here’s a description of how it works as currently implemented.

https://grapevine-brainstorm.vercel.app/#/about/graperank

I say “as currently implemented” bc currently I’m calculating one GrapeRank score using follows and mutes and that’s it. But the idea is that we can calculate multiple GrapeRank scores, each its own context, each using distinct data inputs which are “interpreted” using unique scripts. I probably should expand upon that point in the about section.

How many events/edges are processed in 15 sec?

Zero events in those 15 seconds, bc the graph database is updated continuously as new kind 3 and 10000 events are detected. The graph right now has 204k pubkeys and 6.5 million relationships, mostly follows, some mutes.

By comparison, I expend about a minute to calculate personalized GrapeRank for the entire graph. The scripts for that I’ve custom coded. I’m guessing it could be faster if I used neo4j to calculate GrapeRank for me, but that’s a hill I have not yet climbed.

I use more event kinds and links btw them, it's roughly 100x bigger data set, do you have any idea if neo4j could handle that? How much RAM/disk/time could that take?

Neo4j is definitely resource hungry.

But it is designed to handle very large datasets, with real-world examples in the hundreds of billions of nodes. My understanding is that there is no theoretical upper limit to the number of nodes and edges other than those imposed by hardware.

I envision two applications for neo4j and nostr: 1) central services like nostr.band and 2) personalized WoT relays, with personal relays using intelligent Knowledge Graph based WoT to curate the local dataset so it doesn’t get too unwieldy and expensive.

So what would it take to put the entire nostr.band dataset onto a neo4j graph? Perhaps nostr:npub10mtatsat7ph6rsq0w8u8npt8d86x4jfr2nqjnvld2439q6f8ugqq0x27hf you can help us do a back of the envelope calculation in terms of RAM and other requirements to support this database. Suppose we were to host it on AWS. How much would it cost?

I imagine we would start with 3 node types: NostrUser, NostrEvent, and NostrRelay.

Then add relationships:

user to user: FOLLOW, MUTE, REPORT, etc

user to event: AUTHOR

Various event to event relationships: IS_A_REPLY_TO, etc.

Various other relationships and node types as we turn it into a bona fide Knowledge Graph.

How big is your current dataset? Do you filter anything out or keep every event that you come across?

Just on the RAM requirements, Neo4j's guidance is to have enough to store the entire dataset in RAM. If you don't have enough RAM, it's fine, but you'll be limited by your storage IO speed.

Their cloud configuration minimum recommendation is 2CPU/2GB RAM, but 16+ CPUs are recommended. You can get by with 4 CPUs in my experience, but big queries will take longer. I don't believe there are any limits imposed in the free license besides not having enterprise features/optimizations.

Let’s calculate how much it would cost to put all of nostr into neo4j 🤓

We can use nostr.band for reference.

Assume the events are kept in a performant key value db like LMDB. Don’t need to load content field into neo4j. Maybe not the tags either.

I would store the event jsons in nodes at first, but it's worth it to compare how storing them separately would affect performance.

Suppose each :NostrEvent node were limited to just a few properties: eventId, created_at, author. +/- tags. This would reduce the db size by quite a lot.

Yeah. Strfry does the heavy lifting for storing events already. Neo4j just needs to store the relationships between them to run WoT algorithms.

Yup. Pairing LMDB and neo4j together makes a lot of sense. If you want to do keyword search through content, pull from LMDB. Then to filter and sort the results, that’s what neo4j is for.

I plan on adding compact wot graph support to nostrdb (which is lmdb). Seems like an obvious win for this state to be embedded in nostr clients.

neo4j? Some other FOSS graph db? Or custom code the graph?

nostrdb is a custom embeddable nostr database/relay built on lmdb. It’s the engine that powers damus ios and damus notedeck. I want it to automatically calculate follow graphs/web of trust when processing contact events, so this state is readily available and queryable.

I listened to your recent talk with nostr:npub1jlrs53pkdfjnts29kveljul2sm0actt6n8dxrrzqcersttvcuv3qdjynqn on nostr:npub1mlcas7pe55hrnlaxd7trz0u3kzrnf49vekwwe3ca0r7za2n3jcaqhz8jpa and I’m excited to see where you go with notedeck 💜

If you want notedeck to calculate follow graphs / WoT, then you’re going to want to use a graph db like neo4j, which is what I use to calculate your personalized Follows Network in under half a minute. It spits out a json with 180k pubkeys and the number of follow-hops between you and each pubkey. This is separate from calculating personalized PageRank, which it also does in about 15 seconds.

I have lmdb, i can build up these relations myself using lmdb indices. It doesn’t really make sense to pull in a new database just for this feature

Actually, it doesn't make sense to recreate Neo4j's graph data structure and query language. It's more mature than you think.

That seems like a lot of work, maybe eventually. Just gonna keep the api relatively simple: a function that gives wot score between two pubkeys

I agree. Neo4j has a nontrivial learning curve and we can’t all do all the things.

I think the ideal scenario would be devs who are already familiar with Neo4j to put together some ETL tools to sync LMDB with Neo4j. Something that can be readily paired with notedeck, strfry, khatru, etc.

I'd need to do some research ingesting events into neo4j. It shouldn't be much more than running a relay in terms of storage space. Where you'll pay the most is in CPUs. On Amazon, the difference between 2 and 4 CPUs looks like $50/mo. I'm sure you could have good performance on a small or medium sized web of trust in their medium 2CPU/4GB instances for $15-20/mo.

For all of Nostr, primal style centralized cache with lots of users, I'd guess you could spend $50-100 or more a month.

I just looked, and the free edition allows 4 CPUs max, 34 billion nodes, and no clustering. Makes sense price wise, because as soon as you're paying for than 4 CPUs in hosting, you're big enough to not gawk at the price of an enterprise license.

Imagine building a personalized WoT relay using community edition neo4j. Fully FOSS. Personalized reputation scores. Knowledge graph to organize the content that you care about the most.

One could do a lot with 34 billion nodes.

I got strfry and neo4j playing nice on a server together. Next step is ETL pipeline from strfry’s LMDB into neo4j.

Ofc, lots of relays use LMDB, so the above pipeline could be easily applied to khatru, nostrdb, etc.

Perhaps nostr:npub1fvmadl0mch39c3hlr9jaewh7uwyul2mlf2hsmkafhgcs3dra6dzqg6szfu + neo4j … 🤔

nostr:npub10npj3gydmv40m70ehemmal6vsdyfl7tewgvz043g54p0x23y0s8qzztl5h

neo4j can definitely handle everything that nostr can throw at it.

This was in 2021:

“Behind the Scenes of Creating the World’s Biggest Graph Database”

280 TB, 1 trillion relationships

https://neo4j.com/developer-blog/behind-the-scenes-worlds-biggest-graph-database/

yes, was surprised this is the first time I’ve seen it

This is why graph databases and nostr are a match made in heaven 💜

I managed yesterday to get strfry and neo4j running together on a single server. Working now on ETL pipeline from LMDB to the graph database. Step one of next-gen personalized WoT relays.

To be fair, I think nostr:nprofile1qqsrx4k7vxeev3unrn5ty9qt9w4cxlsgzrqw752mh6fduqjgqs9chhgppemhxue69uhkummn9ekx7mp0qy2hwumn8ghj7un9d3shjtnyv9kh2uewd9hj7qg3waehxw309ahx7um5wgh8w6twv5hsxct838 has been using PageRank on nostr.band since the beginning. He just doesn't call it that most of the time.

Yes that’s true. He discusses it here: