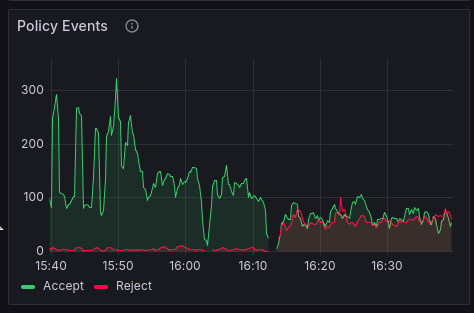

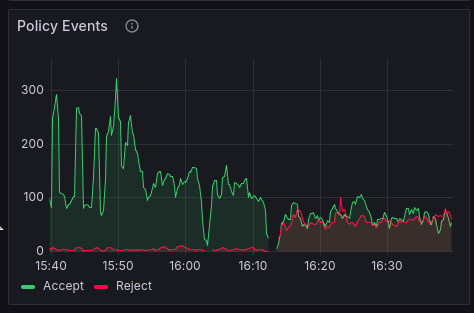

Look how much crap is blocked by just requiring the user to have a kind 0 on the relay already. That's like 90% of it.

👀

nostr:note1vgvhwjn6ewsr7z4cq0qc4j4fpz0aqrc66u5xt7r7fat35p5s09uqlvggjv

so they did it. onboarding impossible?

ChatGPT helped me out here, I guess:

This Nostr post refers to how effective a simple requirement can be in filtering out unwanted or low-quality content on a relay (a server in the Nostr protocol, which is a decentralized social network protocol). Here's a breakdown of the key parts:

"Requiring the user to have a kind 0 on the relay":

In Nostr, events (messages, posts, updates) are categorized into different "kinds." A kind 0 event typically refers to a profile metadata update (such as name, bio, or avatar).

Requiring a user to have a kind 0 event on the relay means that only users who have updated their profile metadata on that particular relay can post or engage on it.

"That's like 90% of it":

The author is suggesting that by implementing this basic requirement (having a kind 0 event on the relay), you can block about 90% of unwanted content, spam, or malicious posts.

"Look how much crap is blocked":

The phrase "crap" here refers to low-quality content, spam, bots, or malicious actors. The author is pointing out that many of these issues are filtered out by simply requiring a small commitment from users (updating their profile metadata).

In essence, the post highlights that a basic filter like requiring users to provide a profile (kind 0 event) can drastically reduce undesirable content on a Nostr relay.
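
For anyone wondering what that filter might look like on the relay side, here is a minimal sketch. It is not the actual relay's code: `RelayStore` and `accept` are hypothetical names, storage is just an in-memory set, and signature verification (which a real relay would do first) is left out.

```python
from dataclasses import dataclass, field

KIND_METADATA = 0  # NIP-01: kind 0 carries user profile metadata


@dataclass
class RelayStore:
    """Hypothetical in-memory stand-in for a relay's event storage."""

    pubkeys_with_profile: set = field(default_factory=set)

    def accept(self, event: dict) -> bool:
        """Return True if the event passes the 'kind 0 first' policy."""
        pubkey = event["pubkey"]
        if event["kind"] == KIND_METADATA:
            # Profile events are always accepted and unlock later writes.
            self.pubkeys_with_profile.add(pubkey)
            return True
        # Anything else needs a previously stored kind 0 from the same author.
        return pubkey in self.pubkeys_with_profile


relay = RelayStore()
note = {"pubkey": "abc", "kind": 1, "content": "hello"}
profile = {"pubkey": "abc", "kind": 0, "content": '{"name": "alice"}'}

print(relay.accept(note))     # False: no profile on this relay yet
print(relay.accept(profile))  # True: kind 0 is always let through
print(relay.accept(note))     # True: the author now has a kind 0 stored
```

The check is a single set lookup per event, which is probably why it is attractive as a first-pass spam filter.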

But this requires rebroadcasting your profile metadata each time you add a new relay, doesn’t it?
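
For what it's worth, rebroadcasting should not need a new signature: under NIP-01 the event `id` and `sig` are computed from the event itself, not from any relay, so a client can send the same signed kind 0 to the new relay in an `["EVENT", ...]` message. A rough sketch, where `rebroadcast_message` is a hypothetical helper and the event values are placeholders rather than a real signed event:

```python
import json


def rebroadcast_message(signed_event: dict) -> str:
    """Build the NIP-01 client-to-relay frame that publishes an event."""
    if signed_event.get("kind") != 0:
        raise ValueError("expected a kind 0 (profile metadata) event")
    # The already-signed event is reused unchanged; only the destination differs.
    return json.dumps(["EVENT", signed_event])


# Placeholder values for illustration only (not a valid id, pubkey, or sig).
profile_event = {
    "id": "<event id>",
    "pubkey": "<author pubkey>",
    "created_at": 1700000000,
    "kind": 0,
    "tags": [],
    "content": json.dumps({"name": "alice"}),
    "sig": "<signature>",
}

frame = rebroadcast_message(profile_event)
# `frame` is the text a client would send over the websocket to the new relay.
```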

I feel like if you don't even put your profile data on a relay, are you really even using that relay?