When I talk about learning curves, I mean them in the economic sense, like this:

https://galepooley.substack.com/p/how-learning-inverts-the-supply-curve

When people find a broken, probably unfair strategy in a certain game, they begin to harness it in new, emergent ways, just like when a profitable product is "improved" through massive, repetitive production and by learning things about it.

I consider this one of the forces that drives a significant part of the players to play a game in the first place. Suddenly cutting the whole strategy could provoke an exodus of participants and be counterproductive for the people generating ideas while playing the game. I see it as a great advantage that AI could take all of those ideas as vectors and find ways to "cut in half" the broken mechanic. People could keep learning about it, and the bad effects of the evil mechanic could be mitigated because (I guess) there are no infinitely extended exponential learning curves in the world: eventually, the profit potential gets exhausted.
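A common way to put numbers on an economic learning curve is Wright's law: every time cumulative output doubles, unit cost is multiplied by a fixed learning rate. The sketch below is a minimal Python illustration, assuming we treat the effort of executing a broken strategy as the "unit cost" and add a hypothetical cost floor where learning runs out. The function names, the 80% learning rate, and all numbers are illustrative assumptions, not anything measured from a real game.

```python
import math


def wright_cost(n: int, first_cost: float, learning_rate: float, floor: float = 0.0) -> float:
    """Unit cost after n cumulative units under Wright's law.

    Each doubling of cumulative output multiplies the reducible part of the
    cost (first_cost - floor) by learning_rate. The floor is an assumption
    standing in for the point where learning is exhausted.
    """
    b = -math.log2(learning_rate)  # learning exponent, e.g. 0.8 -> ~0.32
    return floor + (first_cost - floor) * n ** (-b)


def max_profit(value: float, floor: float) -> float:
    """Upper bound on profit per use once learning is exhausted."""
    return max(value - floor, 0.0)


if __name__ == "__main__":
    # Hypothetical numbers: a strategy "worth" 10 per use, the first use
    # costs 8 units of effort, 80% learning rate, effort never below 2.
    for n in (1, 2, 4, 8, 16, 1024):
        print(n, round(wright_cost(n, 8.0, 0.8, floor=2.0), 3))
    print("max profit, full mechanic:", max_profit(10.0, 2.0))
    print("max profit, cut in half:  ", max_profit(5.0, 2.0))
```

With these numbers, the first doubling saves 1.2 units of effort, the next saves 0.96, and the cost never drops below the floor of 2, so the exploitable profit stays bounded. Halving the strategy's value (the "cut in half" idea) lowers that bound from 8 to 3 while still leaving something for players to learn.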

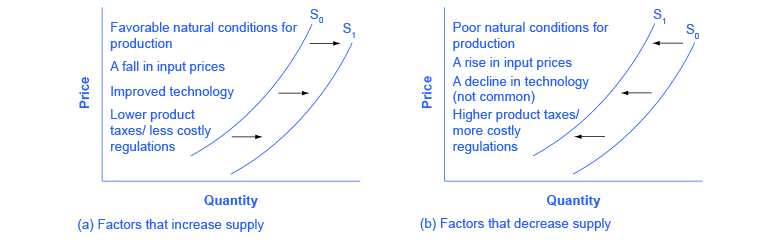

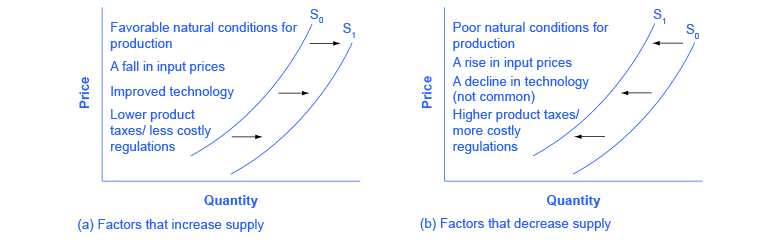

I think the curve moves like (a) in the picture when productivity increases.

Yeah, that improves the productivity (supply) of a certain product. The thing is that people are also incentivized to produce for a profit. If a product becomes that abundant, its price will drop pretty quickly (under the standard law of supply and demand in a free market, of course), and producers will eventually be incentivized to produce other things, because switching to them becomes the better option once opportunity cost is taken into account.
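The supply-shift argument can be made concrete with a minimal linear market, assuming straight-line demand and supply curves (the class name, method names, and numbers here are hypothetical). Learning that lowers the cost of every unit shifts the supply curve down and to the right, and the new equilibrium has a lower price and a higher quantity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinearMarket:
    """Hypothetical market with straight-line curves.

    Demand: P = demand_intercept - demand_slope * Q
    Supply: P = supply_intercept + supply_slope * Q
    """

    demand_intercept: float
    demand_slope: float
    supply_intercept: float
    supply_slope: float

    def equilibrium(self) -> tuple[float, float]:
        """Return (quantity, price) where the two curves cross."""
        q = (self.demand_intercept - self.supply_intercept) / (
            self.demand_slope + self.supply_slope
        )
        p = self.demand_intercept - self.demand_slope * q
        return q, p

    def with_productivity_gain(self, cost_drop: float) -> "LinearMarket":
        """Lower the cost of every unit by cost_drop (supply shifts down/right)."""
        return LinearMarket(
            self.demand_intercept,
            self.demand_slope,
            self.supply_intercept - cost_drop,
            self.supply_slope,
        )


if __name__ == "__main__":
    before = LinearMarket(100.0, 1.0, 20.0, 1.0)
    after = before.with_productivity_gain(20.0)
    print("before:", before.equilibrium())
    print("after: ", after.equilibrium())
```

With demand P = 100 - Q and supply P = 20 + Q, the equilibrium is Q = 40 at P = 60; a productivity gain of 20 per unit moves it to Q = 50 at P = 50. Once the price falls far enough, producers compare it with what they could earn elsewhere, which is where the opportunity-cost argument kicks in.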

But yeah, AI may come to a point where it plays with people while they think they are playing a game. This is very apparent in racing games: when you suck at it, the NPC cars also start slowing down 😄 🤦♂️
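Racing games usually call this "rubber-band" AI. A minimal sketch of one way it can work, assuming the NPC's speed is nudged linearly by its distance ahead of or behind the player and clamped to a maximum adjustment (the function and parameter names are hypothetical; real games tune this in many different ways):

```python
def rubber_band_speed(
    base_speed: float,
    npc_position: float,
    player_position: float,
    strength: float = 0.01,
    max_adjust: float = 0.3,
) -> float:
    """Scale an NPC's speed by how far it is ahead of (or behind) the player.

    gap > 0 means the NPC is ahead, so it slows down; gap < 0 means it is
    behind, so it speeds up. The relative adjustment is clamped to
    [-max_adjust, +max_adjust].
    """
    gap = npc_position - player_position
    adjust = max(-max_adjust, min(max_adjust, -strength * gap))
    return base_speed * (1.0 + adjust)


if __name__ == "__main__":
    for npc_pos in (100.0, 70.0, 60.0, 20.0):
        print(npc_pos, rubber_band_speed(50.0, npc_pos, player_position=60.0))
```

For example, with base_speed=50 and the player at position 60, an NPC 40 units ahead is slowed to 35, one 10 units ahead drives at 45, and one 40 units behind is sped up to 65. The clamp keeps the trick from being too obvious, which is roughly why players only notice it when they are doing badly.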

It's a similar worry to AI turning the entire world into paperclips, right? If AI systems are produced through the catallactic competition of many parties with their own interests, and are fed Nostr data (which helps prevent the spread of lies), you could expect to organically get AIs that drift farther and farther away from the paperclip dystopia. And if one of them becomes somewhat paperclippy, it will eventually be exposed by other models, I guess 🤷

Yes, rogue AI can be fixed by consciously curated AI.

Another thing is that balancing games (making them fairer) is in itself an extremely difficult and time-consuming task.

Cutting a broken strategy in half and letting it evolve through the players' learning is a lot smoother and simpler than trying to eliminate the bad thing immediately, which could leave the game in an even more broken state.

That's why "I think" it's sustainable. It's simple and stupid, right there in plain sight.

This looks like at least starting the negotiation and keeping it going in order to reach a deal: both sides want something, but they don't know where the equilibrium is.
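That kind of back-and-forth can be sketched as a simple iterative process in which neither side knows the equilibrium in advance and each round closes part of the gap. This is a hypothetical toy model (the function name, the fixed concession rate, and the stopping tolerance are all assumptions), not a real bargaining protocol:

```python
def negotiate(
    buyer_offer: float,
    seller_ask: float,
    concession: float = 0.25,
    tolerance: float = 0.01,
    max_rounds: int = 1000,
) -> tuple[float, int]:
    """Trade small concessions until the offers (nearly) meet.

    Each round, both sides move `concession` of the remaining gap toward
    each other. Returns (agreed price, rounds used).
    """
    if not 0.0 < concession <= 0.5:
        raise ValueError("concession must be in (0, 0.5]")
    rounds = 0
    while seller_ask - buyer_offer > tolerance:
        if rounds == max_rounds:
            raise RuntimeError("no deal within max_rounds")
        gap = seller_ask - buyer_offer
        buyer_offer += concession * gap  # buyer raises the offer
        seller_ask -= concession * gap   # seller lowers the ask
        rounds += 1
    # Settle on the midpoint of the (now tiny) remaining gap.
    return (buyer_offer + seller_ask) / 2, rounds


if __name__ == "__main__":
    print(negotiate(40.0, 60.0))
```

Starting from a buyer offer of 40 and a seller ask of 60 with the default 25% concession, each round halves the gap, and the sides settle at 50 after 11 rounds. With symmetric concessions the deal always lands on the midpoint; giving each side its own concession rate would move it.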