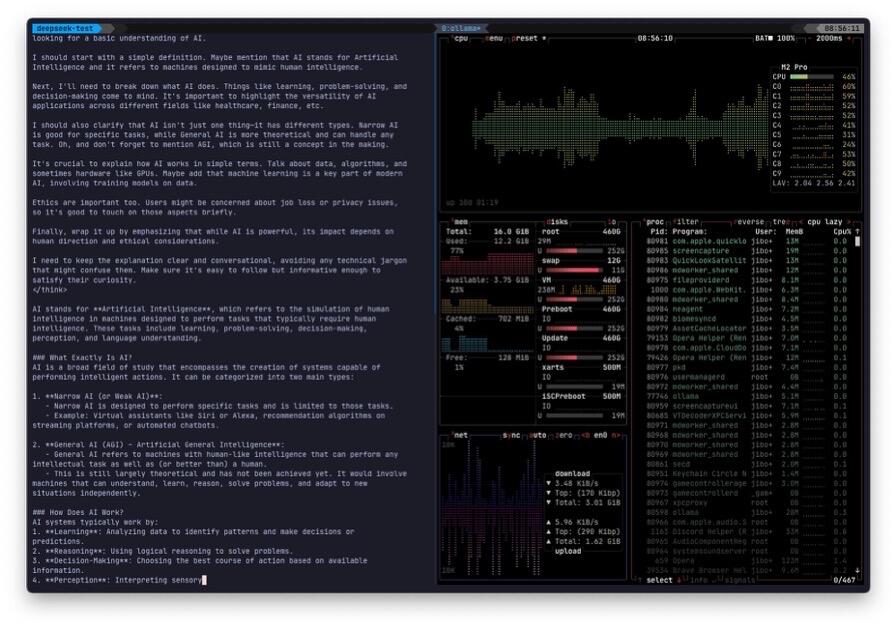

MacBook Pro M2 / 16GB RAM can run DeepSeek-R1 14B comfortably. #ai #deepseek #ollama nostr:note1extdr5qtywfrz4mr0ztw5pp47l088nthd3e2y87a7zxvct0r5qdqsk88ql

Discussion

For real!! I was thinking I would need to daisy-chain some Mac minis to do it!!

To be fair, I'm running the distilled 14B model, not the full 671B one. I don't have the hardware for that 😁
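A rough back-of-envelope for why the 14B fits and the full model doesn't: the weights alone take about parameters × bits per weight ÷ 8 bytes. The sketch below assumes about 4.5 bits per weight (what a 4-bit quantization such as llama.cpp's Q4_0 averages) and uses the nominal 14B and 671B parameter counts. It ignores the KV cache and runtime overhead, so real memory use will be higher.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Rough size of the model weights alone, in GB (1 GB = 1e9 bytes).

    Ignores the KV cache, activations, and runtime overhead,
    so actual memory use is higher than this estimate.
    """
    bytes_total = params_billion * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


# Assumption: ~4.5 bits per weight, typical of a 4-bit quantization.
for name, params in [("distilled 14B", 14.0), ("full 671B", 671.0)]:
    print(f"{name}: ~{weight_memory_gb(params, 4.5):.1f} GB of weights")
```

This prints roughly 7.9 GB for the 14B model and 377.4 GB for the full one. That gap is why 16GB of unified memory handles the distilled model with room to spare, while the full model needs far more hardware.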

But I feel it is enough for what I need. I use an Obsidian AI chat plugin that talks to Ollama.

I use it to add context to notes, reword sentences, and give suggestions: simple text stuff 😁
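The post doesn't say exactly how the Obsidian plugin talks to Ollama. However, Ollama exposes a local HTTP API (by default on port 11434), and a plugin like this could use its `/api/generate` endpoint. Here is a minimal Python sketch of the "reword sentences" use case. It assumes Ollama is running locally and `deepseek-r1:14b` has been pulled. The prompt wording and the helper names (`build_reword_payload`, `strip_think`, `reword`) are hypothetical choices made for illustration. R1 models can emit a `<think>...</think>` reasoning block before the answer, so the sketch strips it out.

```python
import json
import re
import urllib.request

# Ollama's default local endpoint (assumes the server is running and
# the deepseek-r1:14b model has already been pulled).
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL = "deepseek-r1:14b"


def build_reword_payload(sentence: str) -> dict:
    """Build a non-streaming /api/generate request asking the model to reword a sentence."""
    prompt = (
        "Reword the following sentence to be clearer. "
        "Reply with the reworded sentence only.\n\n" + sentence
    )
    return {"model": MODEL, "prompt": prompt, "stream": False}


def strip_think(text: str) -> str:
    """Remove a <think>...</think> reasoning block that R1 models may emit before the answer."""
    return re.sub(r"<think>.*?</think>", "", text, flags=re.DOTALL).strip()


def reword(sentence: str) -> str:
    """Send the request to the local Ollama server and return the cleaned answer."""
    data = json.dumps(build_reword_payload(sentence)).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)  # non-streaming: one JSON object
    return strip_think(body["response"])
```

With the server running, `reword("I use it to give context to notes.")` returns the model's rewording. Setting `"stream": False` makes Ollama return a single JSON object with the full text in its `response` field, rather than a stream of partial chunks, which keeps the client code simple.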