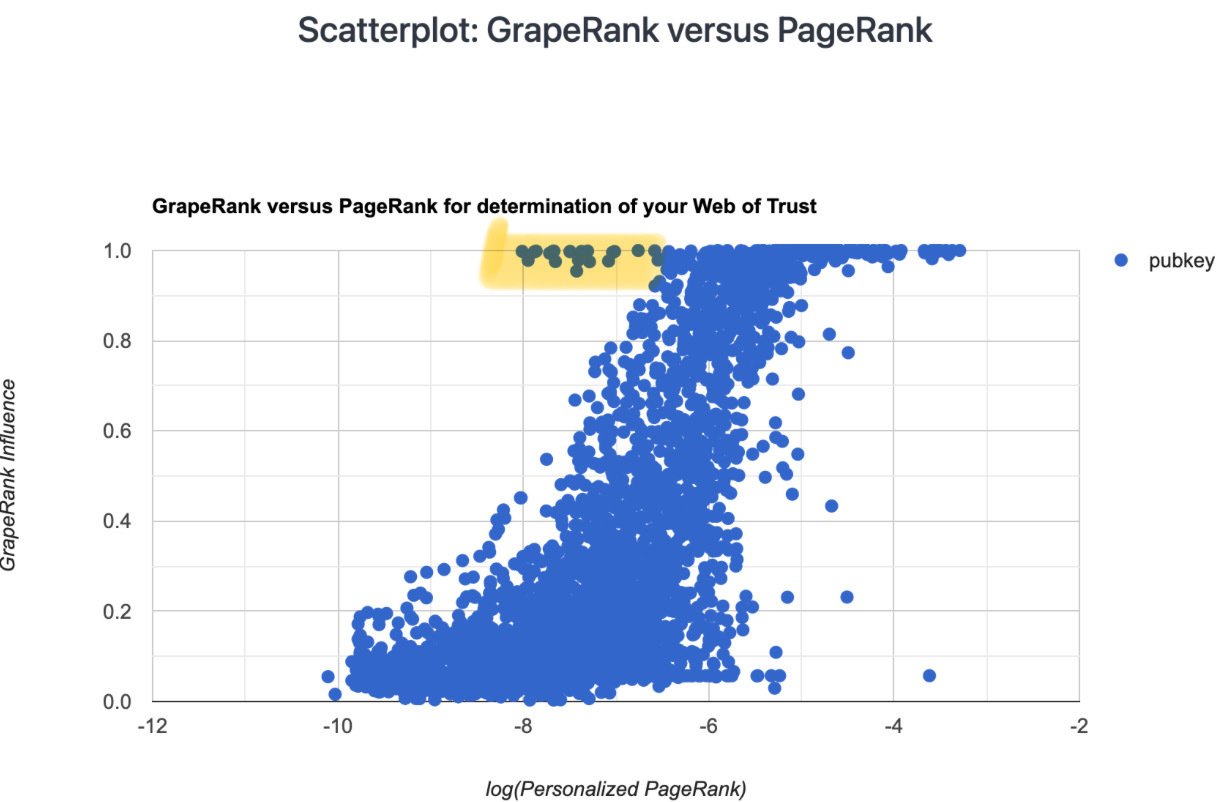

algorithmic analysis of my web of trust

~ 180k pubkeys in all

The ones highlighted in yellow are the nostrich.house bots.

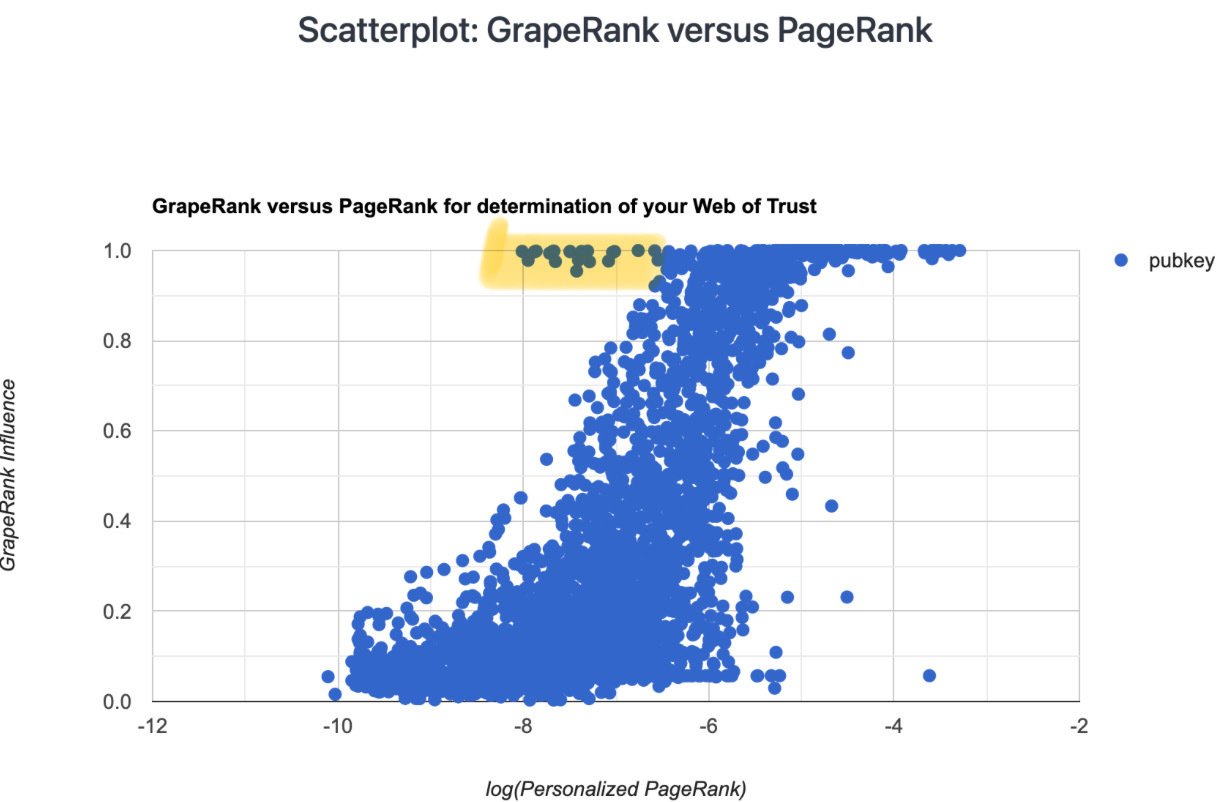

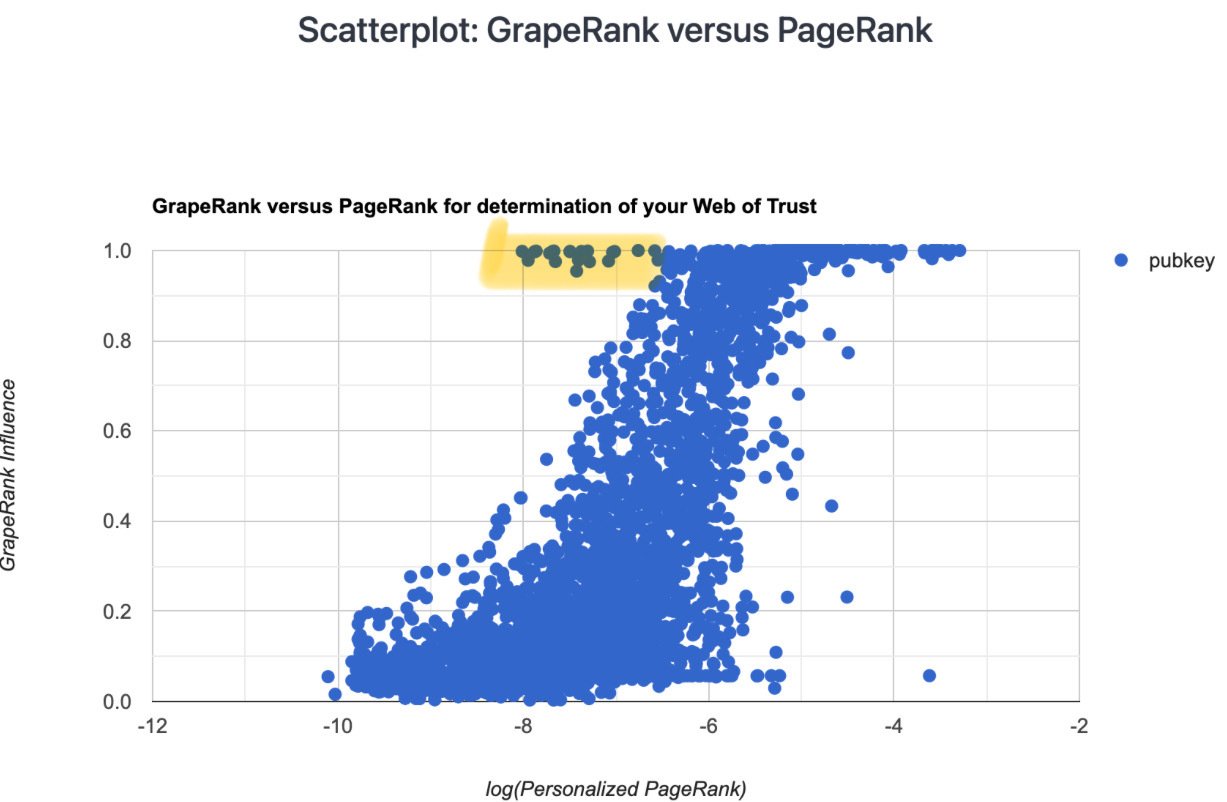

algorithmic analysis of my web of trust

~ 180k pubkeys in all

The ones highlighted in yellow are the nostrich.house bots.

What am I looking at for x and y axes?

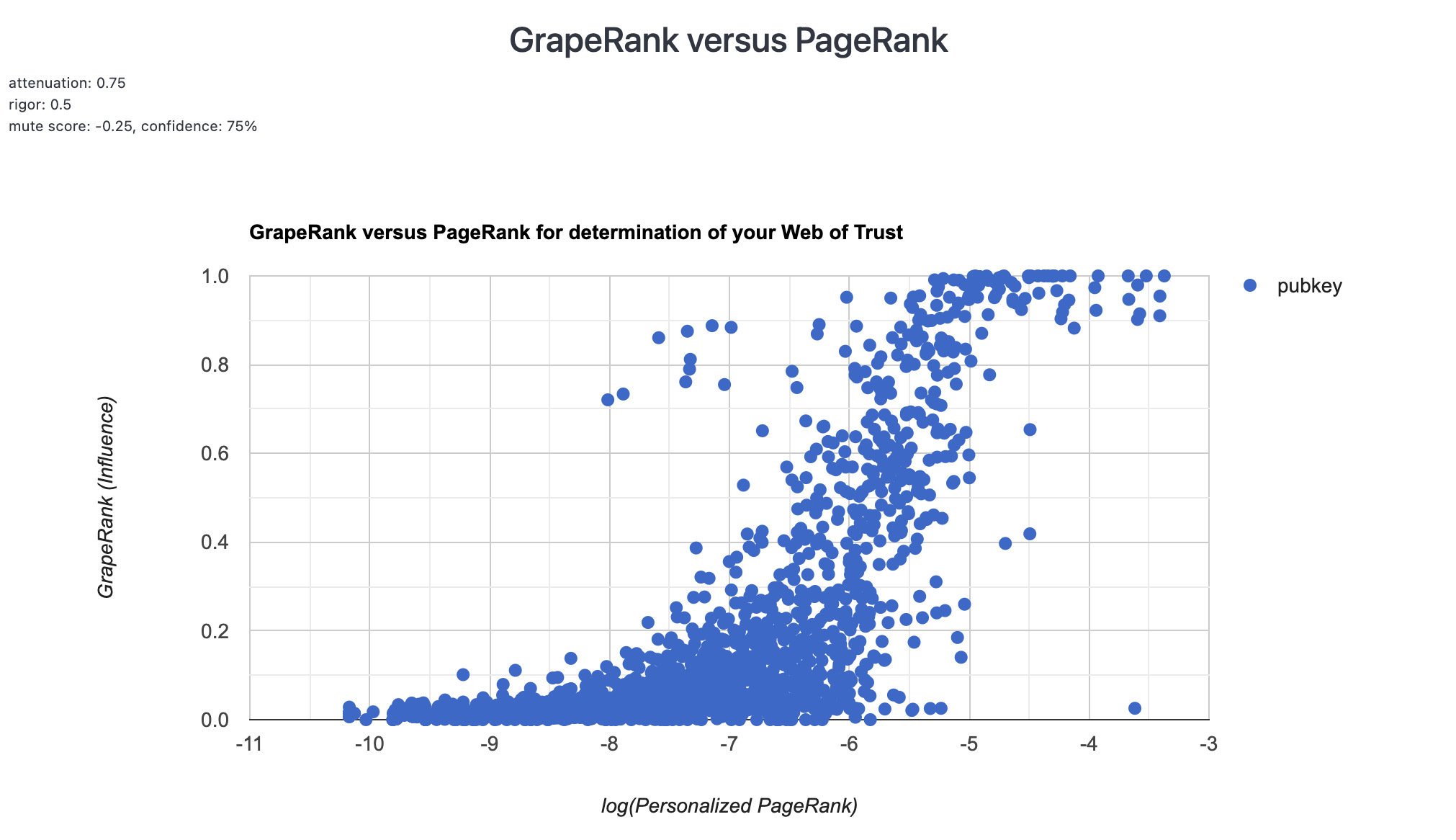

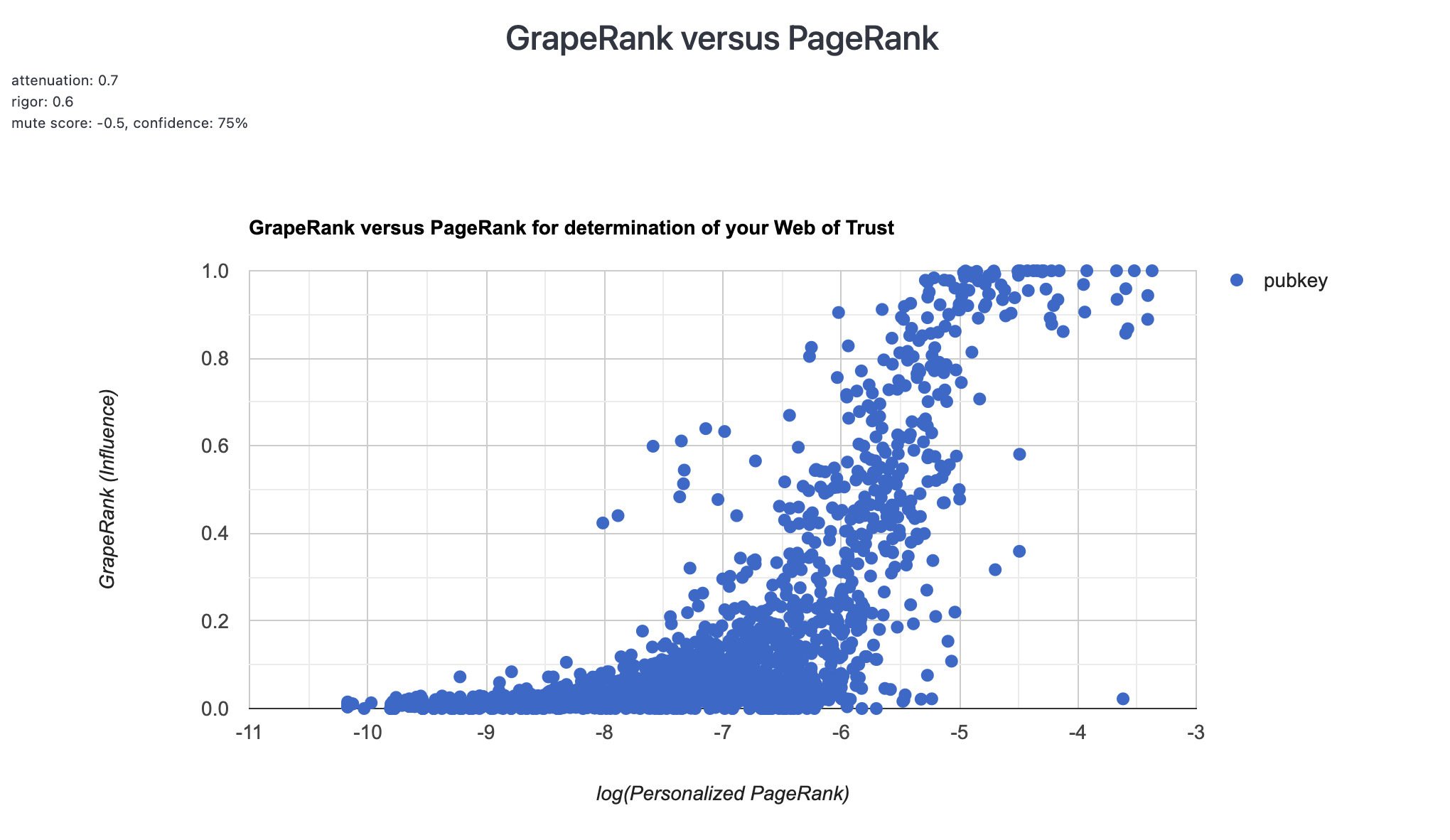

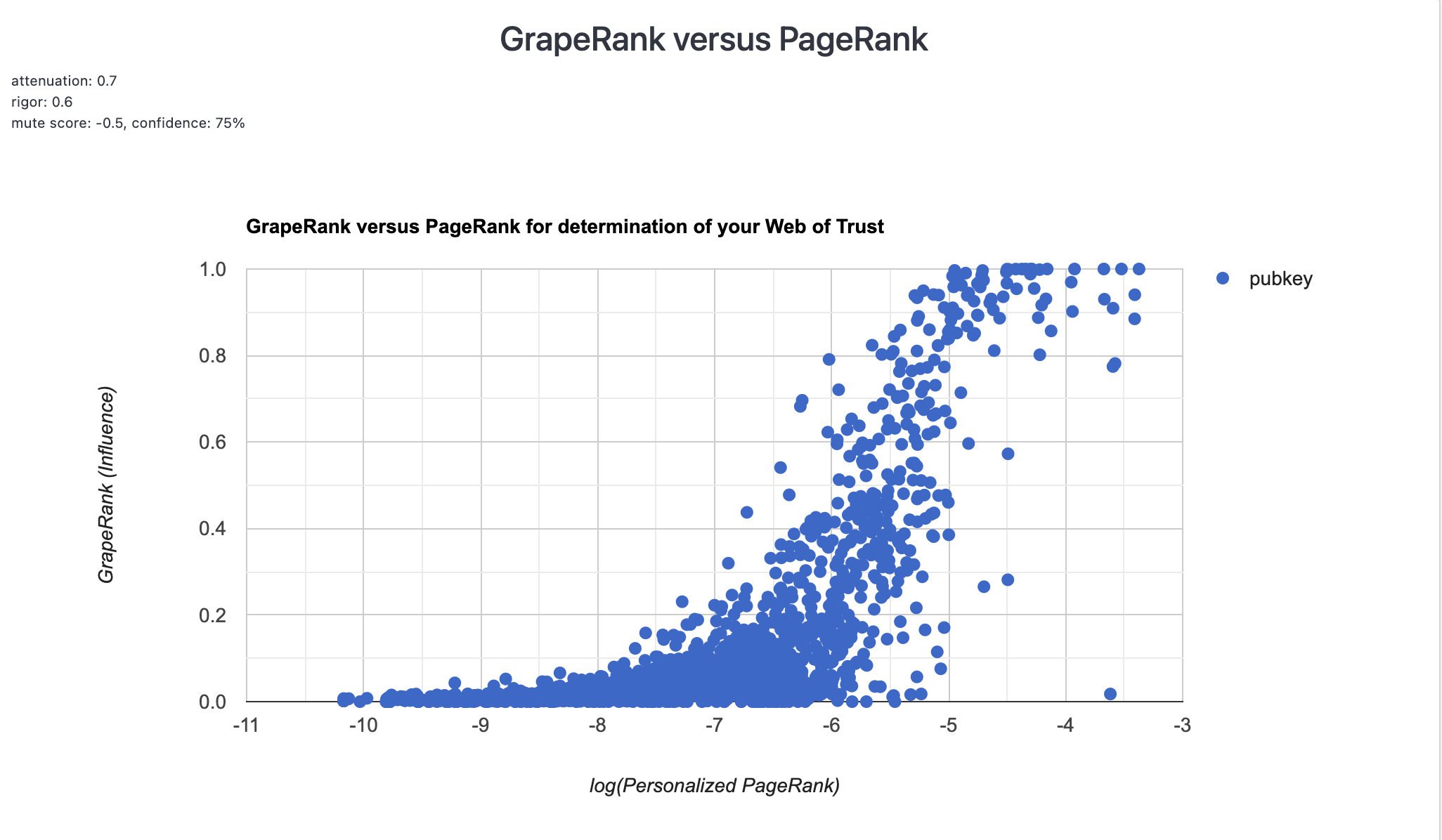

X axis is the log of the personalized PageRank score of each pubkey, with more trusted on the right, less trusted on the left.

Y axis is the GrapeRank score (specifically, the influence) of each pubkey, with more trusted = higher score.

It appears to me that there is a particular group of bots that can be picked up using this graph that would not be readily identified by using either PageRank or GrapeRank alone.

What is the bottom right one?

There are 30 pubkeys above -4 on X axis and below 0.2 on Y axis (the graph shows only a few thousand of the 180k pubkeys in my follows WoT) — Most of them are throwaway accounts I created myself for testing purposes and I’m the only follow. A few are real accounts that I follow but they have only 1 or 2 other follows.

how's it possible that these bots get so much GrapeRank and so little PP?

They all seem to follow each other. If just one of the batch gets followed by a regular user, the GrapeRank score gets off zero, and following each other boosts their scores more.

Interestingly, I think this exact same strategy works in meatspace, and for basically the same reasons.

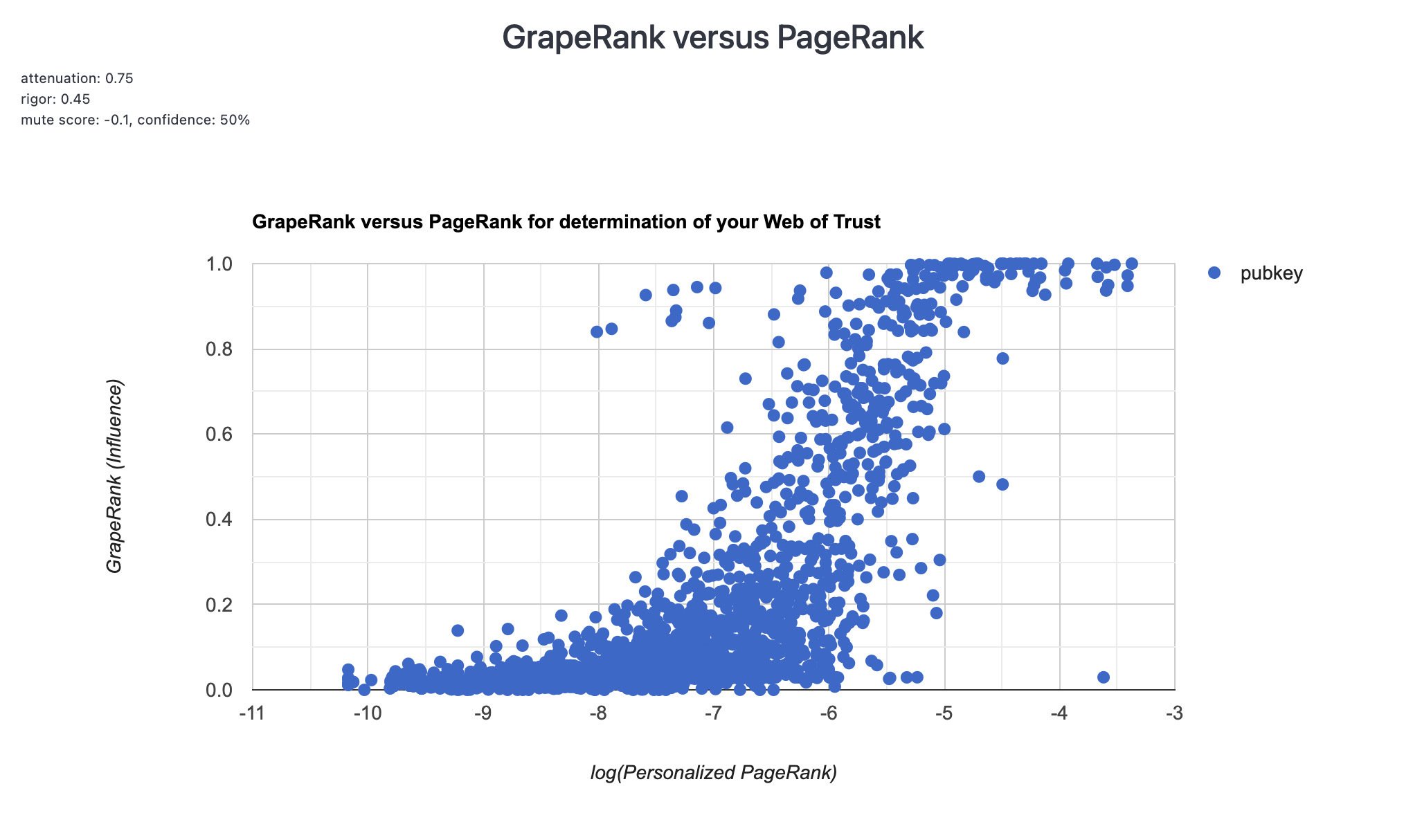

The power of GrapeRank is that there are multiple parameters that can be adjusted to address different attack vectors like this one, and the parameters all have an intuitive interpretation. I’ll get some snapshots and show how that works.

I am using a different idea with PP. First off, it's a monte-carlo version, so I am simulating a bunch of random walks.

Secondly, I've added a new stopping rule: break the walk when a cycle is found.

example: a walk is a bunch of IDs of nodes, like {1, 11, 234, 7, 0}

The walk {1,2,1,2,1,2} contains cycles, so it's stopped earlier at {1,2}. This means 1 and 2 gets visited less often.

Cycles are really improbable for normal users, but highly probable for spam networks. The net result that I've found is basically no change for normal people, and a big de-rank for such networks (their scores go down by a factor of ~3)

I would have expected cycles to be pretty common for normal users. Many of my follows follow each other and also follow me back.

yeah, but follows in random walks.

You see, nodeA follows 100 others. If each of these follows, follow him back and other 99 nodes

then the probability of doing nodeA --> follow --> nodeA is 1/100

Are you saying (or assuming) that when you encounter an army of N bots, then the bot army tends to be more tightly integrated than an organic group of normal users, bc the bot army strategy is for every bot to follow every other bot? Could a more sophisticated bot army then decide not to do this?

I suppose one way to understand why cycles are bad is that you get paths that are infinitely long. GrapeRank addresses this problem using a parameter called attenuation factor, aF, a number between 0 and 1. For any given path starting with the reference user, the weight of each successive hop is multiplied by aF. So the impact of a node that is N hops away on a given path is reduced by aF^N.

Take a look at these 5 graphs and what happens to the batch of bots from beginning to end. As the parameters are adjusted gradually from permissive (first graph) to restrictive (last graph), the GrapeRank scores of the bots gets pushed downward.

true, but almost everyone else gets pushed down as well.

In the above graphs, the grapevine algo interprets a follow as meaning “real nostr user” and a mute as the opposite. So one of the adjustments is to increase the amount of weight given to a mute. This will have more of an effect on the population of bots than other populations.

One of the next steps will be to incorporate reports (kind 1984), which should likewise help in the differentiation. I don’t know if PageRank has a natural method to incorporate different types of edges or not.