it's not the storage requirement that's the problem with using an SD card

it's the sustained read/write during initial block download (which pruned nodes still have to complete). most SD card readers use controllers that aren't suited to that, since they're mostly designed around phones, cameras, and other lower-end devices

does anyone run Bitcoin Core on an SD card?

i'd be surprised if it could get through IBD

a lot of mobile banking apps had service interruptions this monday

on the surface, the media reports it as a rush of demand in dollar trading

but having learned about the disproportionate ratio between actual cash and debt products...

makes me wonder which banks actually hold sufficient dollars on hand, and whether services were shut off because of it 🤔

somewhat relevant: the Taiwan dollar (issued by the Central Bank of China, not *that* china) has capital controls and extensive KYC for USD

🤔 so which is it? you can have whatever impression you want, but this works in a certain way.

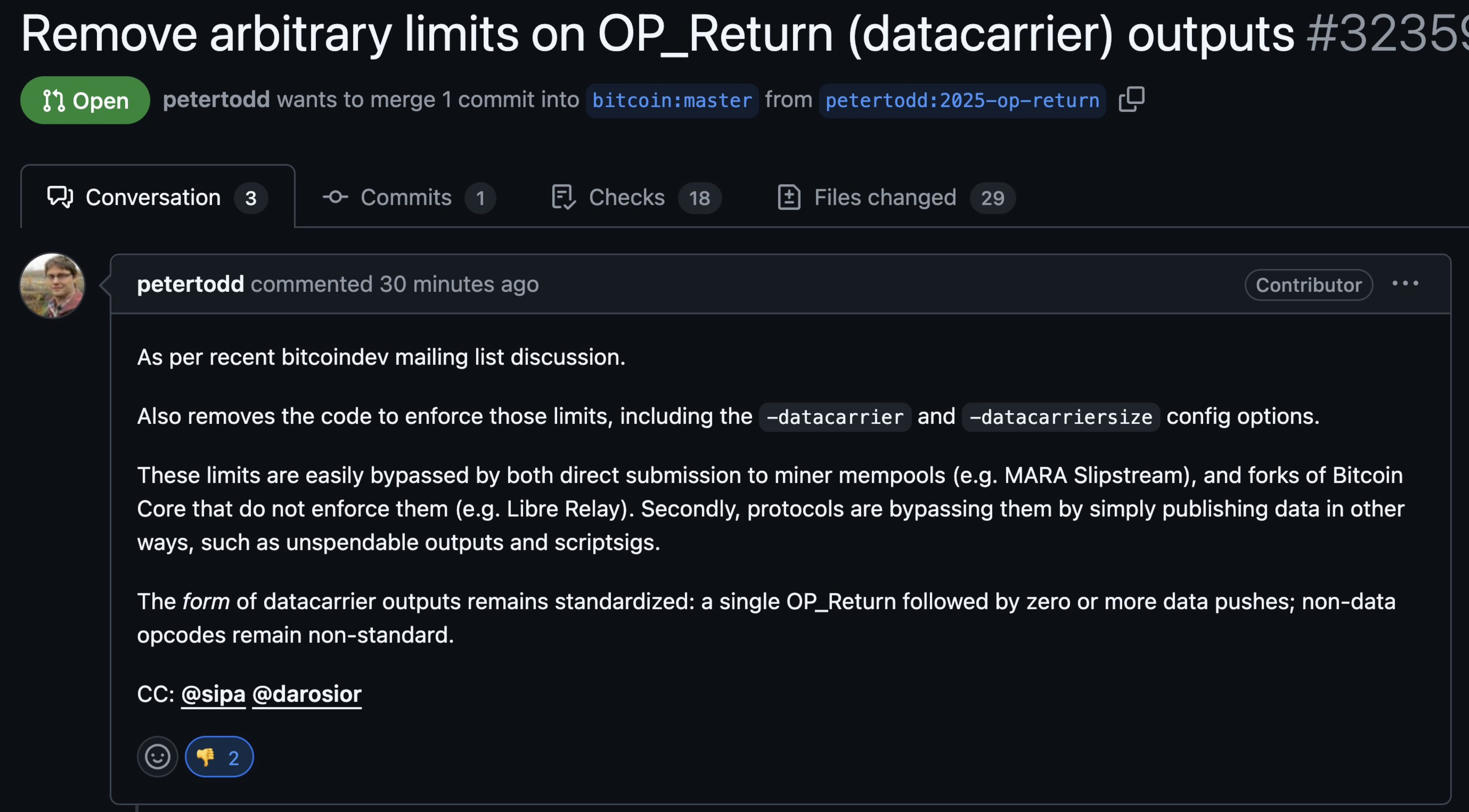

are these larger OP_Return transactions relayed through the network because enough nodes actively configured their nodes to do so (since neither Core nor Knots will do it out of the box; only Libre Relay and some very old versions will), or are these transactions resorting to services like Slipstream because the wider network won't relay them?

because if you're saying these transactions get through via a small subset of configured nodes relaying to one another, consider extending that conclusion to nodes choosing to configure in the other direction too 😅. and if you're saying these transactions had to be sent to the miner directly because the wider p2p network won't relay them, well... seems like you understand that filters work and that it costs something to get around them 🤷

apologies, my nostr edit appears not to have made it to you: "aren't nodes running filters to impede this propagation as a way to marginally discourage the behavior of relaying *blocks with* transactions not accepted by the wider network?"

i think you're mistaking the occasional stunt by miners, throwing whatever random junk they choose into blocks, for a failure of mempool policy. it'd be as absurd as pointing to an empty block and blaming that on mempool policy too

aren't nodes running filters to impede this propagation as a way to marginally discourage the behavior of relaying transactions not accepted by the wider network? that's the entire point, and it works. why wouldn't expanding those filters to include utxo-bloat spam work too?

when a peer relays a bunch of spam to my node, my node basically treats that peer like a block-relay-only peer. it's not my business that he sends those txs to me multiple times, and it's not his business that i refuse to forward them along. in the event that multiple chaintips appear, i'd prefer to relay the block with more of the transactions i've already validated in memory over the one with transactions my node hasn't validated

what do you mean by 'degraded manner' for the network as a whole?

other than lower availability of these spam transactions in any given set of nodes' mempools, it makes little to no difference for a node enforcing existing policy, which describes the network at large. it would only be degraded for participants who want higher availability of arbitrary data before the spam transaction gets mined, which is an even smaller set of people than those who actively upgrade software and/or apply patches.

the argument i see being made is that fee estimation could be messed up, but fees are up to the node. how it calculates fees by its own criteria for what to bid against is nobody else's business and certainly doesn't degrade the rest of the network. the node knows what's best; assumptions about what a miner "might" include in blocks don't need to be (and shouldn't be) forced upon it.

seems like that's something that requires some flexibility and should be left for nodes to decide

i tend to agree that a scenario exists where OP_Return limits should 'eventually' be relaxed, but in my head that scenario is maybe a decade out, if a sustained effort to bloat the chain proves to outweigh users' desire to 'use bitcoin', far beyond the efforts seen today or historically.

what is the acceptable limit of OP_Return? at what point is loosening the limit no different than removing it altogether?

what is your position on how new nodes on the network should be configured? should these limits (or lack thereof) be set in stone, or allow some flexibility through the configuration file like they do today?

while blocks occasionally (or arguably often) contain arbitrary data transactions that bypass limits set by node policy, those OP_Return transactions can only relay across a small subset of nodes on the network (proponents of removing limits often cite Libre Relay and some miners that accept out-of-band payments). whether or not there's an actual need for these larger OP_Returns i have no idea, but it appears those transactions haven't been able to proliferate across the wider network of legacy nodes and have no way of expanding their use, which proves that existing policy is effective. i haven't seen an argument for why new nodes should be expected to join the nodes that relax limits rather than the wider network, which overwhelmingly enforces them.
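for anyone unfamiliar with what "limits set by node policy" boils down to, here's a minimal python sketch of a datacarrier-style relay check. it assumes the long-standing Core defaults as i understand them (datacarrier on, datacarriersize of 83 bytes measured over the whole OP_Return output script, at most one OP_Return output per transaction) and, as a simplification, treats any output script starting with OP_RETURN as a data carrier. the function names are made up for illustration; this isn't Core's actual code, and it only covers the classic OP_Return path, not the inscription matching that 28408 adds.

```python
# hypothetical sketch of a datacarrier-style relay policy check (not Core's code)
# assumptions: datacarrier defaults to on, datacarriersize defaults to 83 bytes
# measured over the whole OP_RETURN output script, and only one OP_RETURN
# output is allowed per standard transaction.

OP_RETURN = 0x6A
OP_PUSHDATA1 = 0x4C
DEFAULT_DATACARRIER_SIZE = 83  # assumed default: 80-byte payload + 3 bytes of opcodes


def op_return_script(payload: bytes) -> bytes:
    """Build OP_RETURN <payload> with the smallest push opcode (payloads up to 255 bytes)."""
    n = len(payload)
    if n <= 75:
        push = bytes([n])  # direct push: the opcode itself encodes the length
    elif n <= 255:
        push = bytes([OP_PUSHDATA1, n])  # OP_PUSHDATA1 followed by a 1-byte length
    else:
        raise ValueError("this sketch only handles payloads up to 255 bytes")
    return bytes([OP_RETURN]) + push + payload


def is_data_carrier(script: bytes) -> bool:
    # simplification: any output script that starts with OP_RETURN counts as a data carrier
    return len(script) > 0 and script[0] == OP_RETURN


def passes_datacarrier_policy(output_scripts, datacarrier=True,
                              max_size=DEFAULT_DATACARRIER_SIZE) -> bool:
    """Return True if a tx with these output scripts passes the sketched check."""
    data_outputs = [s for s in output_scripts if is_data_carrier(s)]
    if not data_outputs:
        return True  # nothing for this filter to look at
    if not datacarrier:
        return False  # operator turned data carrier relay off entirely
    if len(data_outputs) > 1:
        return False  # more than one OP_RETURN output
    return len(data_outputs[0]) <= max_size


if __name__ == "__main__":
    at_limit = op_return_script(bytes(80))    # 83-byte script
    over_limit = op_return_script(bytes(81))  # 84-byte script
    print(passes_datacarrier_policy([at_limit]))                      # True
    print(passes_datacarrier_policy([over_limit]))                    # False
    print(passes_datacarrier_policy([over_limit], max_size=100_000))  # True once loosened
```

loosening the limit is just raising max_size; set it high enough and the check stops filtering anything, which is the "at what point is loosening no different than removing it" question from earlier.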

anecdotally, i've been running Core with patch 28408 for about 18 months and send transactions regularly. my fee estimation has worked fine, and i've been able to keep up validating blocks containing transactions not in my mempool just fine, whether my peers include Bitcoin Knots, Libre Relay, or a peer still running v.15

utxo-bloat methods of storing data have only been tolerated on the network because including them under current policy checks has been controversial. if the concern is addressing utxo bloat, perhaps that discussion should be reopened and a clearer conclusion reached.

"the cat and mouse game is futile. look at all the damage the mouse did. letting out the cat we kneecapped and locked in a cage would only make it worse, so let's delete the cage with the cat in it so there's more room for mice"

Core continues to display a lack of leadership on the topic of arbitrary data.

grant money used on gaslighting instead

does anyone actually run a node off of these?

given the I/O limitations of the controllers, how long does a rescan take, if it can even finish at all? 🫠

i've been running Core with pull request 28408 (match more datacarrying) for well over a year. before discussion on the PR was closed, we applied this patch together at Taiwan BitDevs to show our attendees how datacarrier works (or rather, why it was broken). some users still run that version of Core on their nodes, and some have kept applying the patch up through v28/29.

the users understand how arbitrary data still shows up in blocks and still go through the process on their own. the patch itself works just fine; i don't see why datacarrier should be removed if it's working properly.

i followed the recent mailing list discussion and don't have any strong opinions on the implementations that choose to bypass existing restrictions. it doesn't matter that much to me, but the PR you submitted removes functionality node operators use, and i'm a bit lost as to why.

i don't buy that removing restrictions will reduce utxo bloat; intuitively, it seems like it would make the situation worse

i don't have a random fancy whitepaper to kick off discussion like the ML did; i can only ask what's wrong with fixing it

on a positive note, at least there's acknowledgement that datacarrier is dysfunctional. best of luck with your efforts to remove it; i'd even prefer that result over doing absolutely nothing.

Core should just remove datacarrier altogether if they're being honest. it's a shame, because the fix from december '23 works just fine 🥲

this episode of "oh no Core, there's this whitepaper out there for another form of arbitrary data storage, what should we do? it's out of our hands 😵💫, let's go tweak OP_Returns" doesn't make any sense.

watching this laser-eyed, i can't help but think the shinkansen gone awry is symbolic of the runaway disaster that is the japanese economy, where people continue to act normal in the face of absurd circumstances.

like, there's this scene where they're calculating the trajectory of the runaway train and it takes a whole team of people using rulers and paper to reach a conclusion (while going through the whole chain of command). like come on, being thorough is fine, but use some software 😅 meanwhile the rescue attempts get bogged down by bureaucracy.

"nothing stops this train" -- perils of central planning

oh well, at least a fax machine didn't show up

i suppose it's healthier, but i kinda feel bad for kids these days. their chocolate bars, bubblegum, and hard candies all suck now

smaller portions, worse ingredients 🥲

nah, different film. just came out

plot aside, the film does a pretty great job introducing the details of the shinkansen; apparently there was a lot of collaboration with JR East on the film.

watched Bullet Train Explosion (2025) on netflix. it seemed like a cash-grab sequel to an old 70s japanese film and i expected it to be pretty silly

the whole time im thinking

"nothing stops this train" 🤣🤣

it's an optical illusion from a layered window pane that was made into a viral video last year

in chinese mythology, the legend is that the world started with ten suns, which one day decided to cause havoc on earth by appearing together instead of one after another. the hero Hou Yi was tasked with shooting down the suns and, thankfully, spared one