They've taken a very light approach, but the upside is that it doesn't make anything worse. Sometimes that's all you need from technology.

If you want to actually use AI, LLM Farm will let you run an integer quantization of Phi-3-mini. It won't blow you away, but it's conversational and 100% local.

Well that limits things. I've noticed that autocomplete will do math with unit conversions as well. And the Spoken Content voices are better, if you'd like it to read a web page or iBooks content. I don't know if that's "Apple Intelligence" or just the new OS.

I like it so far. It reduces notification clutter, pulls out the emails that I probably shouldn't miss, and ... well that's basically all I use it for but damn those can be worth it. All local, and never in my way.

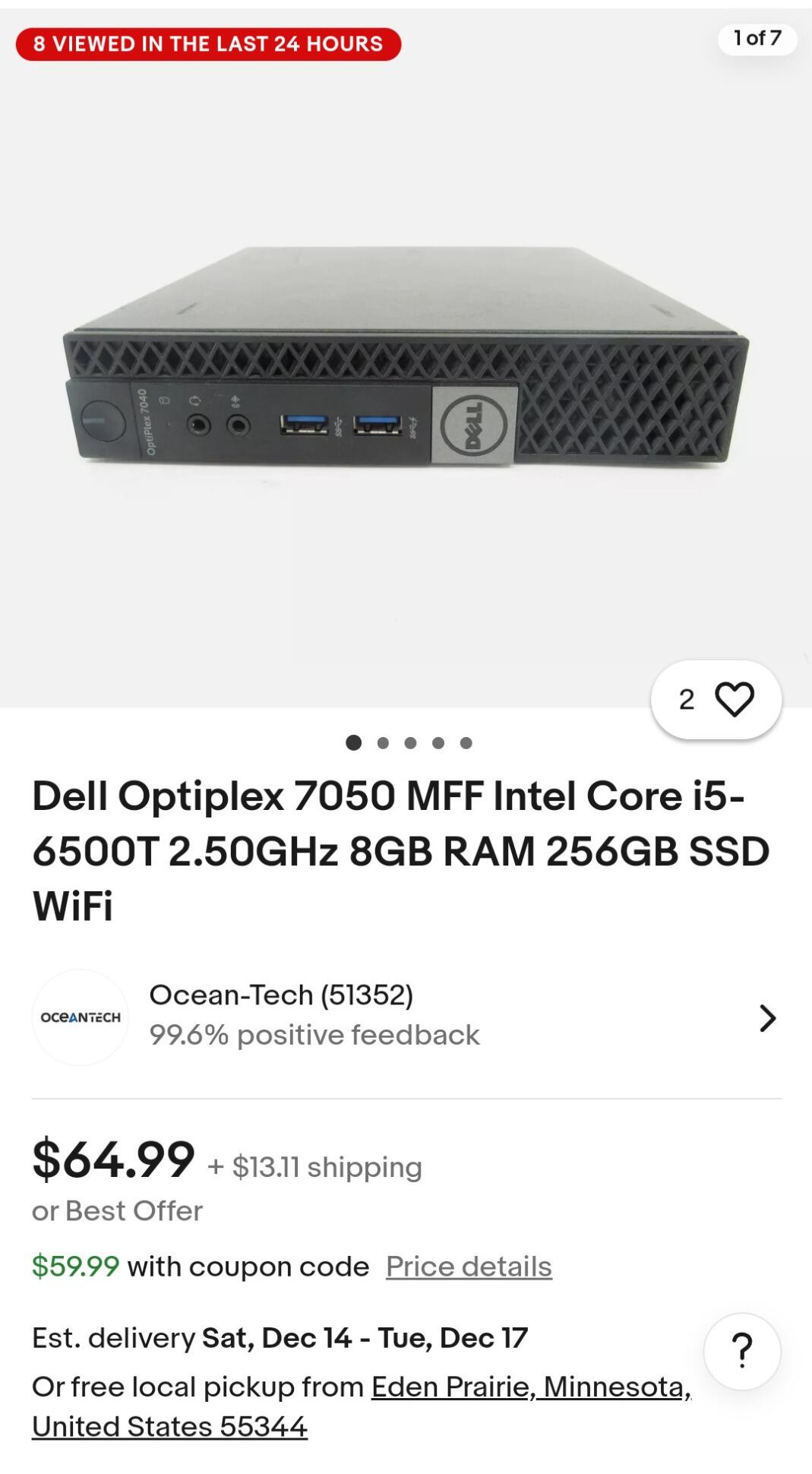

I prefer an RPi based on noise / heat / power, but if you have space in a well insulated and cooled closet, people always underestimate the value of used corporate gear. Many of the lemons would have died and been replaced under warranty, so those that make it to the secondary market often live longer than expected.

El Salvador to scale back bitcoin dreams to seal $1.3bn IMF deal

Politicians always disappoint. Here it is.

https://www.ft.com/content/847cdb57-2d56-4259-ab8e-f95032efa259

I don't see it as failure but as pragmatic success. Bitcoin can still be accepted, they can keep everyday life stable, and they set the precedent that you can have national adoption of Bitcoin without being cut off from traditional options.

It's always a marathon, rarely a sprint.

OTOH... Nothing bites harder than DNS

They offer many services. I'm talking about using WARP to shield yourself from your ISP: swapping one monopoly for a different one. You could also use one of many other VPN providers instead of WARP.

But people also wrap Cloudflare around their DC, and that dramatically centralizes things. Cloudflare is almost certainly the NSA's best friend.

Unless you're using a well known VPS, you're probably their only customer who legitimately has other options 🫡

Living in the future is always some combination of expensive and inconvenient. But who doesn't want to live in the future?

Ten times the money might get you twice the performance. But a server can get you ten times the performance for about the same price. This is what makes Ollama so compelling.

It's nice to see a 4B model on my phone, but on a PC in the closet I can run a 70B. These are the trade-offs you make to live in the future (meaning, ahead of the curve).
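For a rough sense of why the phone tops out around 4B while the closet PC can take a 70B, here's a back-of-the-envelope sketch. It only counts the weights and assumes a flat bits-per-weight figure; the `weights_gb` helper is my own name, and real quantized files run somewhat larger, with context memory and runtime overhead on top.

```python
def weights_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in GB (10^9 bytes).

    Assumption: every weight is stored at the same bit width. Real
    quantization formats add scales and metadata, so treat this as a floor.
    """
    return params_billions * bits_per_weight / 8


for name, params in [("4B", 4), ("70B", 70)]:
    at_4bit = weights_gb(params, 4)
    at_16bit = weights_gb(params, 16)
    print(f"{name}: ~{at_4bit:.0f} GB at 4-bit, ~{at_16bit:.0f} GB at 16-bit")
```

Even at 4-bit, the 70B needs tens of GB just for weights, which is PC-in-the-closet territory rather than phone territory.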

Two? Through separate providers? I'm not saying I haven't done it, but server uptime is not the same as availability over the net.

🤷🏻♂️ It's a minor point. I've served plenty of things from a home PC. It's even more reliable now that we have better VPN / Cloudflare options, because it's harder for your ISP to differentiate the traffic.

Well... if the server has it queued up in memory, and you have a 10 Tbit link throughout, and there's nothing else using bandwidth, etc etc.

In practice my 2.5 Gbit fiber hardwired to a 10 Gbit router, hardwired to a 1 Gbit PC with plenty of CPU and memory rarely pulls 100 MB/s. That said, on the rare occasion it does (usually from Microsoft or Steam), I wish that I had sorted out a better link from the router to the PC.
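The arithmetic behind that, as a quick sketch: the slowest hop sets the ceiling, and 1 Gbit works out to 125 MB/s before any protocol overhead. The link names and the `mb_per_s` helper are just labels I made up for the three hops described above.

```python
def mb_per_s(gbit: float) -> float:
    """Convert a line rate in Gbit/s to MB/s (decimal units, 8 bits per byte)."""
    return gbit * 1000 / 8


# Nominal line rates for each hop, in Gbit/s (taken from the setup above).
links = {"fiber": 2.5, "router": 10.0, "pc_nic": 1.0}

# The end-to-end ceiling is set by the slowest hop in the chain.
bottleneck = min(links, key=links.get)
ceiling = mb_per_s(links[bottleneck])

print(f"bottleneck: {bottleneck}, ceiling ~{ceiling:.0f} MB/s before overhead")
```

So a download near 100 MB/s is already close to what the 1 Gbit hop can carry, and the 2.5 Gbit fiber only pays off once that last link is upgraded.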

And even WiFi 6's relatively high latency still makes for playable VR on a Quest 2. We always have more than we think, but less than we want.

WiFi 7 is additionally supposed to have a 1 msec latency mode ⚡️

Gun advocates certainly think so. Funny that service members don't.