You can branch in the ChatGPT interface by editing your message or regenerating the response. There's more flexibility in the API playground "chat" interfaces. I'm not sure how you'd merge conversations, though. Obviously you can submit any sequence of messages as the conversation history, but there's internal state that won't transfer by simply merging the messages.
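The "submit any sequence of messages" route can be sketched like this, assuming the common chat-completions message shape (a list of `role`/`content` dicts). `merge_branches` and `shared_prefix_len` are hypothetical helpers, not part of any SDK, and nothing here calls an API: it only builds the history you would send.

```python
from typing import TypedDict


class Message(TypedDict):
    role: str  # e.g. "system", "user", "assistant"
    content: str


def shared_prefix_len(a: list[Message], b: list[Message]) -> int:
    """Count the leading messages two branches have in common, verbatim."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


def merge_branches(a: list[Message], b: list[Message]) -> list[Message]:
    """Hypothetical merge: all of branch A, then B's messages after the fork.

    Only the visible transcript is spliced together; nothing else from
    either branch comes along.
    """
    k = shared_prefix_len(a, b)
    return a + b[k:]


# Two branches that fork after a shared system prompt.
root: list[Message] = [{"role": "system", "content": "Be terse."}]
branch_a = root + [
    {"role": "user", "content": "Name a prime."},
    {"role": "assistant", "content": "7"},
]
branch_b = root + [
    {"role": "user", "content": "Name an even prime."},
    {"role": "assistant", "content": "2"},
]

merged = merge_branches(branch_a, branch_b)
# merged holds 5 messages: the shared system prompt, then A's turns, then B's
print([m["content"] for m in merged])
```

A real merge would need a policy for conflicting turns; this one just appends B's fork after A's, which is the plain "sequence of messages" workaround and nothing more.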

Might want to brush up on your ConLaw: there's no mention of "citizens" here:

"Congress shall make no law respecting an establishment of religion, or prohibiting the free exercise thereof; or abridging the freedom of speech, or of the press; or the right of the people peaceably to assemble, and to petition the Government for a redress of grievances."

I understand your sentiment, but that isn't how a nation of laws works. Perhaps, though, we are no longer a nation of laws

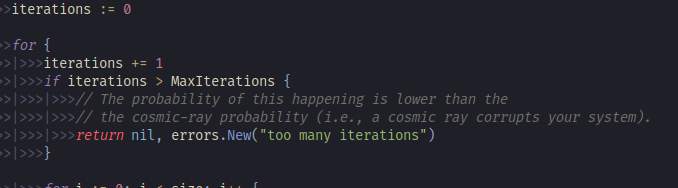

Once you see that synthetic intelligence provides a near-universal incremental step, every functional programmer knows what comes next
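One reading of "what comes next": a step that reliably makes a little progress gets iterated until it stops changing anything, i.e. run to a fixed point. A minimal Python sketch under that assumption; `fix` is a hypothetical helper, and `bubble_pass` is only a toy stand-in for the incremental step (in practice, perhaps a model call), chosen so the example runs deterministically.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def fix(step: Callable[[T], T], x: T, max_iters: int = 10_000) -> T:
    """Apply `step` until the value stops changing (a fixed point)
    or the iteration budget runs out."""
    for _ in range(max_iters):
        nxt = step(x)
        if nxt == x:
            return x
        x = nxt
    return x


def bubble_pass(xs: tuple[int, ...]) -> tuple[int, ...]:
    """Toy incremental step: one pass of adjacent swaps."""
    ys = list(xs)
    for i in range(len(ys) - 1):
        if ys[i] > ys[i + 1]:
            ys[i], ys[i + 1] = ys[i + 1], ys[i]
    return tuple(ys)


print(fix(bubble_pass, (5, 3, 4, 1, 2)))  # (1, 2, 3, 4, 5)
```

The `max_iters` cap matters once the step is a model call: an arbitrary step function has no guarantee of converging, so the loop needs a budget.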

AI is like brute force: if it isn't working, you aren't using enough of it

nostr:note1nhdv4yrn6ct6vdc34gtqd2ghdkmjnfcwdevr7uu8pxea5h7alc2qkk9ml7

When it really matters, you should run Tor over the VPN

He's a permanent resident. And you're okay with the de facto suspension of habeas corpus? I've read too much history to agree with your points.

https://en.m.wikipedia.org/wiki/Detention_of_Mahmoud_Khalil

In a way, you're technically correct, since he was detained without any charges at all.

Am I supposed to Google it for you?

Vibe coding is a universal Rails that can write its own tests. How you wield it is on you

Everyone who's used Cursor can't stand goose

... until they go back to Cursor

Happens all the time. Read the news. Read some Zinn

Freedom of speech is highly uneven in the US. I have no doubt that you or I could do this, but others are not so well protected

Crazy? Could easily be said of Somerville or Manhattan

Spending $200 on tokens takes a lot more work than eating fries

"Claude sometimes thinks in a conceptual space that is shared between languages, suggesting it has a kind of universal “language of thought.” We show this by translating simple sentences into multiple languages and tracing the overlap in how Claude processes them.

"Claude will plan what it will say many words ahead, and write to get to that destination. We show this in the realm of poetry, where it thinks of possible rhyming words in advance and writes the next line to get there. This is powerful evidence that even though models are trained to output one word at a time, they may think on much longer horizons to do so."

It's always been latent space

"We find that the shared circuitry increases with model scale, with Claude 3.5 Haiku sharing more than twice the proportion of its features between languages as compared to a smaller model" ([source](https://www.anthropic.com/research/tracing-thoughts-language-model))

Evidence that capability comes from the number of things a model "groks", not from more parameters. Larger models have more room for memorization, yet they're actually using *less* space? Once we figure out how to get smaller models to grok more, we'll get Claude 3.7-level capabilities out of local models

The NSA already

- has your cloud photos

- knows everyone you meet with

- knows who Satoshi is

Know your threat model