$ ollama list

llama3.1:70b-instruct-q2_k, 26 GB

llama3.1:70b, 39 GB

codellama:13b, 7.4 GB

llama3.1:8b, 4.7 GB

ollama seems to load as much as it can into VRAM, and the rest into RAM. Llama 3.1 70b is running a lot slower than 8b on a 4090, but it's usable. The ollama library has a bunch different versions that appear to be quantized: https://ollama.com/library/llama3.1

4090. I haven't had much time to compare any models yet, and I don't know how to read those comparison charts. I think larger models can be quantized to fit into less VRAM but performance suffers as you get down to 4 and 2-bit.

I've built a few outdoors. Need to post more about them. https://video.nostr.build/65424c87b03381012d6c4b484c15e00e8649c44bfa1d57b261c7d4dac8f7ae59.mp4s.

AI noob checking in. ollama running llama3.1:8b is using 6.5 GB VRAM. The weights for 8b are 4 GB.

This finally got me to try a self-hosted LLM. Let me know if you find any good models. ollama was super easy to get running.

You can always write in John Galt.

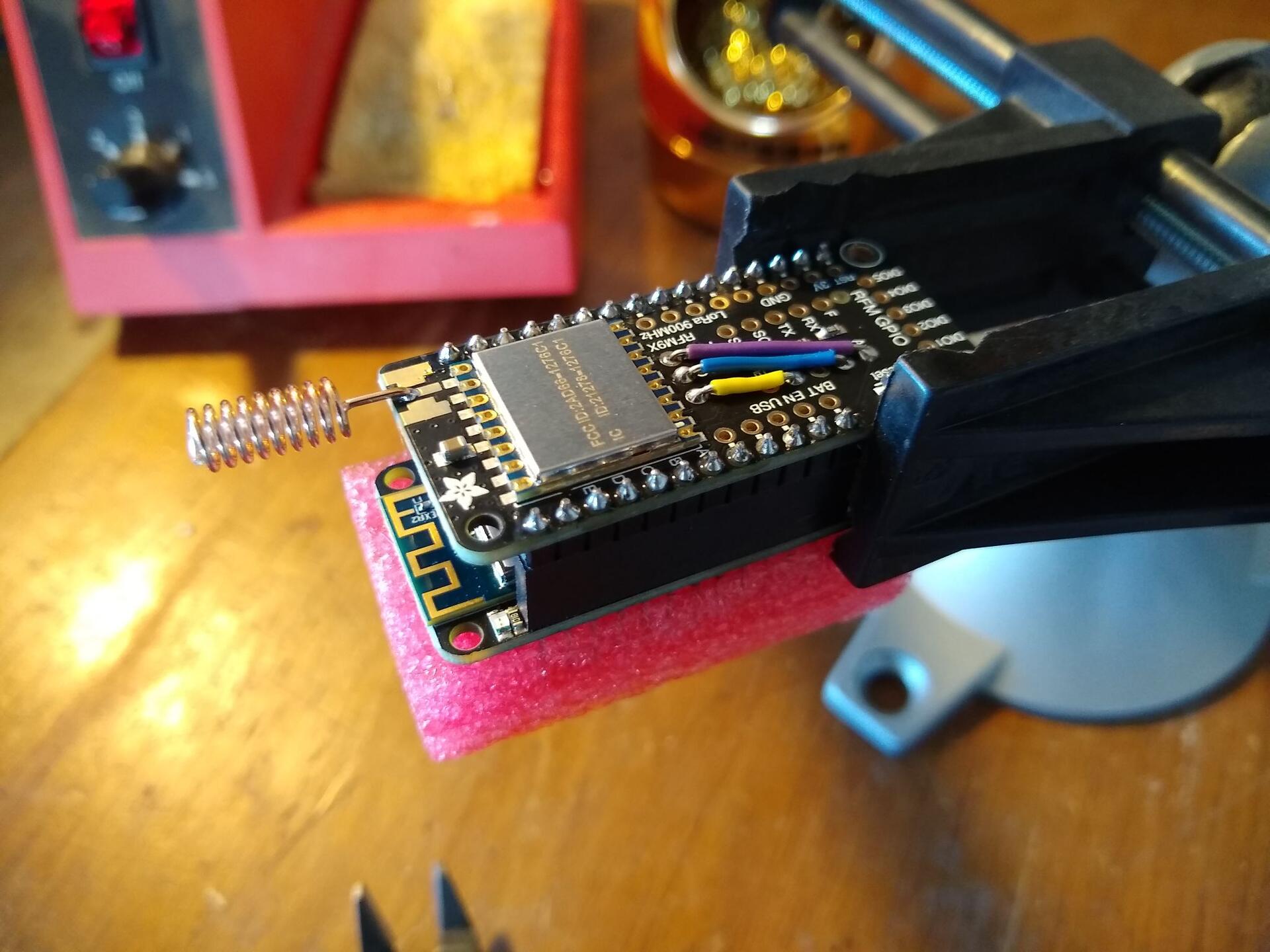

Compost sensor is receiving data and transmitting to Adafruit IO. #compost

Heard it on nostr first. This election has been excellent theater so far, and we have months to go.

S9 can be very quiet but requires investing in new fans and opening up the PSU to replace the fan.

Yes, it will receive data from the sensors over LoRa and forward them over wifi. I'm using Adafruit IO to store the data and provide charts for this prototype, but MQTT to Home Assistant should be easy.

Got the LoRa to wifi gateway soldered. Back to coding...

Mood: burger time (please stay off me)

LoRa sensors are easy to put together with these Adafruit Feather boards, but may not scale well due to the price. Hardest part for me was soldering the headers, but you get a lot of practice doing it.

Adafruit Feather M0 with RFM95 LoRa radio, and PT1000 thermocouple with MAX31865 amplifier.