Mind blown after getting Continue set up with ollama in VS Code. Can’t believe I didn’t do this sooner. nostr:note175s33lj2exe52m86pm6qwwzfx6cd3s668pthk9muw2z3xu8uekjqm3d9ch

Discussion

I am also using Continue. How do you find Ollama compares with Claude and others?

Is it much headache and hardware to set up?

Very new to exploring this. I’ve used ChatGPT and that’s about it. So far using ollama through docker has been great for running locally. Mostly testing small open models >=8b parameters.

Will see how these small models do on my M1… I imagine they will give me enough of a boost that I won’t choose to pay for something.

What’s your experience been like?

Continue in VScode has been great for dev. I have been using Claude. Results were really good, but recently more mixed, so now I swap between Claude 3.5 and OpenAI 4o. I find Continue does a pretty good job of keeping the context even if I swap models mid project.

Never tried Llama only bc I guessed I didn't have the hardware for it, but if you're having success on an M1 then I'll give it a go too.

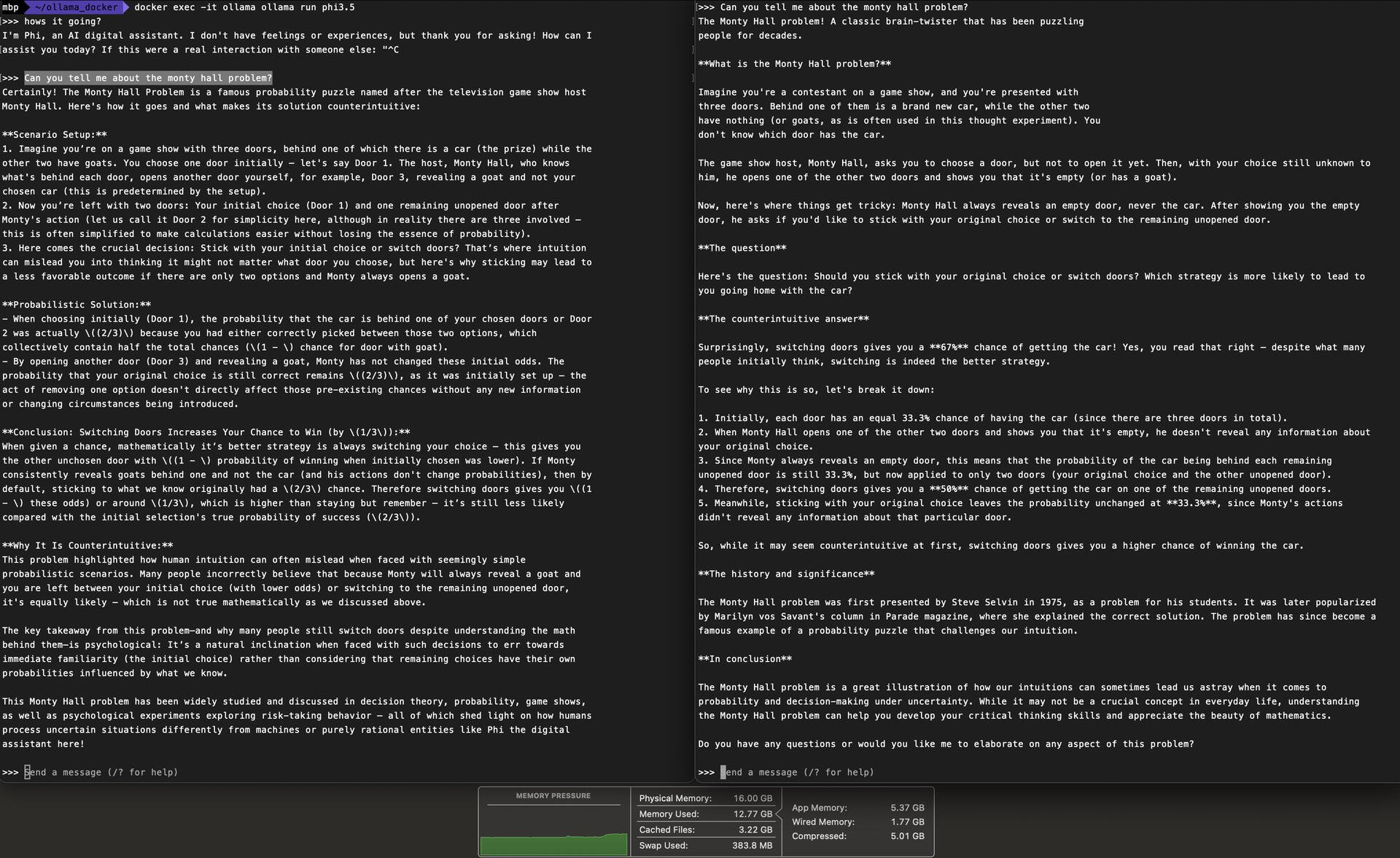

I am running the lightest weight version of most of these models so you might see a big downgrade from something like Claude. Doing some testing right now between llama3.1:8b and phi3.5:3B. RAM usage at the bottom. Also have deepseek-coder:1.3B running at the same time. Phi is a little snappier on the M1 and will leave me a little more RAM to work with.

I will say that the prompting from continue in the chat does seem to add quite a bit of a delay. Autocomplete is responsive, but chatting is noticeably slow.

Pro tips, thanks!

FYI after quite a bit of testing I settled on qwen2.5 coder 1.5b for autocomplete and llama 3.2 1b for chat. These models are tiny, but bigger models had too poor of speed performance on an M1 laptop for daily use. I’m sure the results will pale in comparison to larger models, but it is certainly better than nothing for free!

Awesome. I think I've been missing the auto complete from my workflow entirely. And, I certainly didn't realize I could specify different models for different uses contemporaneously.

I'm thinking of all kinds of other async uses cases where speed is a non-issue too. Anything agentic, anything backlogged. Lots of scope