I thought Llama was FB's. Is it a collaboration between FB and Nvidia?


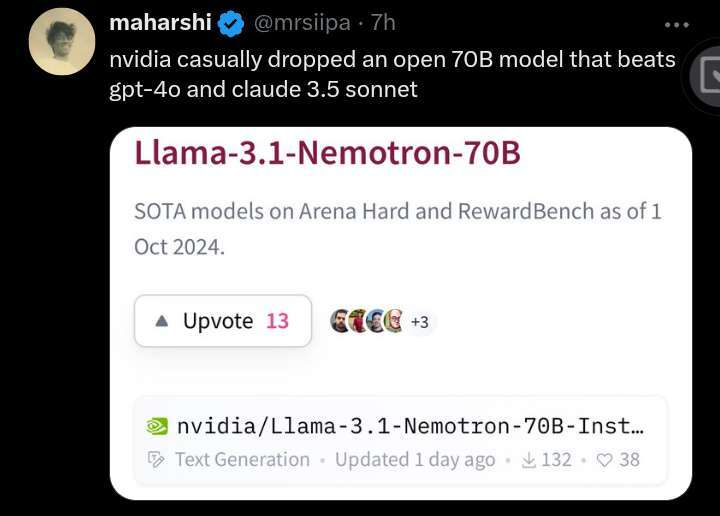

Pretty sure Llama is all Meta. But tons of people have dropped forks and fine-tunes of it since the weights are freely available. This is likely NVIDIA just working off their base model and applying their RLHF tricks or whatever to make it better.

It's open source, so I'm assuming this is Nvidia's modified version of the 70B model.

Now I just need 80 GB of VRAM to run it...

You really only need 48 GB to run a respectable quant. Or 24 GB of VRAM plus system RAM if you're patient.
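A rough way to sanity-check these figures: weight memory is about parameter count × bits per weight ÷ 8. The sketch below assumes a nominal 70 billion parameters and ignores the KV cache and runtime overhead, so real usage runs somewhat higher; `weight_gb` is a hypothetical helper, not part of any library.

```python
def weight_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes).

    Assumption: only the weights are counted; KV cache, activations and
    runtime overhead are ignored, so this is a lower bound.
    """
    return n_params * bits_per_weight / 8 / 1e9


N_PARAMS = 70e9  # nominal size of a "70B" model; real parameter counts differ slightly

if __name__ == "__main__":
    for label, bits in [("FP16", 16), ("8-bit", 8), ("~4.5-bit quant", 4.5), ("4-bit", 4)]:
        print(f"{label:>15}: {weight_gb(N_PARAMS, bits):6.1f} GB")
```

By this estimate FP16 needs about 140 GB, 8-bit about 70 GB (roughly where an 80 GB card comes in), and a 4-bit quant about 35 GB, which leaves some headroom on 48 GB for context.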

It's early days, but eventually most PCs will be tailored to run models, so in 5-10 years machine specs will catch up and the models will also become more efficient.

Have you seen this: https://youtu.be/GVsUOuSjvcg

I'm not patient. Also, I only have 12 GB of VRAM + 24 GB of system RAM.
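With partial offloading (for example llama.cpp's `--n-gpu-layers` option), some transformer layers sit in VRAM and the rest run from system RAM on the CPU, which is where the slowness comes from. A minimal sketch of that split for 12 GB VRAM + 24 GB RAM, assuming 80 equal-sized layers (the layer count of Llama 2/3 70B), a hypothetical 2 GB VRAM reserve for context, and ignoring the RAM the OS itself needs; `split_layers` is a hypothetical helper.

```python
N_LAYERS = 80  # assumption: Llama-2/3-style 70B models have 80 transformer layers


def split_layers(model_gb: float, n_layers: int, vram_gb: float, ram_gb: float,
                 vram_reserve_gb: float = 2.0) -> tuple[int, int]:
    """Return (gpu_layers, cpu_layers) for a model split evenly across layers.

    Rough assumptions: every layer is the same size, embeddings and KV cache
    are ignored, and vram_reserve_gb is held back on the GPU for context/runtime.
    Raises MemoryError if the layers left for the CPU do not fit in ram_gb.
    """
    per_layer = model_gb / n_layers
    usable_vram = max(vram_gb - vram_reserve_gb, 0.0)
    gpu_layers = min(n_layers, int(usable_vram // per_layer))
    cpu_layers = n_layers - gpu_layers
    if cpu_layers * per_layer > ram_gb:
        raise MemoryError("model does not fit in VRAM + system RAM")
    return gpu_layers, cpu_layers


if __name__ == "__main__":
    for bits in (3, 4):
        model_gb = 70e9 * bits / 8 / 1e9  # weights-only size of a 70B model at this bit width
        try:
            gpu, cpu = split_layers(model_gb, N_LAYERS, vram_gb=12, ram_gb=24)
            print(f"{bits}-bit ({model_gb:.1f} GB): {gpu} layers on GPU, {cpu} in system RAM")
        except MemoryError as err:
            print(f"{bits}-bit ({model_gb:.1f} GB): {err}")
```

Under these assumptions a ~3-bit quant just squeezes in (30 layers on the GPU, 50 in RAM) while a 4-bit quant does not, and in practice the OS and other programs eat into that 24 GB too.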

I wonder what the cheapest way is to get 48 GB of VRAM in a local machine.

Llama is an open-source model; anyone can build on it. That's why Zuck said he open-sourced it: so anyone can do the work and make it better for him.