Yeah, GitHub is the #1 way for devs to communicate, and Nostr devs aren't an exception. You basically HAVE to at least mirror something on there, or you don't exist.

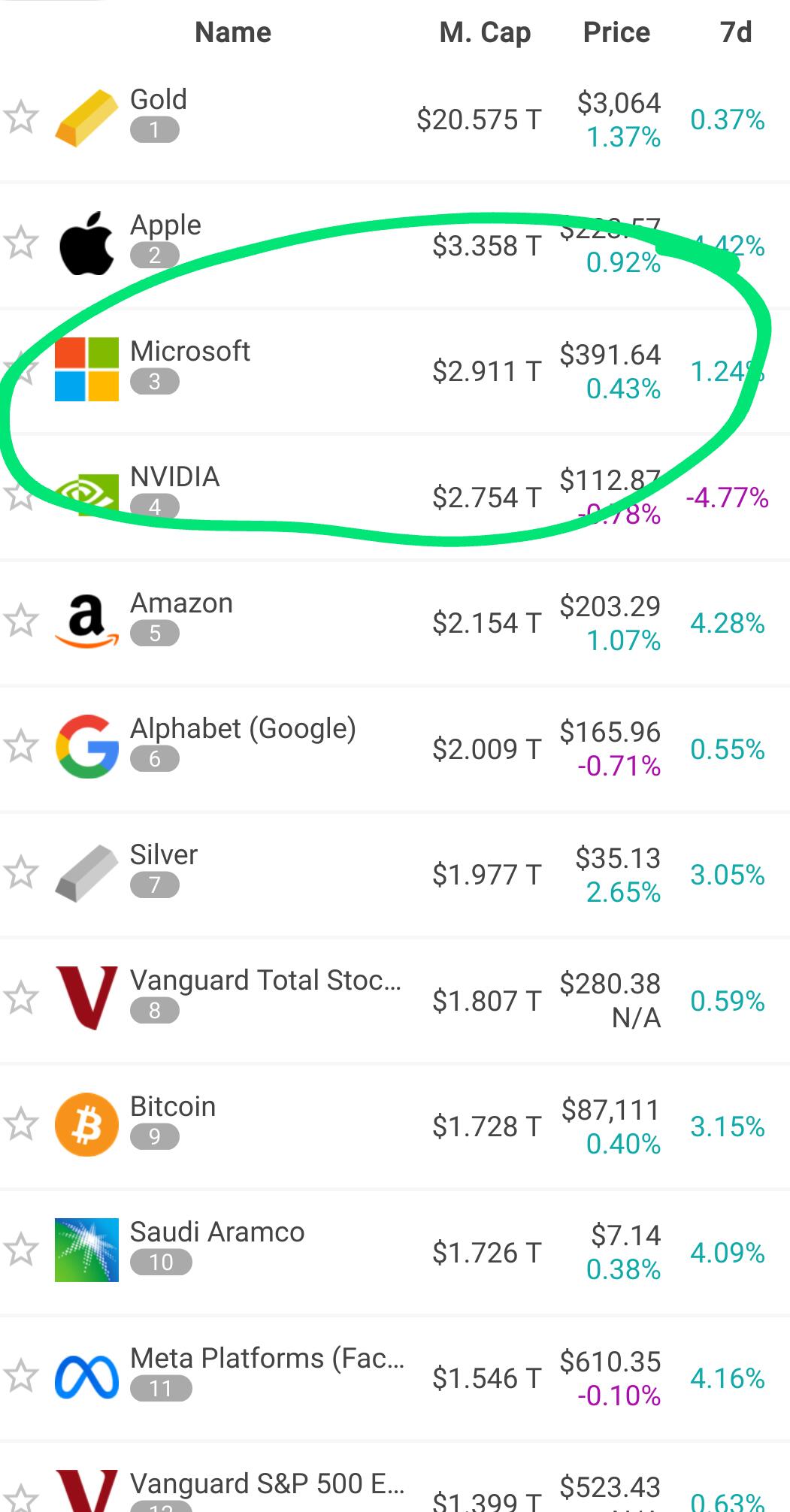

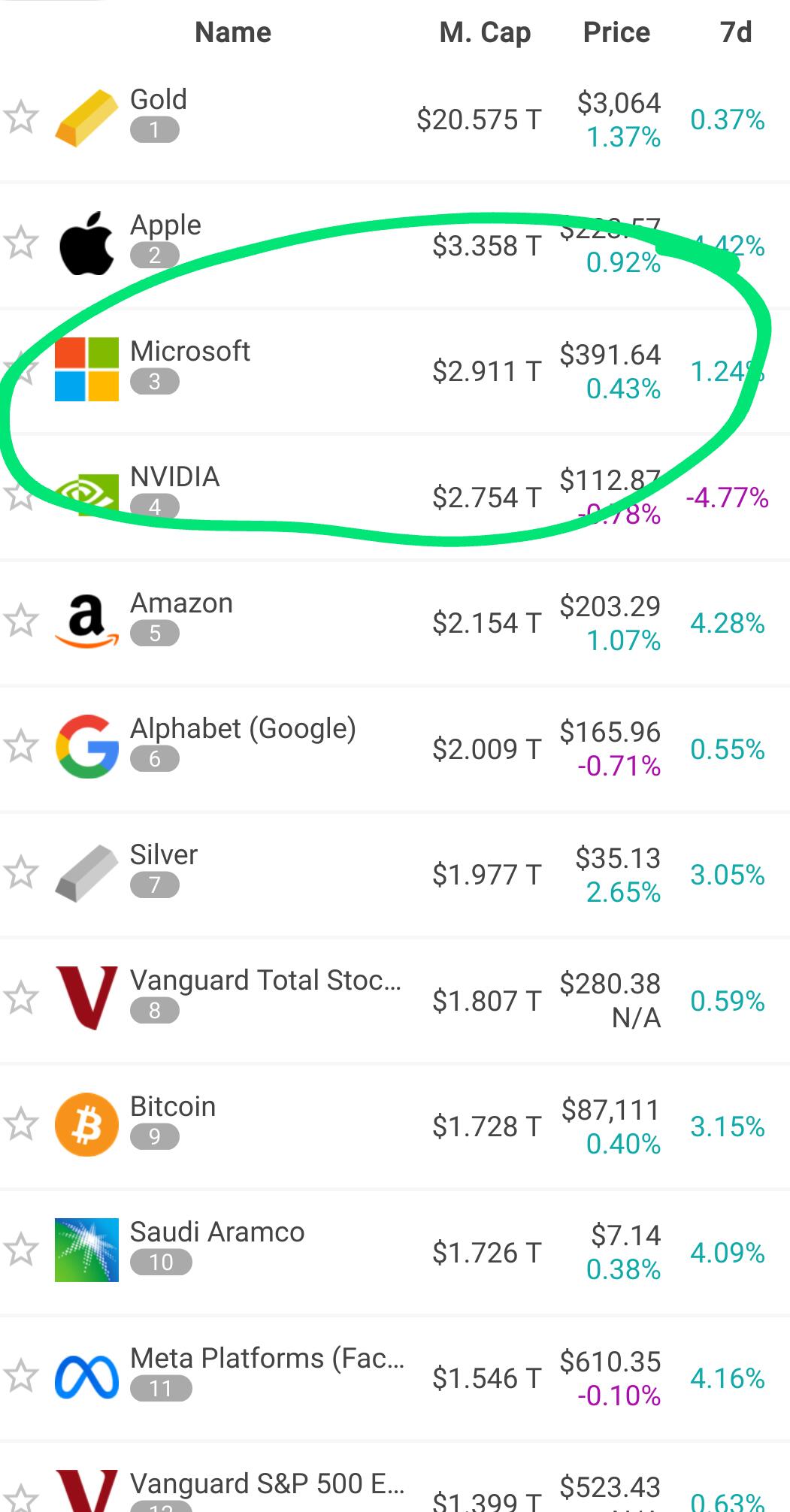

They have a real stranglehold on almost all development teams on the whole planet, because they control everyone's repos and interactions. It's the same way Office 365 is nearly impossible to get away from in the corporate world. Owning both of them is why Microsoft is so gigantic.

We can't compete with that level of centralization, but we're determined to offer a complete, professional, FOSS, decentralized setup that can eventually be installed on lots of people's home or work servers. This gitserver is the Mother Server, so to speak. It's where we'll be beta testing everything, and it will be set up for SEO and AIO, but it should eventually just become one of many, because relays mean that we will all be able to look at, and interact with, the same things from different places. Since we're such an infrastructure-focused project team, we decided that this could be our first big contribution to the effort.

Nostr wants to make git distributed again, and we support that message.

Shouldn't be too hard. 😁 Here is the competition, Nostr:

I forgot to hit send on this earlier today, but speaking of David vs. Goliath, if you haven’t done it already, make sure to tarpit Alexandria, TheForest1, GitCitadel, and anything else you’re deploying that is expected to hold a lot of data. Otherwise, scrapers will come for the goods, overload the infrastructure and cost you quite a few sats.

https://arstechnica.com/ai/2025/03/devs-say-ai-crawlers-dominate-traffic-forcing-blocks-on-entire-countries/

https://zadzmo.org/code/nepenthes/

https://blog.cloudflare.com/ai-labyrinth/

this stuff annoys the hell out of me too, i run my test #realy fairly infrequently but within minutes some bot is trying to scrape it for WoT relevant events, and i wish there was an effective thing to slow that down

i tried adding one kind of rate limiter but that didn't really work out so well, in the past what i've seen work best tends to be where the relay just stops answering and drops everything that comes in... probably if that included pings the other side would automatically drop

i've now added plain HTML and i think that requires something also but maybe more simple, like, if it gets a query more than once every 5 seconds for 5 such periods it steadily adds more and more delay in processing. the difference from sockets is that on http it has to be associated with an IP address
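
A rough sketch of that escalating delay, keyed by IP address. This is only one reading of the idea above, not what realy actually does: a 5-second window counts as "busy" when it sees more than one query, delays start at the fifth busy window, and an idle or quiet window forgives one strike. `EscalatingDelay`, `DELAY_STEP` and `MAX_DELAY` are made-up names and values.

```python
import time
from dataclasses import dataclass

WINDOW_SECONDS = 5        # length of one period, from the post above
STRIKES_BEFORE_DELAY = 5  # busy periods needed before delays kick in
DELAY_STEP = 0.5          # assumed: seconds added per busy period past the limit
MAX_DELAY = 30.0          # assumed cap so a delay never grows without bound


@dataclass
class _Client:
    window: int       # index of the window this client was last seen in
    count: int = 0    # queries seen in that window
    strikes: int = 0  # busy windows currently held against the client


class EscalatingDelay:
    """Tracks per-IP query rates and returns how long to stall each query."""

    def __init__(self):
        self._clients = {}

    def delay_for(self, ip, now=None):
        now = time.monotonic() if now is None else now
        window = int(now // WINDOW_SECONDS)
        c = self._clients.setdefault(ip, _Client(window))
        if window != c.window:
            # Forgive one strike per window that was idle or quiet.
            quiet = window - c.window - 1
            if c.count <= 1:
                quiet += 1
            c.strikes = max(0, c.strikes - quiet)
            c.window, c.count = window, 0
        c.count += 1
        if c.count == 2:
            # Second query inside one window: the window is now busy.
            c.strikes += 1
        over = c.strikes - STRIKES_BEFORE_DELAY + 1
        return min(MAX_DELAY, over * DELAY_STEP) if over > 0 else 0.0
```

The HTTP handler would sleep for `delay_for(remote_ip)` seconds before answering. A client that polls once per window never builds up a strike, so it never gets delayed.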

I'm assuming your first-level WoT can auth and get around delays. Otherwise, uploading publications would collapse.

yeah, they are only reading, not writing, so all that would really be required is to slow them down

probably could split the direct followed vs follows of follows into two tiers also so that the second level of depth get less service quality
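
Something like this could express the two tiers. It's a sketch under assumptions: `follows` is a hypothetical in-memory map from a pubkey to the set of pubkeys it follows, the client has already authenticated so its pubkey is known, and the delay factors are invented numbers.

```python
# Assumed service levels: tier 1 skips delays, tier 2 gets half of them,
# and everyone outside the web of trust gets the full delay.
TIER_DELAY_FACTOR = {1: 0.0, 2: 0.5}


def wot_tier(pubkey, owner, follows):
    """Return 1 for the owner and direct follows, 2 for follows of follows,
    or None when the pubkey is outside both levels."""
    direct = follows.get(owner, set())
    if pubkey == owner or pubkey in direct:
        return 1
    for followed in direct:
        if pubkey in follows.get(followed, set()):
            return 2
    return None


def scaled_delay(base_delay, tier):
    """Scale a delay (in seconds) by the service level of the client's tier."""
    return base_delay * TIER_DELAY_FACTOR.get(tier, 1.0)
```

With `follows = {"owner": {"alice"}, "alice": {"bob"}}`, alice lands in tier 1, bob in tier 2, and anyone else gets the full delay.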

probably maybe should work on it today because this problem with scrapers is fairly annoying

it's only nostr WoT spiders at this point, i wish i could just suggest to the spider people to just open a subscription for these and catch all the new stuff live, that would be more efficient for them and less expensive for relay operators

at this point it's fairly early in the game for that stuff so maybe some education of WoT devs might help...

cc: nostr:npub176p7sup477k5738qhxx0hk2n0cty2k5je5uvalzvkvwmw4tltmeqw7vgup there is no point in doing sliding window spidering on events once you have the history, from then on just open a subscription

this would be more friendly from the AI spiders if they had a way to just catch new updates, but unless dey pay me! i'm just gonna tarpit them

Yeah, I don't get the point of spidering data you can just stream. Like, they spider Nostr websites and it's like... just subscribe to the relay. *roll eyes*
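
For anyone writing a WoT spider, "just subscribe" looks roughly like this at the NIP-01 level: one `REQ` with a `since` filter, left open so the relay keeps pushing new events after it has sent the stored ones. The helper names and the subscription id are made up, and kind 3 (follow lists) is only an example of what a WoT crawler might want. The sketch builds the messages and leaves the websocket plumbing out.

```python
import json
import time


def build_live_req(sub_id, kinds, since=None):
    """Build a NIP-01 REQ message asking for events of the given kinds
    created at or after `since` (a unix timestamp, defaulting to now)."""
    since = int(time.time()) if since is None else int(since)
    filters = {"kinds": list(kinds), "since": since}
    return json.dumps(["REQ", sub_id, filters], separators=(",", ":"))


def build_close(sub_id):
    """Build the CLOSE message that ends the subscription."""
    return json.dumps(["CLOSE", sub_id], separators=(",", ":"))


# Resume from the newest event already stored locally, then keep the socket open.
message = build_live_req("wot-live", [3], since=1700000000)
```

Setting `since` to the newest `created_at` already on disk covers the gap since the last run, so there is no reason to re-walk old time windows.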

Yeah, right now I just have a user agent list and that does most of the work. The bots that are hitting me mostly have "~bot" in the user agent field, so it's been working to stave them off for now. I have to hop in and update the list every few days, but it helps.
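
A minimal version of that kind of user agent check, in case it helps anyone. The patterns are examples only, not the actual list described above, and treating an empty user agent as allowed is an arbitrary choice.

```python
import re

# Example patterns only; a real list needs regular updates.
BLOCKED_AGENT_PATTERNS = [r"bot", r"spider", r"crawl"]


def compile_blocklist(patterns):
    """Combine the patterns into one case-insensitive regular expression."""
    return re.compile("|".join(f"(?:{p})" for p in patterns), re.IGNORECASE)


def is_blocked_agent(user_agent, blocklist):
    """Return True when the User-Agent header matches any blocked pattern."""
    if not user_agent:
        return False  # assumed policy: a missing header is not blocked here
    return blocklist.search(user_agent) is not None


blocklist = compile_blocklist(BLOCKED_AGENT_PATTERNS)
```

This only stops bots that identify themselves honestly, so it works best as a cheap first filter in front of the slower checks.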

I was interested in rDNS lookups for blocking since most of the IPs i've dealt with come from rDNS bot sources like bytedance, openai and so on.
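
The rDNS idea could look something like this. It's a sketch: the suffixes are placeholders (I haven't checked which hostnames the real crawlers resolve to), and `is_blocked_by_rdns` is a made-up name. The resolver is passed in so the matching can be tried without touching the network.

```python
import socket

# Placeholder suffixes; replace with the PTR domains seen in your own logs.
BLOCKED_SUFFIXES = (".crawler.example", ".spider.example")


def reverse_name(ip):
    """Return the PTR hostname for an IP address, or None if it has none."""
    try:
        return socket.gethostbyaddr(ip)[0].lower().rstrip(".")
    except OSError:
        return None


def is_blocked_by_rdns(ip, suffixes=BLOCKED_SUFFIXES, resolver=reverse_name):
    """Return True when the IP's reverse DNS name falls under a blocked suffix."""
    host = resolver(ip)
    if host is None:
        return False
    return any(host == s.lstrip(".") or host.endswith(s) for s in suffixes)
```

Reverse lookups are slow, so in practice the result per IP would need caching, and the lookup should happen off the request path.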
