The mega-based LLM has arrived

Discussion

A few answers are a bit silly, but it’s good to be able to retrain open models. Hopefully they keep up with the closed foundation models.

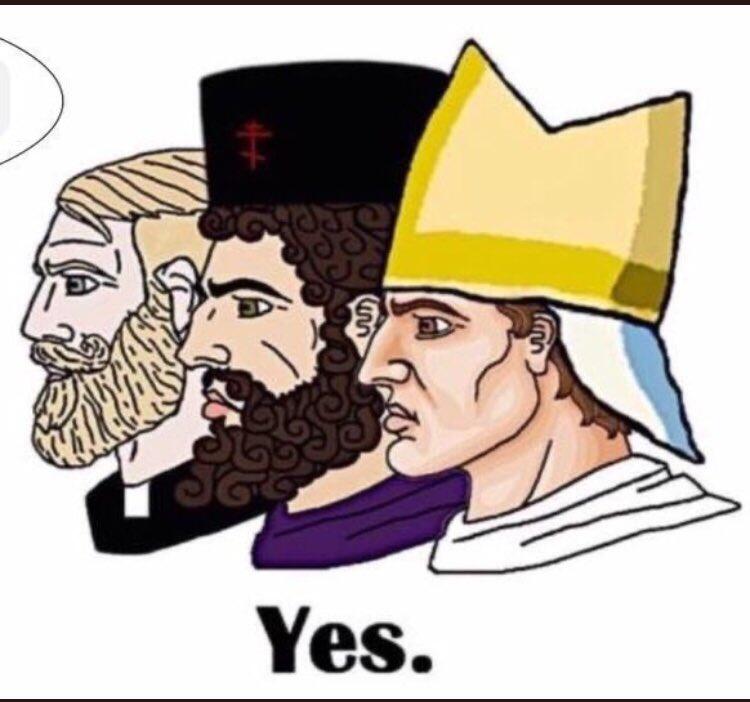

So, Nostriches are generally fans of conspiracy theories and unprovable, faith-based beliefs?