What's your bare-metal LLM setup like, nostr:npub1utx00neqgqln72j22kej3ux7803c2k986henvvha4thuwfkper4s7r50e8?

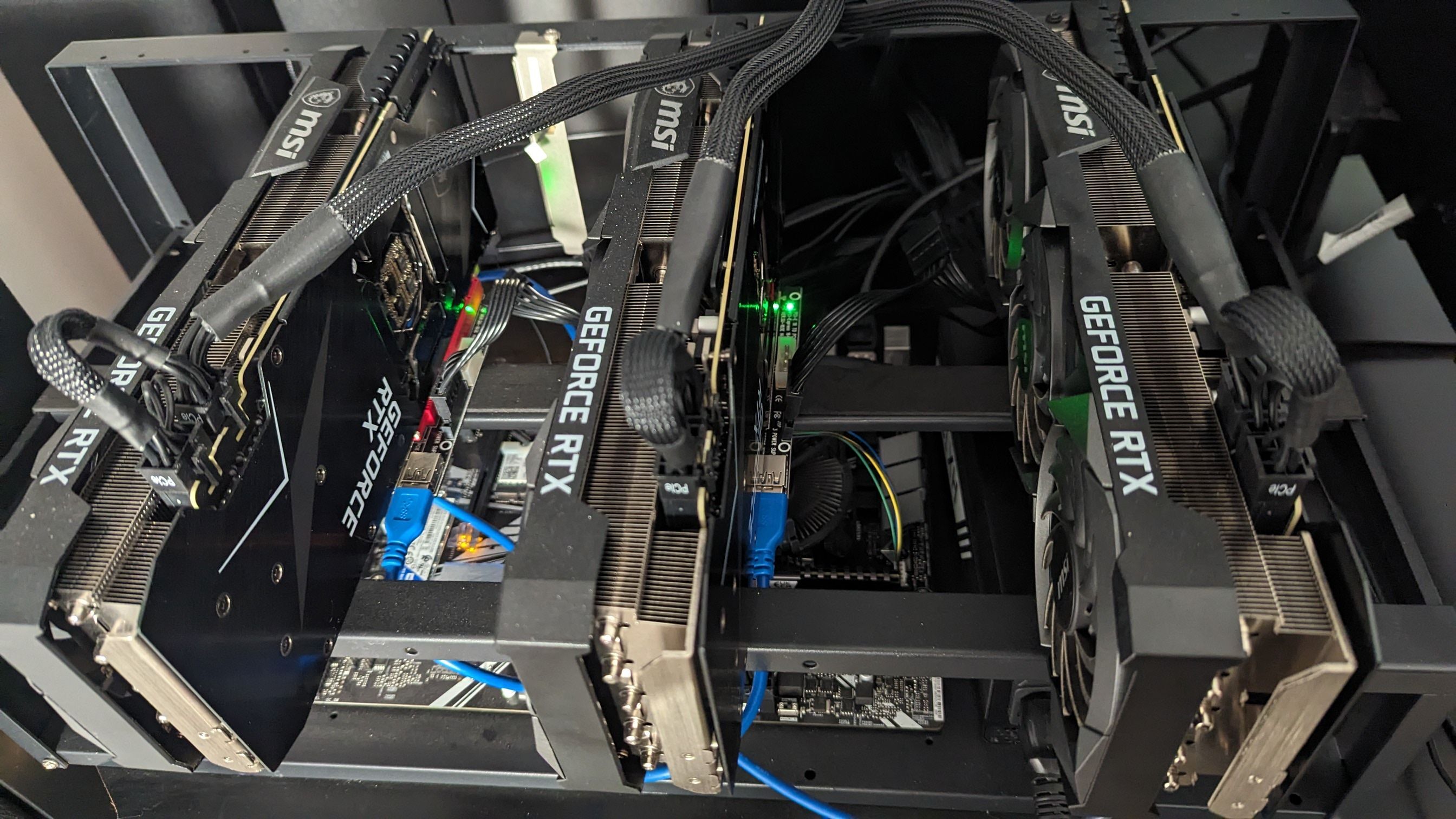

Well, I can help if you have questions. But running large LLMs locally still won't match what the large language models hosted in data centers can deliver. nostr:npub1utx00neqgqln72j22kej3ux7803c2k986henvvha4thuwfkper4s7r50e8 has more experience here: he built a rig specifically for this with three 2070s, if I remember right. He may have something to say about how well that can realistically perform.