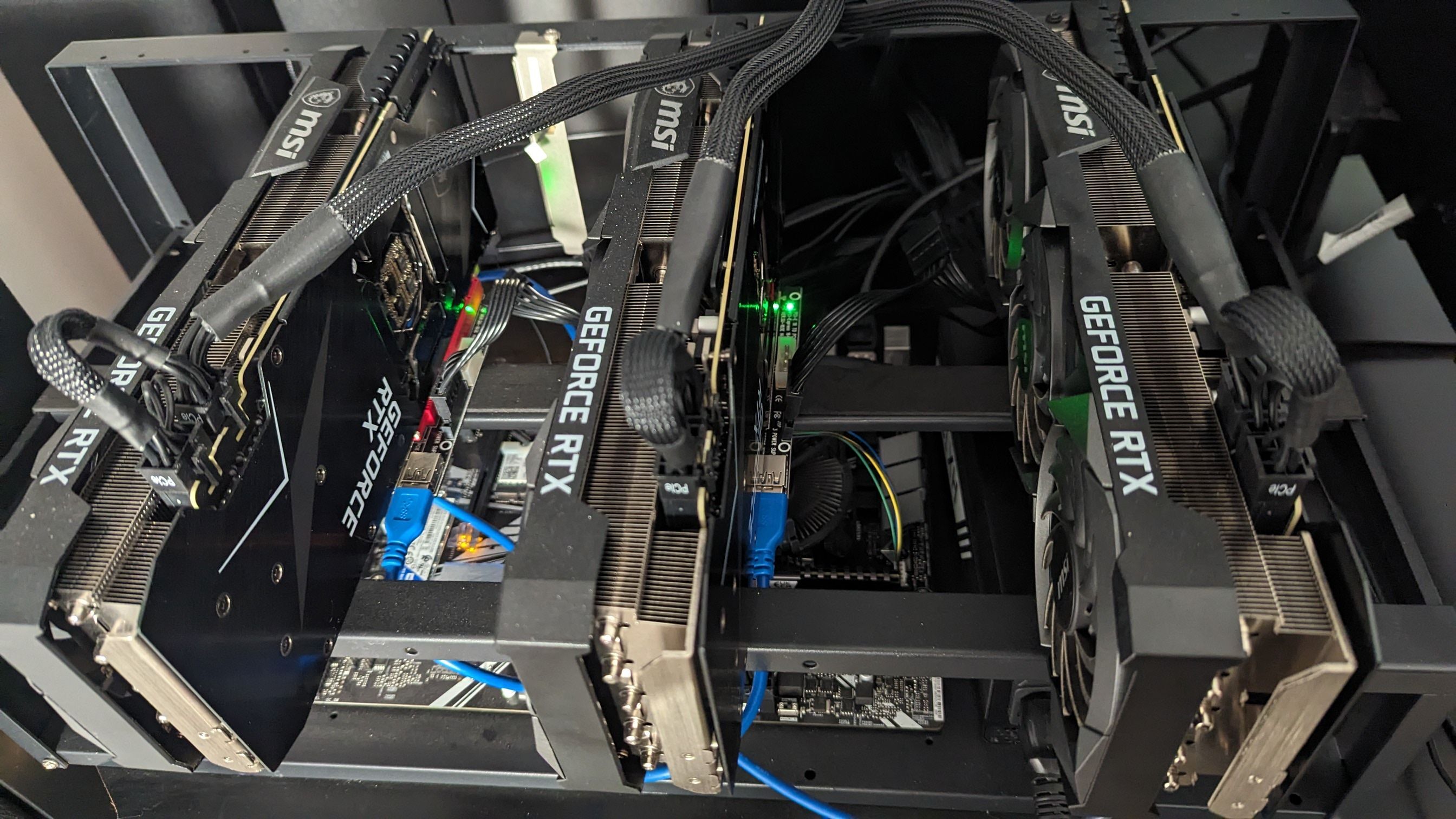

Well, I can help if you have questions. But running large LLMs locally still won't match what Large Language Models running in data centers can deliver. nostr:npub1utx00neqgqln72j22kej3ux7803c2k986henvvha4thuwfkper4s7r50e8 has more experience building a rig specifically for this, with three 2070s if I remember right. He may have something to say about how well that can realistically perform.

Discussion

Thank you. What about just a kickass gaming PC?

Like, just roughly what motherboard and CPU?

Also, pcpartpicker.com is a wonderful resource.

The GPU is pretty much all that matters, more specifically the amount of video RAM (VRAM) on the card. I incidentally got an older 16GB NVIDIA 3060 before I learned about LLMs, and it can do quite a lot for $300. I'd say you want 16GB minimum; 24GB is the best you can do on normal consumer cards.
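A back-of-the-envelope way to see why VRAM is the deciding number: a model's weights take roughly (parameter count × bits per weight ÷ 8) bytes, and the card needs some headroom on top for the KV cache and runtime buffers. The sketch below assumes a flat 20% overhead and uses illustrative model sizes; neither figure comes from this thread, and real usage varies with context length (the KV cache grows with it), the inference runtime, and the quantization format.

```python
# Rough VRAM estimate for running an LLM locally.
# Assumption: weights = params * bits / 8 bytes, plus a flat overhead
# fraction (default 20%) for KV cache and runtime buffers. Illustrative only.

def estimate_vram_gb(params_billions: float, bits_per_weight: int, overhead: float = 0.2) -> float:
    """Approximate GB of VRAM needed to hold the model plus headroom."""
    weight_gb = params_billions * bits_per_weight / 8  # 1e9 params * bytes per param ~= GB
    return weight_gb * (1 + overhead)


def fits(params_billions: float, bits_per_weight: int, vram_gb: float, overhead: float = 0.2) -> bool:
    """True if the estimated requirement fits on a card with `vram_gb` of VRAM."""
    return estimate_vram_gb(params_billions, bits_per_weight, overhead) <= vram_gb


if __name__ == "__main__":
    # Hypothetical model sizes and quantization levels, chosen for illustration.
    for params, bits in [(7, 16), (7, 4), (13, 4), (34, 4), (70, 4)]:
        need = estimate_vram_gb(params, bits)
        print(f"{params}B @ {bits}-bit: ~{need:.1f} GB | "
              f"16GB card: {fits(params, bits, 16)} | 24GB card: {fits(params, bits, 24)}")
```

Under these assumptions, a 4-bit ~34B model lands around 20 GB, which is roughly where the gap between a 16GB and a 24GB card starts to matter.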

Excellent, I'm on the right path.

My BOM at the moment is a few GPUs (3070s maybe), plus ~64GB of DDR5 RAM, 8-12 TB of NVMe M.2 SSD storage, and a NAS for backup. Probably overkill, yes, but it's already budgeted for. I don't know what motherboard or CPU to pair with something like this.

Y'all are great, thanks for the recommendations and for sharing your rigs!

What's your bare-metal LLM setup like, nostr:npub1utx00neqgqln72j22kej3ux7803c2k986henvvha4thuwfkper4s7r50e8?