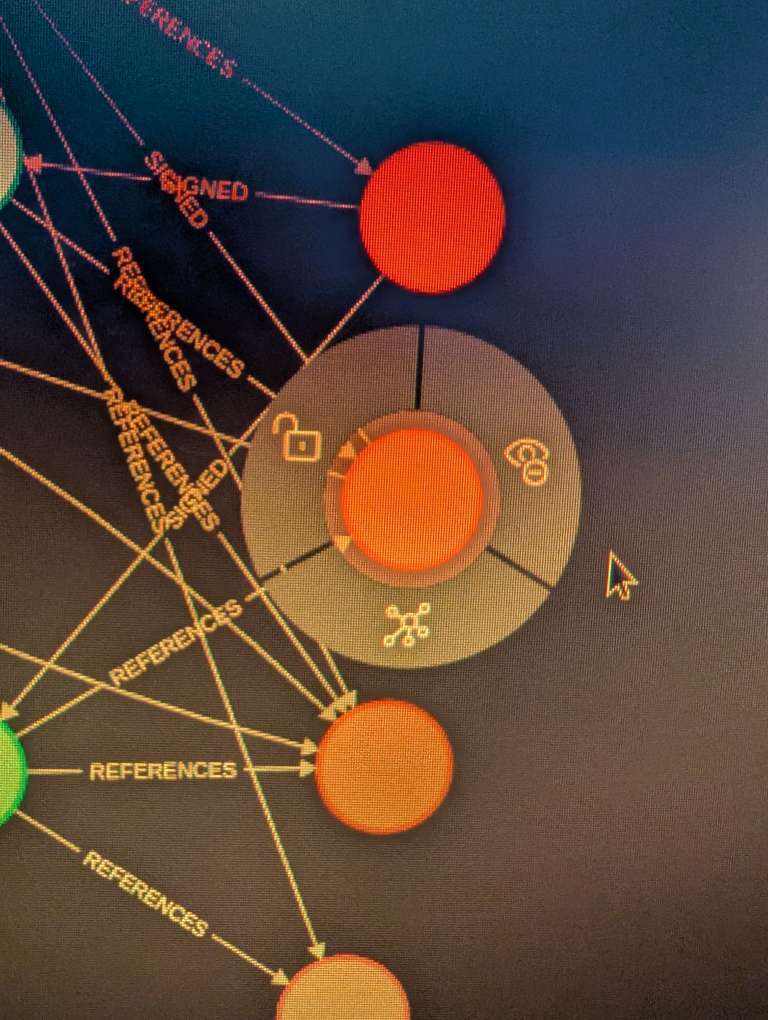

There's a lot of variation on how events are connected in Nostr. It'll take a lot of work just to get the basics down.

This is just using "e" and "p" tags as references, and I already found, just from a random query, that Gossip references both the root and the direct parent with "e" and has a value at the end of the tag to specify which is which. Keep Nostr weird I guess.

Not to mention references that only exist in the content via nostr: or @ constructs. But hey, as long as the data is there, Neo4j can handle it.

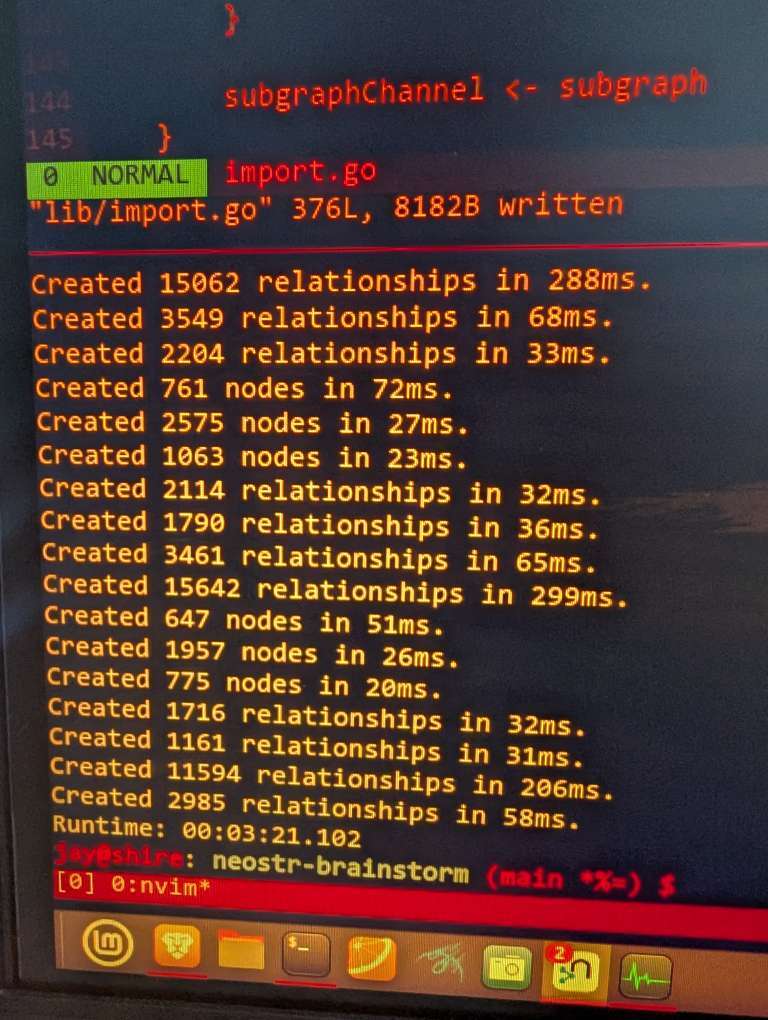

nostr:nprofile1qqsw2feday2t6vqh2hzrnwywd9v6g0yayejgx8cf83g7n3ue594pqtcpr3mhxue69uhkummnw3ezucnfw33k76twv4ezuum0vd5kzmqprfmhxue69uhhyetvv9ujuarpwpjhxarj0yhxu6twdfssz9mhwden5te0dehhxarj9enx6apwwa5h5tnzd9aqhdwwcj also, I've written a batch unwind import in Go and found that it's so fast with the right batch size and indexes that you're only limited by your IO speed in getting the events over the wire.