I’m surprised we still don’t have musig or frost widely deployed after 3 years, i thought that was one of the main benefits: smaller multisig that looks like a singlesig tx. tapscript/tree complexity seemed pretty crazy to me but i just assumed i was too dumb to see what it was useful for.

Curious how fast it is, do you have an m4 max ?

dev model should be better as well

This image is my property now. I generated it by running a model for 50 minutes. If you want to do it -

Bash command:

mflux-generate --model dev --prompt "Luxury food photograph" --steps 25 --seed 2 -q 8

Git repo: https://github.com/filipstrand/mflux

(Thanks nostr:npub1xtscya34g58tk0z605fvr788k263gsu6cy9x0mhnm87echrgufzsevkk5s)

that's a lot of steps

Sara Imari Walker quoting kevin lu(?) at 57:57

https://podcasts.apple.com/ca/podcast/the-joe-rogan-experience/id360084272?i=1000664618984

Ken lu*

“If you want to predict the future, look at things that haven’t changed in centuries”

you need to accept the terms and conditions on huggingface while logged in

also from what I understand it's mostly memory bandwidth constrained? so it's not all cores.

why not, assuming gpu cores keep increasing?

there's a convenience and power factor though. a box of gpus is annoying

there is https://github.com/raysers/Mflux-ComfyUI but I haven't tried comfyui yet

was just the example prompt in the repo:

mflux-generate --model schnell --prompt "Luxury food photograph" --steps 2 --seed 2 -q 8

generated this image on my m2 mac with 32gb of ram in about 38 seconds:

using:

https://github.com/filipstrand/mflux + schnell model

impressed... could replace my use of midjourney.

nostr:note1pnezaa9ehx0kwt5pcxn33vrx27pa2uxsakusudvrqsj42tg7nd2szwxg7e

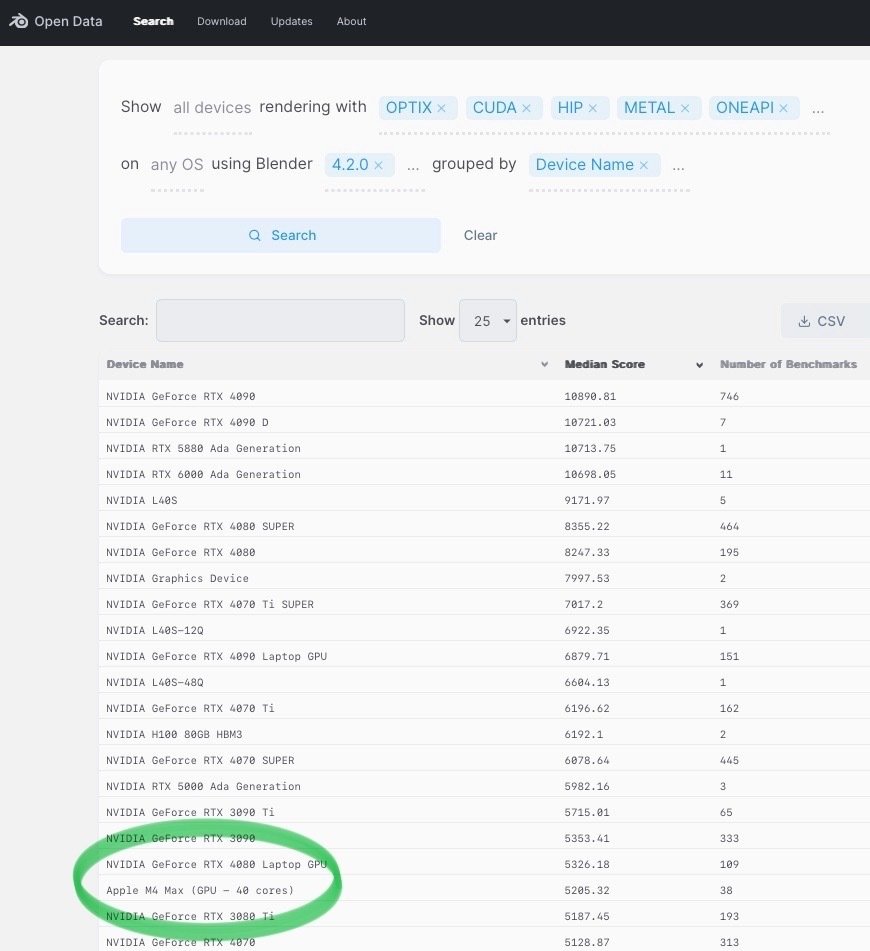

apple silicon gpu rising in benchmark scores. having an rtx 3090-comparable gpu in a laptop with 64-128gb of unified ram is going to be interesting…

especially on a “budget” compared to 64gb nvidia gpus which are like $30,000

large models require lots of gpu memory, so apple silicon seems like an increasingly interesting platform for the budding ai hacker.

M4 macs are becoming an interesting (and surprisingly cheaper) option for running local LLMs. They have lots of unified memory, integrated gpus and neural cores that are pretty good for running local models.

Git issues + project management

nostr:npub18m76awca3y37hkvuneavuw6pjj4525fw90necxmadrvjg0sdy6qsngq955 nostr:npub1xtscya34g58tk0z605fvr788k263gsu6cy9x0mhnm87echrgufzsevkk5s for some reason this post didn’t show up until I wrote a new post. Using the latest AppStore version rn. nostr:note1mzzw7nunfg7dgl62rxhey4we45hkakqd32f4ufzckj6ma9dcr7zs820pju

May fail to send initially but it will keep retrying until you have connection again. We just need to make this more obvious somehow

I gave this exact feedback on the PR review, with the addition of pulling followed hashtags from your contact list into a column

ai powers most of my learning these days. during my 1h walk a day i get ai to read articles and papers to me. I occasionally stop to talk to chatgpt and ask questions about certain topics. What a bizarre future we’re in.

Music discovery isn’t what it used to be. Fountain Radio makes it fun again.

Wave goodbye to algorithmic playlists and say hello to a new communal listening experience powered by Bitcoin and Nostr.

Fountain Radio is live in version 1.1.8 now. Here's how it works:

Add and upvote tracks in the queue ➕

Search for any song on Fountain and pay 100 sats to add it to the queue. Upvote any track in the queue to change its position. 1 sat equals 1 upvote and the track with the most upvotes will play next.
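A rough sketch of how a queue like this could be ordered, for anyone curious. This is not Fountain's code: `RadioQueue`, `Track`, and the tie-break rule (earliest added wins on equal upvotes) are assumptions made for illustration, as is the choice that the 100-sat add fee does not count as upvotes. Only the 100-sat cost and the 1 sat = 1 upvote rule come from the post.

```python
from dataclasses import dataclass
from itertools import count

ADD_COST_SATS = 100  # cost to add a track, as stated in the post


@dataclass
class Track:
    title: str
    upvotes: int = 0
    order: int = 0  # insertion order, used only for the assumed tie-break


class RadioQueue:
    """Hypothetical upvote-ordered queue (illustration only)."""

    def __init__(self):
        self._tracks = {}
        self._counter = count()

    def add(self, title):
        # Adding starts the track at zero upvotes (assumption).
        self._tracks[title] = Track(title, order=next(self._counter))
        return ADD_COST_SATS

    def upvote(self, title, sats):
        # 1 sat equals 1 upvote.
        self._tracks[title].upvotes += sats

    def next_track(self):
        # Most upvotes plays next; ties go to the earliest added (assumption).
        if not self._tracks:
            return None
        best = max(self._tracks.values(), key=lambda t: (t.upvotes, -t.order))
        del self._tracks[best.title]
        return best.title
```

With two tracks added in order and 5 sats of upvotes on the second, `next_track()` returns the second track first, then the first one.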

Support the artist currently playing ⚡️

Boost to send a payment with a message or enable streaming to send a small amount for every minute you spend listening. 95% of every boost and streaming payment goes directly to the artist currently playing.

Post in the live chat 💬

Hang out with other listeners in the live chat by connecting Nostr. You can post chat messages for free. Every time a track is added or upvoted this appears in the activity feed too.

Save tracks to your library 💜

Listen to your latest discoveries again later in the app. Tap on any content card to add a song to your library or a playlist, or see more music from that artist.

Listen to Fountain Radio on other apps 🎧

Fountain Radio now has its own RSS feed so you can tune in on any podcast app that supports live streams and Nostr live streaming platforms like nostr:npub1eaz6dwsnvwkha5sn5puwwyxjgy26uusundrm684lg3vw4ma5c2jsqarcgz.

Artist takeovers 🔒

Artists can now take control of the music and host a listening party. During a takeover, only the host can add tracks to the queue and upvotes are disabled.

The first artist takeover will be with nostr:npub19r9qrxmckj2vyk5a5ttyt966s5qu06vmzyczuh97wj8wtyluktxqymeukr on Wednesday 27th November at 12:00pm EST. Want to host a takeover? Get in touch and we will get you scheduled in.

You can also find Fountain Radio on our website here:

👀

people are so obsessed over the nips repo like it has any real power. the power comes from implementors choosing to implement things or not. the best ideas will have the highest number of implementations. just because a nip is merged doesn't mean it's a good idea

Yeah for some reason my notifications are much more accurate in Damus. Idk why but nostr:npub12vkcxr0luzwp8e673v29eqjhrr7p9vqq8asav85swaepclllj09sylpugg is always missing notifications in comparison.

It’s pretty simple why. they depend on a centralized server for all their stats and feeds, we do not. damus will always work, so people can use us when primal goes down. We will reach the same level of ux with a more robust client architecture, will just take a bit more time because it is much harder to do.

I also have this for note blocks, which are the parsed sections of a note, these are also stored in the database. this way each frame I can simply walk and interact with these structures immediately after they come out of the local database subscription and enter the timeline.
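To make the idea concrete, here is a minimal sketch of what "note blocks" could look like: the note content is split once into typed sections, and the renderer then just walks the list. This is not the actual database format or parser. The `Block` type, the four block kinds, and the regex are all assumptions for illustration, and in the real thing the parsed result would be stored alongside the note rather than recomputed.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    kind: str  # "text", "url", "mention", or "hashtag" (hypothetical kinds)
    text: str


# Simplified patterns; a real parser handles many more cases.
_TOKEN = re.compile(
    r"(?P<url>https?://\S+)"
    r"|(?P<mention>nostr:(?:npub|note)1[0-9a-z]+)"
    r"|(?P<hashtag>#\w+)"
)


def parse_blocks(content: str) -> list[Block]:
    """Split note content into blocks once, so rendering can just walk them."""
    blocks = []
    pos = 0
    for m in _TOKEN.finditer(content):
        if m.start() > pos:
            # Plain text between the previous token and this one.
            blocks.append(Block("text", content[pos:m.start()]))
        # lastgroup is the name of the alternative that matched.
        blocks.append(Block(m.lastgroup, m.group()))
        pos = m.end()
    if pos < len(content):
        blocks.append(Block("text", content[pos:]))
    return blocks


# Per-frame work is then a simple walk over already-parsed blocks.
for block in parse_blocks("gm #nostr see https://example.com"):
    print(block.kind, repr(block.text))
```

The point of the design is that the expensive step (parsing) happens once at ingestion, and the per-frame step is a cheap iteration over structures that are already in their final shape.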

You'll like this video. This guy uses lots of optimization techniques to get a PHT that runs more than twice as fast as the gperf one (the best one he found that isn't specifically constructed) https://www.youtube.com/watch?v=DMQ_HcNSOAI

great video

Right! It's cool to see what people are reading

still in it for the tech

It’s probably in the apple docs somewhere but im too lazy to find it

I'll probably do it. it's pretty simple to implement

nostr will have the best tech and the most devs, people won’t be able to ignore that for long. other clients on other protocols will run into heavy handed moderation issues due to centralization of power, government pressure, and lack of user keys. This is the main risk I see for mastodon, bluesky and big tech, regardless of current user numbers.

As long as people care about freedom and building a sovereign presence in cyberspace, nostr is inevitable.

It’s possible most people don’t actually care about these things, but at least we’ll have the best solution for people who do. Those people are the coolest to hang out with anyways.

You don’t need testflight to zap notes, you only need to run https://zap.army

nostr:npub1zafcms4xya5ap9zr7xxr0jlrtrattwlesytn2s42030lzu0dwlzqpd26k5 what happened to Friday? That was too soon?

alpha launch is end of month